Introduction

The artificial intelligence revolution has fundamentally transformed data center cooling requirements. As organizations deploy increasingly powerful GPUs and specialized AI accelerators to train and run complex models, traditional cooling approaches are reaching their limits. This comprehensive article explores the evolution of data center cooling technologies specifically for AI workloads, examining how cooling solutions have adapted to meet the unprecedented thermal challenges of modern AI infrastructure.

The AI-Driven Cooling Challenge

The exponential growth of AI has created thermal management challenges that were virtually nonexistent just a few years ago.

Problem: Modern AI hardware generates unprecedented heat density that traditional data center cooling was never designed to handle.

Today’s high-performance AI accelerators like NVIDIA’s H100 or AMD’s MI300 can generate thermal loads exceeding 700 watts per device—more than double what previous generations produced just a few years ago. When deployed in dense configurations, these heat loads can create rack densities of 50-100kW or more, far beyond what traditional data centers were designed to support.

Aggravation: The trend toward higher GPU power consumption shows no signs of abating, with next-generation AI accelerators potentially exceeding 1000W.

Further complicating matters, the computational demands driving GPU power increases continue to grow exponentially with larger AI models, creating a thermal trajectory that will further challenge cooling technologies in coming generations.

Solution: Understanding the evolution of cooling technologies enables more effective infrastructure planning and technology selection for AI deployments:

The Thermal Trajectory of AI Hardware

Tracking the rapid increase in cooling requirements:

- Historical GPU TDP Progression:

- Early AI GPU Era (2016-2018): 250-300W TDP

- Middle AI GPU Era (2019-2021): 300-400W TDP

- Current AI GPU Era (2022-2024): 350-700W TDP

- Projected Next-Gen (2025+): 600-1000W+ TDP

- Exponential rather than linear growth pattern

- Deployment Density Evolution:

- Traditional HPC: 10-20kW per rack

- Early AI clusters: 20-30kW per rack

- Current AI deployments: 30-80kW per rack

- Leading-edge AI systems: 80-150kW per rack

- Fundamental challenge to traditional cooling

- Workload Characteristics Impact:

- AI training: Sustained maximum utilization

- Extended run times (days to weeks)

- Minimal idle or low-power periods

- Synchronous operation across multiple GPUs

- Compound thermal effect in clusters
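To see how per-device TDP turns into the rack densities listed above, a rough back-of-the-envelope sketch helps. The function name and the 30% allowance for non-GPU power (CPUs, memory, NICs, fans, conversion losses) are illustrative assumptions, not vendor figures.

```python
def rack_power_kw(gpu_tdp_w, gpus_per_server, servers_per_rack, overhead_fraction=0.3):
    """Estimate rack power in kW from GPU TDP and packing density.

    overhead_fraction is a hypothetical allowance for everything in the
    server that is not a GPU; real values depend on the platform.
    """
    gpu_power_w = gpu_tdp_w * gpus_per_server
    server_power_w = gpu_power_w * (1.0 + overhead_fraction)
    return server_power_w * servers_per_rack / 1000.0


# Illustrative sweep: 8 GPUs of 700 W per server, varying servers per rack.
for servers in (4, 8, 12):
    kw = rack_power_kw(700, 8, servers)
    print(f"{servers} servers x 8 GPUs x 700 W -> {kw:.1f} kW per rack")
```

Under these assumptions, eight such servers already land at roughly 58 kW per rack, which is inside the "current AI deployments" band without any exotic packing.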

Here’s what makes this fascinating: The thermal output of AI accelerators has grown far faster than that of traditional computing hardware. While traditional hardware typically sees 15-20% power increases per generation, AI accelerators have experienced 50-100% TDP increases across recent generations, at least 2.5x the conventional pace. This accelerated thermal evolution reflects a fundamental shift in design philosophy, where performance is prioritized even at the cost of significantly higher power consumption and thermal output.

The Physics of Cooling Limitations

Understanding the fundamental constraints that drive cooling evolution:

- Air Cooling Physical Limitations:

- Specific heat capacity of air (1.005 kJ/kg·K)

- Volumetric constraints on airflow

- Temperature delta requirements

- Fan power and noise limitations

- Practical upper limit around 350-400W per device

- Thermal Transfer Efficiency Comparison:

- Air cooling: Baseline efficiency

- Direct liquid cooling: 3-5x more efficient than air

- Single-phase immersion cooling: 5-10x more efficient than air

- Two-phase immersion: 10-15x more efficient than air

- Non-linear efficiency advantage with increasing TDP

- Density and Proximity Effects:

- Thermal coupling between adjacent devices

- Recirculation and preheating challenges

- Airflow impedance in dense configurations

- Compound effect in multi-GPU systems

- Exponential rather than linear challenge
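The air-cooling limits above follow from a simple energy balance: heat removed equals mass flow times specific heat times temperature rise. The sketch below turns that into the airflow a single device needs. The air density of 1.2 kg/m³ (sea level, about 20°C) and the 15 K allowable air temperature rise are assumptions chosen for illustration.

```python
AIR_CP_J_PER_KG_K = 1005.0   # specific heat of air (1.005 kJ/kg·K, as listed above)
AIR_DENSITY_KG_M3 = 1.2      # assumed: sea level, roughly 20 °C
M3S_TO_CFM = 2118.88         # cubic metres per second -> cubic feet per minute


def airflow_cfm(heat_w, delta_t_k, density=AIR_DENSITY_KG_M3):
    """Airflow (CFM) needed to carry heat_w watts with an air temperature rise of delta_t_k."""
    mass_flow_kg_s = heat_w / (AIR_CP_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_s / density * M3S_TO_CFM


for tdp in (250, 400, 700, 1000):
    print(f"{tdp} W at 15 K rise -> {airflow_cfm(tdp, 15):.0f} CFM per device")
```

With these inputs a 700W device needs on the order of 80 CFM on its own, and that requirement scales linearly with TDP while the space available for fans and heatsinks does not.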

But here’s an interesting phenomenon: The efficiency advantage of advanced cooling over air cooling increases non-linearly with TDP. For 250W GPUs, liquid cooling might offer a 30-40% efficiency advantage. For 500W GPUs, this advantage typically grows to 60-80%, and for 700W+ devices, liquid cooling can be 3-5x more efficient than even the most advanced air cooling. This expanding advantage creates an economic inflection point where the additional cost of advanced cooling is increasingly justified by performance and efficiency benefits as TDP increases.

Performance and Reliability Implications

The critical relationship between cooling and AI system effectiveness:

- Thermal Impact on AI Performance:

- Thermal throttling reduces computational capacity

- Performance reductions of 10-30% during throttling

- Training convergence affected by performance inconsistency

- Inference latency increases during thermal events

- Economic impact of reduced computational efficiency

- Reliability Considerations:

- Rule of thumb: each 10°C increase roughly doubles failure rates

- Thermal cycling creates mechanical stress

- Memory errors increase at elevated temperatures

- Power delivery components vulnerable to thermal stress

- Economic impact of hardware failures and replacements

- Operational Stability Requirements:

- AI workloads require consistent performance

- Reproducibility challenges with variable thermal conditions

- Production deployment stability expectations

- 24/7 operation for many AI systems

- Business continuity considerations
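The "doubles every 10°C" reliability heuristic can be written as a one-line formula. This is only a sketch of that rule of thumb; actual failure behaviour depends on the component and failure mechanism, and the function name is illustrative.

```python
def failure_rate_multiplier(delta_t_c, doubling_interval_c=10.0):
    """Relative failure rate after a temperature change of delta_t_c (°C),
    assuming the rate doubles every doubling_interval_c degrees."""
    return 2.0 ** (delta_t_c / doubling_interval_c)


for delta in (-10, 0, 5, 10, 20):
    print(f"{delta:+d} °C -> {failure_rate_multiplier(delta):.2f}x baseline failure rate")
```

The same function run with a negative delta shows why even modest temperature reductions are attractive: the heuristic implies a 10°C drop halves the failure rate.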

Impact of Cooling Quality on AI Infrastructure

| Cooling Quality | Temperature Range | Performance Impact | Reliability Impact | Operational Impact |
|---|---|---|---|---|
| Inadequate | 85-95°C+ | Severe throttling, 30-50% performance loss | 2-3x higher failure rate | Unstable, frequent interruptions |
| Borderline | 75-85°C | Intermittent throttling, 10-30% performance loss | 1.5-2x higher failure rate | Periodic issues, inconsistent performance |
| Adequate | 65-75°C | Minimal throttling, 0-10% performance impact | Baseline failure rate | Generally stable with occasional issues |
| Optimal | 45-65°C | Full performance, potential for overclocking | 0.5-0.7x failure rate | Consistent, reliable operation |
| Premium | <45°C | Maximum performance, sustained boost clocks | 0.3-0.5x failure rate | Exceptional stability and longevity |

Ready for the fascinating part? Research indicates that inadequate cooling can reduce the effective computational capacity of AI infrastructure by 15-40%, essentially negating much of the performance advantage of premium GPU hardware. This “thermal tax” means that organizations may be realizing only 60-85% of their theoretical computing capacity due to cooling limitations, fundamentally changing the economics of AI infrastructure. When combined with the reliability impact, the total cost of inadequate cooling can exceed the price premium of advanced cooling solutions within the first year of operation for high-utilization AI systems.
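The "thermal tax" arithmetic is easy to reproduce. The sketch below computes effective capacity for a given throttling loss and a simple payback period for a cooling upgrade. All dollar figures and the function names are hypothetical placeholders, not data from any deployment.

```python
def effective_capacity(nominal_gpus, thermal_loss_fraction):
    """GPU-equivalents actually delivered after thermal throttling losses."""
    return nominal_gpus * (1.0 - thermal_loss_fraction)


def payback_months(cooling_premium, monthly_value_at_full_capacity, thermal_loss_fraction):
    """Months until the value recovered by better cooling covers its price premium."""
    if thermal_loss_fraction <= 0:
        raise ValueError("no thermal loss means nothing to recover")
    monthly_loss = monthly_value_at_full_capacity * thermal_loss_fraction
    return cooling_premium / monthly_loss


# Hypothetical cluster: 1,000 GPUs, losses at both ends of the 15-40% range.
for loss in (0.15, 0.40):
    print(f"{loss:.0%} loss -> {effective_capacity(1000, loss):.0f} effective GPUs")

# Hypothetical economics: $2M cooling premium, $1M/month of compute value, 25% loss.
print(f"payback: {payback_months(2_000_000, 1_000_000, 0.25):.1f} months")
```

The point of the sketch is the structure of the comparison, not the inputs: plugging in real utilization and pricing figures is what determines whether payback actually falls inside the first year.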

Traditional Air Cooling: Capabilities and Limitations

Air cooling remains the most widely deployed approach for data center thermal management, though it faces increasing challenges with modern AI hardware.

Problem: Traditional air cooling struggles to effectively dissipate the thermal output of modern AI accelerators, particularly in dense deployments.

The fundamental physics of air cooling—limited thermal capacity of air and constraints on airflow volume—create inherent limitations that are increasingly challenged by GPUs generating 400-700+ watts of heat.

Aggravation: Density requirements for AI clusters exacerbate air cooling challenges by limiting airflow and creating compound heating effects.

Further complicating matters, AI deployments typically cluster multiple high-power GPUs in close proximity, creating thermal interaction effects where the heat from one device affects others, further reducing cooling effectiveness.

Solution: Understanding the capabilities and limitations of air cooling enables more informed decisions about its appropriate application for AI infrastructure:

Traditional Data Center Air Cooling

The evolution of conventional approaches:

- Computer Room Air Conditioning (CRAC):

- Perimeter-based cooling units

- Raised floor air distribution

- Return air paths and considerations

- Temperature and humidity control

- Typical cooling capacity: 3-5 kW per rack

- Computer Room Air Handler (CRAH):

- Chilled water-based systems

- Higher efficiency than direct expansion CRAC

- Separation of heat generation and rejection

- Facility water system integration

- Typical cooling capacity: 5-8 kW per rack

- Hot/Cold Aisle Containment:

- Airflow management optimization

- Prevention of hot/cold air mixing

- Increased cooling efficiency

- Higher inlet air temperature potential

- Typical cooling capacity: 8-12 kW per rack

Here’s what makes this fascinating: Traditional data center cooling was designed for an era when a “high-density” rack consumed 5-8kW of power. Modern AI racks can exceed 80kW—a 10-15x increase that fundamentally breaks the assumptions underlying traditional cooling design. This mismatch creates a situation where facilities designed just 5-7 years ago may be able to utilize less than 25% of their physical rack space for AI workloads due to cooling limitations rather than space constraints.
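The stranded-space effect can be estimated with a simple ratio. This sketch assumes, as a simplification, that a hall's cooling budget is spread evenly across rack positions and can be pooled freely. The function name and example values are illustrative.

```python
def usable_rack_fraction(design_kw_per_rack, ai_rack_kw):
    """Fraction of rack positions that can be filled with AI racks before
    the hall's cooling budget (design_kw_per_rack x positions) is exhausted."""
    return min(1.0, design_kw_per_rack / ai_rack_kw)


for ai_kw in (20, 40, 80):
    frac = usable_rack_fraction(8, ai_kw)
    print(f"8 kW/rack design, {ai_kw} kW AI racks -> {frac:.0%} of positions usable")
```

In practice airflow distribution limits how freely cooling can be pooled, so the real usable fraction is often lower than this upper bound.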

Advanced Air Cooling Approaches

Pushing the boundaries of air cooling capabilities:

- In-Row Cooling:

- Cooling units placed within server rows

- Shorter air paths

- Targeted cooling for high-density zones

- Reduced mixing and recirculation

- Typical cooling capacity: 12-20 kW per rack

- Rear Door Heat Exchangers:

- Water-cooled door added to standard racks

- Captures and removes heat at rack level

- Compatible with standard servers

- Minimal facility modifications

- Typical cooling capacity: 20-35 kW per rack

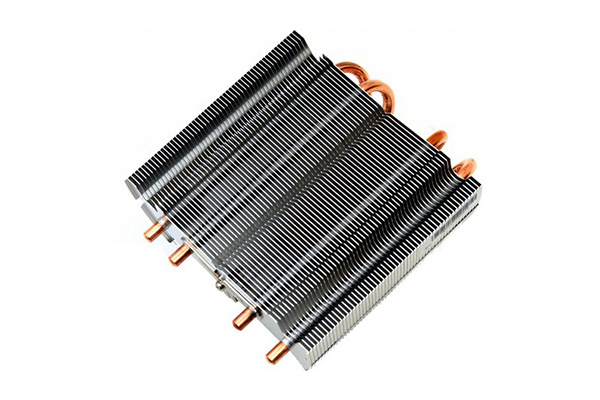

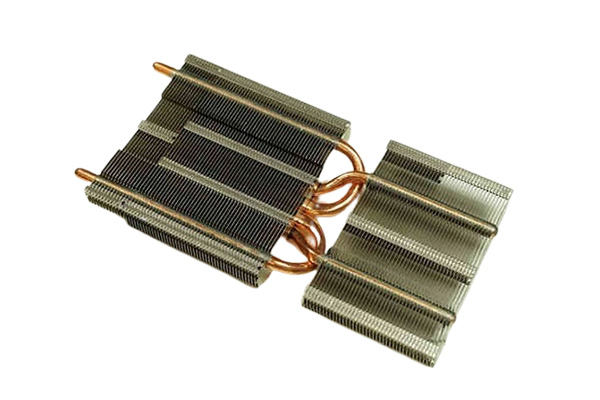

- Direct Chip Air Cooling:

- Advanced heatsink designs

- Heat pipe and vapor chamber technologies

- High-performance fans

- Optimized airflow patterns

- Cooling capacity: up to 350-400W per GPU

But here’s an interesting phenomenon: The effectiveness of advanced air cooling varies significantly with altitude. At sea level, these solutions may effectively cool 350-400W devices. However, at data center locations above 3,000 feet elevation, cooling capacity can decrease by 10-15% due to lower air density. At elevations above 6,000 feet, capacity may decrease by 20-30%, creating situations where cooling solutions that work perfectly in coastal data centers may fail in mountain or high-plateau locations.
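The density part of the altitude effect can be approximated with the International Standard Atmosphere model for the troposphere. The sketch assumes standard-day conditions and that air-cooling capacity scales directly with air density at a fixed volumetric airflow. It ignores changes in fan and heatsink behaviour, so it is a partial estimate.

```python
FT_TO_M = 0.3048
ISA_SEA_LEVEL_TEMP_K = 288.15
ISA_LAPSE_RATE_K_PER_M = 0.0065
ISA_DENSITY_EXPONENT = 4.25588   # g*M/(R*L) - 1 for the standard troposphere


def air_density_ratio(altitude_ft):
    """Air density relative to sea level under the ISA troposphere model."""
    altitude_m = altitude_ft * FT_TO_M
    temp_ratio = 1.0 - ISA_LAPSE_RATE_K_PER_M * altitude_m / ISA_SEA_LEVEL_TEMP_K
    return temp_ratio ** ISA_DENSITY_EXPONENT


for feet in (0, 3000, 6000):
    loss = 1.0 - air_density_ratio(feet)
    print(f"{feet} ft -> density-driven capacity loss of about {loss:.0%}")
```

Density alone accounts for a loss of roughly 8-9% at 3,000 feet and 16-17% at 6,000 feet, so a cooling design validated at sea level should be re-checked against site elevation rather than assumed to carry over.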

Practical Limitations for AI Workloads

Understanding the boundaries of air cooling for AI applications:

- Physical and Practical Limitations:

- Thermal capacity of air (specific heat capacity)

- Volumetric constraints on airflow

- Fan power and noise limitations

- Temperature delta requirements

- Practical upper limit around 350-400W per GPU

- Deployment Density Constraints:

- 250W GPUs: Up to 8 per 2U server (air cooling)

- 350W GPUs: Up to 4-6 per 2U server (air cooling)

- 400W+ GPUs: Maximum 2-4 per 2U server (air cooling)

- Rack density limitations: 15-25kW typical maximum

- Significant spacing requirements between components

- Operational Challenges:

- Dust accumulation and maintenance requirements

- Filter replacement schedules

- Fan failure detection and redundancy

- Airflow monitoring and management

- Temperature variation across devices

Air Cooling Capabilities for AI Hardware

| GPU TDP Range | Cooling Effectiveness | Density Impact | Energy Efficiency | Noise Level | Recommended For |
|---|---|---|---|---|---|
| 200-250W | Good | Minimal constraints | Moderate | Moderate | General-purpose computing |
| 250-350W | Adequate | Moderate constraints | Low-Moderate | High | Budget-constrained deployments |
| 350-450W | Borderline | Significant constraints | Low | Very High | Only when absolutely necessary |
| 450W+ | Inadequate | Severe constraints | Very Low | Extreme | Not recommended |

Ready for the fascinating part? The most sophisticated air-cooled AI deployments are now implementing dynamic workload scheduling based on thermal conditions. These systems continuously monitor temperature across GPU clusters and intelligently distribute workloads to maintain optimal thermal conditions. This “thermally-aware scheduling” can improve effective cooling capacity by 15-25% compared to static approaches, extending the viability of air cooling for higher-TDP devices. However, this approach introduces computational overhead and complexity that must be balanced against the cooling benefits.
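A minimal sketch of the idea behind thermally-aware scheduling is a greedy placer that always sends the next job to the coolest GPU. Everything here is a simplifying assumption: the per-job temperature-rise estimates, the 80°C limit, and the function name are hypothetical, and production schedulers use far richer thermal models.

```python
import heapq


def assign_jobs(gpu_temps_c, job_heat_rises_c, limit_c=80.0):
    """Greedy thermally-aware placement.

    gpu_temps_c: dict of gpu_id -> current temperature in °C
    job_heat_rises_c: estimated temperature rise (°C) each job would cause
    Returns a list of (job_index, gpu_id), with gpu_id None for deferred jobs.
    """
    heap = [(temp, gpu_id) for gpu_id, temp in gpu_temps_c.items()]
    heapq.heapify(heap)
    placements = []
    # Place the hottest jobs first so they get the coolest devices.
    order = sorted(range(len(job_heat_rises_c)), key=lambda i: -job_heat_rises_c[i])
    for job_index in order:
        rise = job_heat_rises_c[job_index]
        # If even the coolest GPU would exceed the limit, no GPU can take the job.
        if not heap or heap[0][0] + rise > limit_c:
            placements.append((job_index, None))
            continue
        temp, gpu_id = heap[0]
        heapq.heapreplace(heap, (temp + rise, gpu_id))
        placements.append((job_index, gpu_id))
    return placements


print(assign_jobs({"gpu0": 60.0, "gpu1": 70.0, "gpu2": 55.0}, [10.0, 20.0, 5.0]))
```

The heap keeps the coolest GPU at the front, so each placement costs O(log n). The monitoring and bookkeeping this requires is the overhead the paragraph above refers to.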

The Rise of Liquid Cooling for AI

Liquid cooling has emerged as the preferred solution for high-density AI deployments, offering superior thermal performance and efficiency compared to air cooling.

Problem: The thermal output of modern AI accelerators exceeds the practical capabilities of air cooling, necessitating more effective heat transfer methods.

With thermal densities exceeding 0.5-1.0 W/mm² and total package power reaching 400-700+ watts, modern AI GPUs generate heat beyond what air cooling can effectively dissipate, particularly in dense deployments.

Aggravation: The trend toward higher GPU power consumption shows no signs of abating, with next-generation AI accelerators potentially exceeding 1000W.

As noted in the introduction, the computational demands of ever-larger AI models continue to push GPU power upward, so the gap between accelerator heat output and what air cooling can remove will keep widening in coming generations.

Solution: Liquid cooling technologies offer significantly higher thermal transfer efficiency, enabling effective cooling of even the highest-power AI accelerators:

Direct Liquid Cooling Fundamentals

Understanding the principles and implementation of direct liquid cooling:

- Operating Principles:

- Direct contact between cooling plates and heat sources

- Liquid circulation through cooling plates

- Heat transfer to facility cooling systems

- Closed-loop vs. facility water implementations

- Temperature, flow, and pressure management

- Thermal Advantages:

- Water’s superior thermal capacity (about 4x air per unit mass, and roughly 3,500x per unit volume)

- Higher heat transfer coefficients

- More efficient transport of thermal energy

- Reduced temperature differentials

- Effective cooling of 600W+ devices

- System Components:

- Cold plates (direct contact with GPUs)

- Manifolds and distribution systems

- Pumps and circulation equipment

- Heat exchangers

- Monitoring and control systems
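The thermal advantage of liquid shows up clearly when the energy balance (heat equals mass flow times specific heat times temperature rise) is solved for coolant flow. The sketch assumes pure water and a 10 K coolant temperature rise across the cold plate. Real loops often use water-glycol mixtures with somewhat lower specific heat, so treat the result as an optimistic estimate.

```python
WATER_CP_J_PER_KG_K = 4186.0     # approximate specific heat of liquid water
WATER_DENSITY_KG_PER_L = 0.997   # approximate density near 25 °C


def coolant_flow_lpm(heat_w, delta_t_k):
    """Water flow (litres per minute) needed to remove heat_w watts
    with a coolant temperature rise of delta_t_k."""
    mass_flow_kg_s = heat_w / (WATER_CP_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_s / WATER_DENSITY_KG_PER_L * 60.0


for tdp in (400, 700, 1000):
    print(f"{tdp} W at 10 K rise -> {coolant_flow_lpm(tdp, 10):.2f} L/min per cold plate")
```

Under these assumptions a 700W device needs only about one litre of water per minute, which is why cold plates and small-bore tubing can replace large heatsinks and high-speed fans.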

Here’s what makes this fascinating: As noted earlier, the efficiency advantage of liquid cooling over air grows non-linearly with TDP: roughly 30-40% for 250W GPUs, 60-80% for 500W GPUs, and 3-5x for 700W+ devices, even against the most advanced air cooling. This widening gap creates an economic inflection point where the additional cost of liquid cooling is increasingly justified by performance and efficiency benefits as TDP increases.

Evolution of Liquid Cooling for AI

Tracing the development of liquid cooling specifically for AI applications:

- Early Adoption Phase (2016-2019):

- Limited deployments for specialized applications

- Custom, non-standardized implementations

- Primarily for HPC rather than commercial AI

- Significant implementation complexity

- Limited vendor ecosystem and support

- Mainstream Transition (2019-2022):

- Growing adoption for high-density AI

- Standardization of components and interfaces

- Commercial product maturation

- Simplified implementation approaches

- Expanded vendor ecosystem

- Current State (2022-Present):

- Standard approach for high-performance AI

- Mature product offerings from major vendors

- Simplified deployment methodologies

- Comprehensive support ecosystems

- Proven reliability and performance

But here’s an interesting phenomenon: The adoption curve for liquid cooling in AI has been approximately 2-3x faster than previous cooling technology transitions in the data center industry. While technologies like hot-aisle containment took 7-10 years to move from early adoption to mainstream implementation, liquid cooling has made this transition in just 3-4 years for AI applications. This accelerated adoption reflects both the critical need created by AI thermal demands and the substantial performance and economic benefits that liquid cooling enables for these workloads.

Implementation Architectures

Different approaches to liquid cooling deployment:

- Direct-to-Chip (Cold Plate) Cooling:

- Cooling plates attached directly to GPUs

- Targeted cooling of highest-heat components

- Other components typically air-cooled

- Balance of implementation complexity and effectiveness

- Most common approach for AI infrastructure

- Complete Liquid Cooling:

- Liquid cooling for all major heat-generating components

- GPUs, CPUs, memory, power delivery

- Minimal or no internal server fans

- Maximum cooling efficiency

- Higher implementation complexity

- Facility Integration Options:

- Direct facility water connection

- Cooling Distribution Unit (CDU) implementation

- Rack-level closed loops

- Hybrid approaches

- Trade-offs between simplicity and isolation

Liquid Cooling Architecture Comparison for AI

| Architecture | Cooling Capacity | Implementation Complexity | Facility Impact | Maintenance Requirements | Best For |
|---|---|---|---|---|---|
| Direct-to-Chip (GPUs only) | High | Moderate | Moderate | Moderate | Balanced approach, mixed workloads |
| Complete Liquid Cooling | Very High | High | Significant | Moderate-High | Maximum density, highest-TDP devices |
| Direct Facility Water | High | Moderate | High | Low-Moderate | Simplicity, lower initial cost |
| CDU with Secondary Loop | High | High | Moderate | Moderate | Isolation from facility water |
| Rack-Level Closed Loop | Moderate-High | Moderate | Low | Moderate-High | Minimal facility impact, flexibility |

Performance and Efficiency Advantages

Quantifying the benefits of liquid cooling for AI:

- Thermal Performance Improvements:

- GPU temperature reductions of 20-40°C

- Elimination of thermal throttling

- Consistent performance under sustained load

- Potential for higher boost clocks

- Support for highest-TDP accelerators

- Energy Efficiency Benefits:

- Reduced or eliminated fan power

- Higher allowable facility temperatures

- Lower refrigeration requirements

- Potential for heat reuse

- Typical PUE reductions of 0.2-0.4

- Density and Scaling Advantages:

- 2-3x higher rack densities possible

- Reduced data center footprint

- Lower infrastructure costs per GPU

- Improved cluster connectivity

- Future-proofing for next-gen hardware
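PUE (Power Usage Effectiveness) is total facility power divided by IT power, so a change in PUE translates directly into facility overhead. The sketch below works through one illustrative case. The 1 MW IT load and the 1.6 and 1.3 PUE values are assumptions picked to show a 0.3 reduction, not measurements.

```python
def facility_power_kw(it_power_kw, pue):
    """Total facility power implied by an IT load and a PUE value."""
    return it_power_kw * pue


def overhead_savings_kw(it_power_kw, pue_before, pue_after):
    """Facility power saved when PUE drops and the IT load stays the same."""
    return it_power_kw * (pue_before - pue_after)


it_kw = 1000.0
before, after = 1.6, 1.3
saved = overhead_savings_kw(it_kw, before, after)
share = saved / facility_power_kw(it_kw, before)
print(f"PUE {before} -> {after} at {it_kw:.0f} kW IT load saves {saved:.0f} kW "
      f"({share:.1%} of facility power)")
```

Because the savings scale with IT load, the same PUE reduction is worth proportionally more in a dense AI hall than in a lightly loaded enterprise room.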

Ready for the fascinating part? The operational reliability of modern liquid cooling systems now exceeds that of traditional air cooling in many deployments. While early liquid cooling implementations raised concerns about leaks and reliability, data from large-scale deployments shows that current enterprise-grade liquid cooling solutions experience 70-80% fewer cooling-related failures than equivalent air-cooled systems. This reliability advantage stems from fewer moving parts (elimination of multiple fans), reduced dust-related issues, and more consistent operating temperatures. This reversal of the traditional reliability assumption is fundamentally changing risk assessments for cooling technology selection.

Immersion Cooling: The Ultimate Solution?

Immersion cooling represents the frontier of thermal management for the most demanding AI workloads, offering unmatched performance and efficiency.

Problem: Even direct liquid cooling faces challenges with the highest-density AI deployments and next-generation accelerators.

As GPU power consumption approaches and potentially exceeds 1000W per device, even traditional liquid cooling approaches face increasing implementation challenges and efficiency limitations.

Aggravation: The trend toward specialized AI hardware with non-standard form factors creates additional cooling challenges.

Further complicating matters, the emergence of custom AI accelerators, specialized AI ASICs, and heterogeneous computing systems creates cooling requirements that traditional approaches struggle to address uniformly and efficiently.

Solution: Immersion cooling provides a comprehensive solution that addresses current extreme cooling requirements while offering headroom for future generations:

Immersion Cooling Fundamentals

Understanding the principles and implementation of immersion cooling:

- Operating Principles:

- Complete immersion of computing hardware in dielectric fluid

- Direct contact between fluid and all components

- Elimination of thermal interfaces for most components

- Convection-based heat transfer within the fluid

- Heat extraction through fluid circulation and heat exchangers

- Thermal Advantages:

- Elimination of hotspots through uniform cooling

- Superior cooling for irregular form factors

- Elimination of air as a thermal transfer medium

- Reduced temperature differentials across components

- Effective cooling regardless of component arrangement

- System Components:

- Immersion tanks and containment systems

- Dielectric cooling fluids

- Circulation and pumping systems

- Heat rejection equipment

- Filtration and fluid maintenance systems

Here’s what makes this fascinating: Immersion cooling fundamentally changes the relationship between component density and cooling efficiency. In traditional cooling, increasing density creates compound cooling challenges as components affect each other’s thermal environment. In immersion systems, cooling efficiency remains relatively constant regardless of component density, enabling theoretical density improvements of 5-10x compared to air cooling. This density advantage creates cascading benefits for facility space utilization, interconnect latency, and overall system performance.

Single-Phase vs. Two-Phase Immersion

Comparing the two primary approaches to immersion cooling:

- Single-Phase Immersion:

- Non-boiling fluid operation

- Circulation-based heat transfer

- Simpler implementation and management

- Lower cooling efficiency than two-phase

- More mature technology with broader adoption

- Two-Phase Immersion:

- Fluid boiling at component surfaces

- Phase-change heat transfer (highly efficient)

- Passive circulation through convection

- Higher cooling efficiency

- More complex fluid management

- Comparative Considerations:

- Cooling efficiency: Two-phase 20-40% more efficient

- Implementation complexity: Single-phase simpler

- Fluid cost: Two-phase typically higher

- Operational experience required: Two-phase more demanding

- Future scaling capability: Two-phase superior

But here’s an interesting phenomenon: The efficiency advantage of two-phase immersion over single-phase varies significantly with heat density. For moderate-density deployments (15-25 kW per rack equivalent), the efficiency difference might be only 10-15%. For extreme density deployments (50+ kW per rack equivalent), the advantage can grow to 30-50%. This variable efficiency delta creates deployment scenarios where single-phase is more economical for moderate deployments while two-phase becomes increasingly advantageous for the highest densities.

Implementation Considerations

Practical aspects of deploying immersion cooling for AI:

- Hardware Compatibility:

- Component selection and qualification

- Server design modifications

- Connector and cabling adaptations

- Storage media considerations

- Warranty and support implications

- Facility Requirements:

- Floor loading capabilities (significantly higher)

- Fluid handling and storage infrastructure

- Heat rejection integration

- Electrical and safety considerations

- Operational space requirements

- Operational Procedures:

- Hardware installation and removal

- Fluid maintenance and monitoring

- Leak prevention and management

- Staff training requirements

- Emergency response protocols

Immersion Cooling Implementation Factors

| Factor | Single-Phase | Two-Phase | Considerations |
|---|---|---|---|
| Initial Cost | $$$$ | $$$$$ | Higher than direct liquid cooling, significant facility impact |
| Operational Cost | $ | $ | Very efficient, minimal ongoing costs |
| Fluid Cost | $$-$$$ | $$$-$$$$ | Significant initial investment, periodic replacement |
| Density Capability | Very High | Extreme | 5-10x air cooling density potential |
| Implementation Complexity | High | Very High | Specialized expertise required |
| Hardware Compatibility | Good | Moderate | Some components may require modification |
| Maintenance Complexity | Moderate | High | Specialized procedures and training |
| Future Scalability | Excellent | Outstanding | Virtually unlimited thermal capacity |

Economic and Performance Benefits

Quantifying the advantages of immersion cooling for AI:

- Thermal Performance:

- GPU temperature reductions of 30-50°C vs. air cooling

- Complete elimination of thermal throttling

- Maximum boost clock sustainability

- Potential for safe overclocking

- Support for any foreseeable TDP increases

- Energy Efficiency:

- PUE as low as 1.03-1.15 (vs. 1.4-1.8 for air cooling)

- Elimination of all server fans

- Higher facility temperature operation

- Potential for heat recovery and reuse

- 40-60% total energy reduction possible

- Total Cost of Ownership Impact:

- Higher initial capital expenditure

- Significantly lower operational costs

- Extended hardware lifespan

- Increased computational density

- Potential for 20-40% lower 5-year TCO

Ready for the fascinating part? Immersion cooling is enabling entirely new approaches to system design that were previously impossible. With the elimination of traditional cooling constraints, some manufacturers are developing “cooling-native” hardware that abandons conventional form factors and thermal design limitations. These systems can achieve component densities 3-5x higher than traditional designs while simultaneously improving performance through shorter signal paths and more efficient power delivery. This fundamental rethinking of system architecture represents a potential inflection point in computing design, where thermal management becomes an enabler rather than a constraint for system architecture.

Hybrid Approaches for Transitional Deployments

Hybrid cooling strategies combine multiple technologies to optimize performance, efficiency, and implementation complexity.

Problem: No single cooling technology is optimal for all components and deployment scenarios.

Different components within AI systems have varying thermal characteristics, form factors, and cooling requirements that may be better addressed by different cooling technologies.

Aggravation: The heterogeneous nature of modern AI infrastructure creates complex cooling requirements that single-technology approaches struggle to address optimally.

Further complicating matters, AI infrastructure increasingly combines different processor types, accelerators, memory technologies, and storage systems, each with unique thermal characteristics that may benefit from different cooling approaches.

Solution: Hybrid cooling strategies leverage the strengths of multiple technologies to create optimized solutions for complex AI infrastructure:

Targeted Liquid Cooling

Applying liquid cooling selectively to high-heat components:

- Implementation Approaches:

- GPU-only liquid cooling with air for other components

- CPU+GPU liquid cooling with air for supporting systems

- Component-specific cooling plate designs

- Integration with traditional air cooling

- Simplified liquid distribution compared to full liquid cooling

- Advantages and Limitations:

- Reduced implementation complexity vs. full liquid cooling

- Lower cost than comprehensive liquid solutions

- Addresses highest thermal loads directly

- Maintains compatibility with standard components

- Potential for uneven cooling across system

- Ideal Application Scenarios:

- Mixed-density AI infrastructure

- Retrofitting existing infrastructure

- Gradual transition strategies

- Budget-constrained implementations

- Moderate-density deployments

Here’s what makes this fascinating: Targeted liquid cooling often provides 80-90% of the benefits of comprehensive liquid cooling at 50-60% of the implementation cost and complexity. This favorable cost-benefit ratio makes it an increasingly popular approach for organizations transitioning from traditional infrastructure to AI-optimized cooling. The selective application of advanced cooling to only the highest-value, highest-heat components creates an efficient “cooling triage” that maximizes return on cooling investment.

Rear Door Heat Exchangers

Combining traditional air cooling with liquid-based heat capture:

- Operating Principles:

- Standard air-cooled servers and racks

- Water-cooled heat exchanger in rack door

- Hot exhaust air passes through heat exchanger

- Heat captured and removed via liquid

- Cooled air returned to data center

- Implementation Variations:

- Passive (convection-driven) vs. active (fan-assisted)

- Facility water vs. CDU implementations

- Varying cooling capacities (20-75kW per rack)

- Containment integration options

- Retrofit vs. new deployment designs

- Advantages and Limitations:

- Minimal changes to standard IT hardware

- Simplified implementation compared to direct liquid cooling

- Moderate improvement in cooling efficiency

- Limited maximum cooling capacity

- Potential for condensation in some environments

But here’s an interesting phenomenon: The effectiveness of rear door heat exchangers varies significantly with rack power density. At moderate densities (15-25kW per rack), they typically capture 80-90% of heat output. As density increases to 30-40kW, effectiveness often drops to 60-70% due to airflow constraints and heat exchanger capacity limitations. This declining efficiency with increasing density creates a practical ceiling that makes rear door heat exchangers ideal for transitional deployments but potentially insufficient for the highest-density AI clusters.
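The practical consequence of a falling capture rate is the heat that escapes into the room and must still be handled by room-level cooling. The short sketch below computes that residual. The capture fractions are mid-points of the ranges quoted above, and the function name is illustrative.

```python
def residual_heat_kw(rack_kw, capture_fraction):
    """Heat (kW) that passes through the rear door uncaptured and enters the room."""
    return rack_kw * (1.0 - capture_fraction)


# Mid-range capture rates from the text: ~85% at moderate density, ~65% at high density.
for rack_kw, capture in ((20.0, 0.85), (35.0, 0.65)):
    leak = residual_heat_kw(rack_kw, capture)
    print(f"{rack_kw:.0f} kW rack at {capture:.0%} capture -> {leak:.2f} kW into the room")
```

With these inputs, moving from a 20kW to a 35kW rack roughly quadruples the heat left for room cooling, which is the ceiling effect described above.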

Zoned Cooling Approaches

Implementing different cooling technologies in different data center zones:

- Zone-Based Deployment Strategies:

- High-density zones with advanced cooling

- Standard zones with traditional cooling

- Transitional zones with hybrid approaches

- Future expansion zones with flexible infrastructure

- Optimized resource allocation based on workload requirements

- Infrastructure Considerations:

- Separate mechanical systems for different zones

- Unified monitoring and management

- Flexible capacity allocation

- Phased implementation potential

- Operational efficiency optimization

- Workload Placement Optimization:

- Thermal profile-based workload assignment

- Dynamic resource allocation

- Performance requirement matching

- Efficiency optimization

- Utilization balancing

Hybrid Cooling Strategy Comparison

| Strategy | Implementation Complexity | Performance Benefit | Cost Efficiency | Flexibility | Best For |
|---|---|---|---|---|---|
| Targeted Liquid Cooling | Moderate | High | Very High | Good | Optimizing existing infrastructure |
| Rear Door Heat Exchangers | Low | Moderate | High | Very Good | Transitional deployments, mixed density |
| Zoned Cooling Approach | Moderate-High | Very High | High | Excellent | Large-scale, diverse workloads |
| Phased Implementation | Moderate | High | Moderate-High | Very Good | Budget constraints, incremental adoption |
| Technology Mixing | High | Very High | Moderate | Good | Specialized requirements, maximum performance |

Migration and Transition Strategies

Approaches for evolving cooling infrastructure over time:

- Phased Implementation Approaches:

- Pilot deployments and proof of concept

- High-value target identification

- Incremental expansion strategies

- Technology evaluation and validation

- Long-term roadmap development

- Retrofit vs. New Build Considerations:

- Existing facility constraints

- Disruption minimization strategies

- Cost-benefit analysis for different approaches

- Performance improvement potential

- Operational impact management

- Operational Transition Planning:

- Staff training and skill development

- Procedure development and documentation

- Monitoring and management integration

- Maintenance program adaptation

- Emergency response planning

Ready for the fascinating part? The most successful hybrid cooling implementations are now using AI techniques to optimize their own operation. These systems collect thousands of data points across cooling subsystems and use machine learning to predict thermal behavior, optimize resource allocation, and proactively adjust to changing conditions. These “AI-optimized cooling systems” have demonstrated 20-35% efficiency improvements compared to traditional control approaches while simultaneously improving cooling performance and reliability. This represents a fascinating case of AI technology being applied to solve challenges created by AI hardware itself.

Facility Considerations for AI Cooling

The facility infrastructure supporting AI cooling systems is critical to their effectiveness and reliability.

Problem: Advanced cooling technologies for AI create significant facility requirements that many existing data centers cannot support without modification.

The high heat density, liquid distribution requirements, and specialized infrastructure needs of advanced cooling technologies often exceed the capabilities of facilities designed for traditional IT workloads.

Aggravation: Retrofitting existing facilities for advanced cooling can be disruptive, expensive, and sometimes physically impossible due to fundamental constraints.

Further complicating matters, many organizations attempt to deploy advanced AI infrastructure in facilities designed for much lower power densities, creating mismatches between cooling requirements and facility capabilities that limit performance and reliability.

Solution: Understanding facility requirements for different cooling approaches enables more effective infrastructure planning and deployment:

Power Infrastructure Requirements

Supporting the electrical needs of AI cooling:

- Power Density Considerations:

- Traditional data centers: 4-8 kW per rack

- Early AI deployments: 10-20 kW per rack

- Current AI clusters: 20-50 kW per rack

- Leading-edge AI systems: 50-100+ kW per rack

- Power distribution and circuit sizing implications

- Power Quality and Reliability:

- UPS requirements for cooling systems

- Backup power for pumps and circulation

- Power monitoring and quality management

- Fault detection and protection

- Graceful shutdown capabilities

- Power Distribution Architecture:

- Busway vs. traditional power distribution

- Circuit capacity and redundancy

- Phase balancing considerations

- Future expansion accommodation

- Monitoring and metering integration

Here’s what makes this fascinating: The power density of AI infrastructure has increased so dramatically that it’s creating fundamental shifts in data center power architecture. Traditional power distribution approaches using under-floor cabling are often physically incapable of delivering the required power density, driving adoption of overhead busway systems that can support 5-10x higher power density. This architectural shift represents one of the most significant changes in data center design in the past 20 years, driven primarily by the power demands of AI hardware and the cooling systems that support it.
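The circuit sizing implications of these rack densities can be estimated with the standard balanced three-phase relation I = P / (√3 · V · PF). The sketch below is illustrative only: the 415 V line-to-line voltage, the 0.95 power factor, and the 80% continuous-load fraction (a common North American practice) are assumptions, and actual sizing must follow local electrical codes.

```python
import math


def line_current_amps(load_kw: float, line_voltage: float,
                      power_factor: float = 0.95) -> float:
    """Line current of a balanced three-phase load: I = P / (sqrt(3) * V_LL * PF)."""
    return load_kw * 1000.0 / (math.sqrt(3) * line_voltage * power_factor)


def min_breaker_amps(load_kw: float, line_voltage: float,
                     power_factor: float = 0.95,
                     continuous_fraction: float = 0.8) -> float:
    """Smallest breaker rating if continuous load may use only a fraction of it."""
    return line_current_amps(load_kw, line_voltage, power_factor) / continuous_fraction


# Rack densities taken from the ranges listed above; 415 V is an assumption.
for rack_kw in (8, 20, 50, 100):
    amps = line_current_amps(rack_kw, 415.0)
    breaker = min_breaker_amps(rack_kw, 415.0)
    print(f"{rack_kw:>3} kW rack: {amps:6.1f} A per phase, breaker >= {breaker:6.1f} A")
```

Because current scales linearly with rack power, a 100 kW rack draws over ten times the current of an 8 kW rack at the same voltage, which illustrates why conventional whip-based distribution runs out of capacity.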

Mechanical Infrastructure Considerations

Supporting the thermal management needs of AI cooling:

- Heat Rejection Requirements:

- Total thermal load calculation

- Peak vs. average heat rejection needs

- Redundancy and backup considerations

- Seasonal variation planning

- Growth and expansion accommodation

- Liquid Distribution Infrastructure:

- Primary and secondary loop design

- Piping material and sizing

- Pumping and circulation systems

- Filtration and water treatment

- Leak detection and containment

- Environmental Control Systems:

- Temperature setpoints and tolerances

- Humidity management requirements

- Airflow patterns and management

- Contamination and filtration considerations

- Monitoring and control integration

But here’s an interesting phenomenon: The heat density of modern AI clusters is creating opportunities for heat reuse that were previously impractical. While traditional data centers produced relatively low-grade waste heat (30-40°C), liquid-cooled AI clusters can produce much higher-grade heat (50-65°C) that is suitable for practical applications like district heating, domestic hot water, or absorption cooling. This higher-quality waste heat is transforming cooling from a pure cost center to a potential value generator, with some facilities now selling their waste heat to nearby buildings or industrial processes.
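Both liquid loop sizing and heat reuse planning rest on the same heat balance, Q = ṁ · c_p · ΔT. As a rough sketch, assuming plain water as the coolant (c_p ≈ 4.186 kJ/(kg·K)) and a hypothetical 1 MW cluster with a 10 K temperature rise across the loop:

```python
WATER_CP_KJ_PER_KG_K = 4.186  # specific heat of water, approximate


def coolant_flow_kg_s(heat_kw: float, delta_t_k: float,
                      cp: float = WATER_CP_KJ_PER_KG_K) -> float:
    """Mass flow needed to carry heat_kw with a delta_t_k temperature rise.

    Derived from Q = m_dot * cp * dT, with Q in kW (kJ/s).
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_kw / (cp * delta_t_k)


# Hypothetical example: 1 MW of heat, 10 K rise (for instance 45 C in, 55 C out).
flow = coolant_flow_kg_s(1000.0, 10.0)
print(f"required flow: {flow:.1f} kg/s")
```

For water this is roughly the same number in liters per second. A larger allowable temperature rise lowers the required flow and raises the return temperature, which is what makes the recovered heat more useful.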

Structural and Space Requirements

Physical considerations for AI cooling infrastructure:

- Floor Loading Capabilities:

- Traditional IT racks: 1,000-2,000 lbs per rack

- Liquid-cooled AI racks: 3,000-5,000 lbs per rack

- Immersion cooling systems: 8,000-15,000 lbs per tank

- Structural reinforcement considerations

- Distributed vs. concentrated loading

- Space Allocation Requirements:

- Equipment footprint considerations

- Service clearance requirements

- Infrastructure support space

- Future expansion accommodation

- Operational workflow optimization

- Physical Infrastructure Integration:

- Piping routes and access

- Structural penetrations and sealing

- Equipment placement optimization

- Maintenance access planning

- Safety and emergency systems
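A first-pass floor loading screen can be sketched by dividing equipment weight by its footprint and comparing the result with the floor rating. The weights come from the ranges above; the 8 sq ft rack footprint, the 30 sq ft tank footprint, and the 250 psf floor rating are hypothetical placeholders, and a real assessment needs a structural engineer to account for point loads and load spreading.

```python
def floor_load_psf(weight_lbs: float, footprint_sqft: float) -> float:
    """Average distributed load in pounds per square foot."""
    if footprint_sqft <= 0:
        raise ValueError("footprint_sqft must be positive")
    return weight_lbs / footprint_sqft


def exceeds_rating(weight_lbs: float, footprint_sqft: float, rated_psf: float) -> bool:
    """True when the average load is above the floor's rated capacity."""
    return floor_load_psf(weight_lbs, footprint_sqft) > rated_psf


RATED_PSF = 250.0  # hypothetical floor rating

# (label, weight in lbs, assumed footprint in sq ft)
equipment = [
    ("traditional rack", 2000.0, 8.0),
    ("liquid-cooled rack", 4000.0, 8.0),
    ("immersion tank", 12000.0, 30.0),
]
for label, weight, area in equipment:
    psf = floor_load_psf(weight, area)
    flag = "exceeds" if exceeds_rating(weight, area, RATED_PSF) else "within"
    print(f"{label}: {psf:.0f} psf ({flag} {RATED_PSF:.0f} psf rating)")
```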

Facility Requirements by Cooling Technology

| Requirement | Air Cooling | Direct Liquid | Immersion | Hybrid |
|---|---|---|---|---|
| Power Density | 10-25 kW/rack | 20-80 kW/rack | 50-150 kW/rack | 15-50 kW/rack |
| Floor Loading | Standard | 2-3x standard | 4-8x standard | 1.5-3x standard |
| Liquid Infrastructure | Minimal | Extensive | Moderate | Moderate |
| Heat Rejection | Standard | 2-4x capacity | 3-6x capacity | 1.5-3x capacity |
| Space Efficiency | Baseline | 2-3x better | 3-5x better | 1.5-2.5x better |
| Retrofit Complexity | Low | High | Very High | Moderate |
| Future Flexibility | Limited | Good | Excellent | Very Good |

Operational and Management Systems

Supporting the ongoing operation of AI cooling:

- Monitoring and Control Requirements:

- Temperature and flow sensing

- Leak detection systems

- Power monitoring integration

- Environmental condition tracking

- Predictive analytics capabilities

- Management System Integration:

- Building management system (BMS) integration

- Data center infrastructure management (DCIM)

- IT system management coordination

- Alerting and notification systems

- Reporting and analytics capabilities

- Operational Support Infrastructure:

- Maintenance facilities and equipment

- Spare parts storage and management

- Testing and validation capabilities

- Training facilities and resources

- Documentation and procedure management

Ready for the fascinating part? The facility requirements for advanced AI cooling are driving a fundamental rethinking of data center design and construction. Some organizations are now developing purpose-built “AI factories” that abandon traditional data center design principles in favor of architectures optimized specifically for liquid-cooled AI infrastructure. These facilities can achieve 3-5x higher computational density per square foot compared to traditional designs, with 30-50% lower construction costs per unit of computing capacity. This architectural evolution represents one of the most significant shifts in data center design since the introduction of raised floors, driven primarily by the unique requirements of AI cooling.

Economic Analysis of Cooling Technologies

The economic implications of cooling technology selection extend far beyond initial capital costs.

Problem: Organizations often focus primarily on initial capital costs when evaluating cooling technologies, missing the broader economic impact.

The true economic impact of cooling technology selection includes operational costs, performance implications, reliability effects, and scaling considerations that are frequently undervalued in decision-making.

Aggravation: The economic equation for cooling is becoming increasingly complex as AI hardware costs, energy prices, and performance requirements evolve.

Further complicating matters, the rapid evolution of AI capabilities and hardware creates a dynamic economic landscape where the optimal cooling approach may change significantly over a system’s lifetime.

Solution: A comprehensive economic analysis that considers all cost and value factors enables more informed cooling technology decisions:

Capital Expenditure Considerations

Understanding the initial investment requirements:

- Direct Hardware Costs:

- Cooling equipment and components

- Installation and commissioning

- Facility modifications and upgrades

- Supporting infrastructure

- Design and engineering services

- Relative Cost Comparison:

- Air cooling: Baseline cost

- Direct liquid cooling: 2-3x air cooling cost

- Immersion cooling: 3-5x air cooling cost

- Hybrid approaches: 1.5-2.5x air cooling cost

- Cost per watt of cooling capacity

- Density and Space Economics:

- Data center space costs ($1,000-3,000 per square foot)

- Rack space utilization efficiency

- Computational density per square foot

- Infrastructure footprint requirements

- Future expansion considerations

Here’s what makes this fascinating: The capital cost premium of advanced cooling technologies decreases significantly with scale. For small deployments (under 100 GPUs), advanced cooling might carry a 3-4x cost premium over air cooling. For large deployments (1,000+ GPUs), economies of scale typically reduce this premium to 1.5-2x. This “scale effect” means that the economics of cooling technology selection depend heavily on deployment size, with larger deployments more easily justifying advanced approaches.

Operational Expenditure Analysis

Evaluating ongoing costs and operational implications:

- Energy Cost Considerations:

- Direct cooling energy consumption

- Impact on IT equipment efficiency

- PUE implications and facility overhead

- Potential for free cooling or heat reuse

- Total energy cost per computation

- Maintenance and Support Costs:

- Preventative maintenance requirements

- Consumables and replacement parts

- Specialized expertise needs

- Vendor support agreements

- Lifecycle management considerations

- Reliability and Availability Impact:

- Mean time between failures (MTBF)

- Mean time to repair (MTTR)

- Downtime cost implications

- Business continuity considerations

- Risk management and mitigation costs

But here’s an interesting phenomenon: The operational cost differential between cooling technologies varies dramatically based on energy costs and utilization patterns. In regions with low electricity costs ($0.05-0.08/kWh), the operational savings of advanced cooling might take 3-5 years to offset the higher capital costs. In high-cost energy regions ($0.20-0.30/kWh), this payback period can shrink to 1-2 years, fundamentally changing the economic equation. This “energy cost multiplier” means that the optimal cooling selection depends heavily on deployment location and local energy economics.
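The payback relationship described above can be sketched as a simple, undiscounted calculation. The capital premium and annual energy savings below are hypothetical inputs chosen only to illustrate how the electricity price moves the result. They are not figures from any real deployment.

```python
def simple_payback_years(capex_premium: float, annual_kwh_saved: float,
                         price_per_kwh: float) -> float:
    """Years for energy savings to repay the extra capital cost (no discounting)."""
    annual_savings = annual_kwh_saved * price_per_kwh
    if annual_savings <= 0:
        raise ValueError("annual savings must be positive")
    return capex_premium / annual_savings


# Hypothetical deployment: $250,000 premium, 1,000,000 kWh saved per year.
PREMIUM = 250_000.0
KWH_SAVED = 1_000_000.0

for price in (0.06, 0.25):
    years = simple_payback_years(PREMIUM, KWH_SAVED, price)
    print(f"${price:.2f}/kWh -> payback in {years:.1f} years")
```

With these inputs the payback falls from a little over four years at $0.06/kWh to one year at $0.25/kWh, in line with the ranges quoted above. A fuller analysis would also discount future savings and include maintenance differences.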

Performance Economics

Quantifying the value of cooling-enabled performance:

- Thermal Throttling Prevention:

- Performance loss from inadequate cooling (10-30%)

- Computational throughput implications

- Training time and cost impact

- Inference capacity and service level effects

- Value of consistent performance

- Hardware Utilization Efficiency:

- Capital utilization improvement

- Effective cost per computation

- Return on hardware investment

- Depreciation and amortization considerations

- Total cost of ownership impact

- Business Value Considerations:

- Time-to-market advantages

- Research and development velocity

- Service quality and reliability

- Competitive differentiation

- Strategic capability enablement

Economic Impact of Cooling Technology Selection

| Factor | Air Cooling | Direct Liquid | Immersion | Hybrid |
|---|---|---|---|---|
| Initial Capital Cost | $ | $$$ | $$$$ | $$ |
| Energy Cost (3 yr) | $$$ | $ | $ | $$ |
| Maintenance Cost | $$ | $$ | $$$ | $$ |
| Performance Impact | -10 to -30% | Baseline | +0 to +10% | -5 to +5% |
| Density Impact | Baseline | 2-3x better | 3-5x better | 1.5-2.5x better |
| Hardware Lifespan | Baseline | +20 to +40% | +30 to +60% | +10 to +30% |
| 3-Year TCO (Small) | Lowest | Moderate | Highest | Low-Moderate |
| 3-Year TCO (Large) | Moderate | Low | Low-Moderate | Lowest |

Total Cost of Ownership Calculation

Comprehensive economic evaluation framework:

- TCO Component Identification:

- Initial capital expenditure

- Installation and commissioning costs

- Energy costs over system lifetime

- Maintenance and support expenses

- Performance and productivity impact

- Hardware lifespan and replacement costs

- Space and infrastructure costs

- Operational staffing requirements

- Scenario-Based Analysis:

- Scale-dependent economics

- Location-specific considerations

- Workload-specific requirements

- Growth and expansion scenarios

- Technology evolution assumptions

- Strategic Value Assessment:

- Competitive advantage considerations

- Risk mitigation benefits

- Future-proofing value

- Organizational capability development

- Strategic alignment evaluation
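A stripped-down version of this framework can be sketched as follows. It covers only three of the components listed above (capital, energy, maintenance) plus a throughput factor for thermal throttling. The `CoolingOption` class and every number in the example are hypothetical, expressed in arbitrary cost units.

```python
from dataclasses import dataclass


@dataclass
class CoolingOption:
    name: str
    capex: float                # installed cooling capital cost
    annual_energy: float        # yearly cooling-related energy cost
    annual_maintenance: float   # yearly maintenance and support cost
    relative_throughput: float  # delivered compute vs. an unthrottled system


def tco(option: CoolingOption, years: int) -> float:
    """Undiscounted total cost of ownership over the given horizon."""
    return option.capex + years * (option.annual_energy + option.annual_maintenance)


def tco_per_throughput(option: CoolingOption, years: int) -> float:
    """TCO normalized by delivered compute, so throttling shows up as cost."""
    return tco(option, years) / option.relative_throughput


# Hypothetical inputs; air cooling is assumed to lose 20% to throttling.
air = CoolingOption("air", capex=1.0, annual_energy=0.6,
                    annual_maintenance=0.1, relative_throughput=0.8)
liquid = CoolingOption("direct liquid", capex=2.5, annual_energy=0.25,
                       annual_maintenance=0.1, relative_throughput=1.0)

for option in (air, liquid):
    print(f"{option.name}: 3-year TCO {tco(option, 3):.2f}, "
          f"per unit of throughput {tco_per_throughput(option, 3):.2f}")
```

In this made-up example air cooling has the lower raw three-year TCO, but liquid cooling is cheaper per unit of delivered compute, which is the kind of reversal that a capital-cost-only comparison misses.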

Ready for the fascinating part? The most sophisticated organizations are implementing “cooling portfolio strategies” rather than standardizing on a single approach. By deploying different cooling technologies for different workloads and deployment scenarios, these organizations optimize both performance and economics across their AI infrastructure. Some have found that a carefully balanced portfolio approach can improve overall price-performance by 20-40% compared to homogeneous deployments, while simultaneously providing greater flexibility to adapt to evolving requirements. This portfolio approach represents a fundamental shift from viewing cooling as a standardized infrastructure component to treating it as a strategic resource that should be optimized for specific use cases.

Future Trends in AI Data Center Cooling

The landscape of data center cooling for AI continues to evolve rapidly, with several emerging trends poised to reshape thermal management approaches.

Problem: Current cooling technologies may struggle to address the thermal challenges of next-generation AI accelerators and deployment models.

As GPU power consumption potentially exceeds 1000W per device and deployment densities continue to increase, even current advanced cooling technologies will face significant challenges.

Aggravation: The pace of innovation in AI hardware is outstripping the evolution of cooling technologies, creating a growing gap between thermal requirements and cooling capabilities.

Further complicating matters, the rapid advancement of AI capabilities is driving accelerated hardware development cycles, creating a situation where cooling technology must evolve more quickly to keep pace with thermal management needs.

Solution: Understanding emerging trends in data center cooling enables more future-proof infrastructure planning and technology selection:

Emerging Cooling Technologies

Innovative approaches expanding cooling capabilities:

- Two-Phase Cooling Advancements:

- Direct-to-chip two-phase cooling

- Flow boiling implementations

- Refrigerant-based systems

- Enhanced phase change materials

- Compact two-phase solutions

- Microfluidic Cooling:

- On-package fluid channels

- 3D-printed cooling structures

- Integrated manifold designs

- Targeted hotspot cooling

- Reduced fluid volume systems

- Solid-State Cooling:

- Thermoelectric cooling applications

- Magnetocaloric cooling research

- Electrocaloric material development

- Solid-state heat pumps

- Hybrid solid-state/liquid approaches

Here’s what makes this fascinating: The cooling technology innovation cycle is accelerating dramatically. Historically, major cooling technology transitions (air to liquid, liquid to immersion) occurred over 7-10 year periods. Current development trajectories suggest the next major transition (potentially to integrated microfluidic or advanced two-phase technologies) may occur within 3-5 years. This compressed innovation cycle is being driven by the economic value of AI computation, which creates unprecedented incentives for solving thermal limitations that constrain AI performance.

Integration and Architectural Trends

Evolving relationships between computing hardware and cooling systems:

- Co-Designed Computing and Cooling:

- Cooling requirements influencing chip design

- Purpose-built cooling for specific accelerators

- Standardized cooling interfaces

- Cooling-aware chip packaging

- Unified thermal-computational optimization

- Disaggregated and Composable Systems:

- Cooling implications of disaggregated architecture

- Liquid cooling for interconnect infrastructure

- Dynamic resource composition considerations

- Cooling for memory-centric architectures

- Heterogeneous system cooling requirements

- Specialized AI Hardware Cooling:

- Neuromorphic computing thermal characteristics

- Photonic computing cooling requirements

- Quantum computing thermal management

- Analog AI accelerator cooling

- In-memory computing thermal considerations

But here’s an interesting phenomenon: The boundary between computing hardware and cooling systems is increasingly blurring. Next-generation designs are exploring “cooling-defined architecture” where thermal management is a primary design constraint rather than an afterthought. Some research systems are even exploring “thermally-aware computing” where workloads dynamically adapt to thermal conditions, creating a bidirectional relationship between computation and cooling that fundamentally changes both hardware design and software execution models.

Sustainability and Efficiency Focus

Environmental considerations increasingly shaping cooling innovation:

- Energy Efficiency Innovations:

- AI-optimized cooling control systems

- Dynamic cooling resource allocation

- Workload scheduling for thermal optimization

- Seasonal and weather-adaptive operation

- Cooling energy recovery techniques

- Heat Reuse Technologies:

- Data center waste heat utilization

- District heating integration

- Industrial process heat applications

- Absorption cooling for facility air conditioning

- Power generation from waste heat

- Water Conservation Approaches:

- Closed-loop cooling designs

- Air-side economization optimization

- Alternative heat rejection methods

- Rainwater harvesting integration

- Wastewater recycling for cooling

Future AI Cooling Technology Outlook

| Technology | Current Status | Potential Impact | Commercialization Timeline | Adoption Drivers |
|---|---|---|---|---|
| Advanced Two-Phase | Early commercial | Very High | 1-3 years | Extreme density, efficiency |
| Microfluidic Cooling | Advanced R&D | Transformative | 3-5 years | Integration, performance |
| Solid-State Cooling | Research | Moderate | 5-7+ years | Reliability, specialized applications |
| AI-Optimized Control | Early commercial | High | 1-2 years | Efficiency, performance stability |
| Heat Reuse Systems | Growing adoption | Moderate-High | 1-3 years | Sustainability, economics |
| Integrated Cooling | Advanced R&D | Very High | 3-5 years | Performance, density, efficiency |

Industry Evolution and Standards

Broader trends reshaping the cooling technology landscape:

- Vendor Ecosystem Development:

- Consolidation among cooling providers

- Computing OEM cooling technology acquisition

- Specialized AI cooling startups

- Strategic partnerships and alliances

- Intellectual property landscape evolution

- Standards and Interoperability:

- Cooling interface standardization efforts

- Performance measurement standardization

- Safety and compliance framework development

- Sustainability certification programs

- Industry consortium initiatives

- Service-Based Models:

- Cooling-as-a-Service offerings

- Performance-based contracting

- Managed cooling services

- Integrated IT/cooling management

- Risk-sharing business models

Ready for the fascinating part? The economic value of cooling innovation is creating unprecedented investment in thermal management technology. Venture capital investment in advanced cooling technologies has increased by 300-400% in the past three years, with particular focus on AI-specific cooling solutions. This investment surge is accelerating the pace of innovation and commercialization, potentially compressing technology adoption cycles that previously took 5-7 years into 2-3 year timeframes. The result is likely to be a period of rapid evolution in cooling technology, creating both opportunities and challenges for organizations deploying AI infrastructure.

Frequently Asked Questions

Q1: How do I determine which cooling technology is most appropriate for my specific AI infrastructure requirements?

Selecting the optimal cooling technology requires a systematic evaluation process:

- Assess your thermal requirements: calculate the total heat load based on GPU type, quantity, and utilization patterns, with particular attention to peak power scenarios. For deployments using high-TDP GPUs (400W+) in dense configurations, advanced cooling is typically essential, while more moderate deployments maintain flexibility.

- Evaluate your facility constraints: existing cooling infrastructure, available space, floor loading capacity, and facility water availability may limit your options or require significant modifications for certain technologies.

- Consider your operational model: different cooling technologies require varying levels of expertise, maintenance, and management overhead that must align with your operational capabilities.

- Analyze your scaling trajectory: future expansion plans may justify investing in more advanced cooling initially to avoid disruptive upgrades later.

- Calculate comprehensive economics: beyond initial capital costs, include energy expenses, maintenance requirements, density benefits, and performance advantages in your analysis.

The most effective approach often involves a formal decision matrix that weights these factors according to your specific priorities. Many organizations find that hybrid approaches offer an optimal balance for initial deployments, with targeted liquid cooling for GPUs combined with traditional cooling for other components. This approach delivers most of the performance benefits of advanced cooling with reduced implementation complexity, while providing a pathway to more comprehensive solutions as density increases.
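The decision matrix mentioned above can be sketched as a weighted average over the five factors. The weights and the 1-5 scores below are hypothetical placeholders. Each organization would substitute its own priorities and assessments.

```python
def weighted_scores(weights: dict[str, float],
                    scores: dict[str, dict[str, float]]) -> dict[str, float]:
    """Weighted average score per option, normalized by the total weight."""
    total_weight = sum(weights.values())
    return {
        option: sum(weights[c] * option_scores[c] for c in weights) / total_weight
        for option, option_scores in scores.items()
    }


# Hypothetical weights (relative importance) for the five factors.
weights = {"thermal": 30, "facility": 20, "operations": 15,
           "scaling": 15, "economics": 20}

# Hypothetical scores on a 1-5 scale (5 = best fit).
scores = {
    "air": {"thermal": 2, "facility": 5, "operations": 5,
            "scaling": 2, "economics": 3},
    "hybrid": {"thermal": 4, "facility": 4, "operations": 4,
               "scaling": 4, "economics": 4},
    "immersion": {"thermal": 5, "facility": 2, "operations": 2,
                  "scaling": 5, "economics": 3},
}

ranked = weighted_scores(weights, scores)
best = max(ranked, key=ranked.get)
for option, score in sorted(ranked.items(), key=lambda kv: -kv[1]):
    print(f"{option}: {score:.2f}")
print("highest score:", best)
```

The value of the exercise lies less in the final number than in forcing the weights to be stated explicitly, so that disagreements about priorities surface before a technology is chosen.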

Q2: What are the most important considerations when retrofitting an existing data center for high-density AI cooling?

Retrofitting existing data centers for high-density AI cooling presents several critical challenges:

- Assess structural capacity: floor loading limits may be insufficient for liquid cooling infrastructure (3,000-5,000 lbs per rack) or immersion systems (8,000-15,000 lbs per tank), potentially requiring structural reinforcement or strategic placement over support columns.

- Evaluate power infrastructure: existing power distribution may be inadequate for AI densities of 20-80kW per rack, often requiring significant upgrades to PDUs, busways, and upstream electrical systems.

- Analyze mechanical capacity: heat rejection systems designed for 4-8kW per rack may need 5-10x greater capacity for AI workloads, potentially requiring additional chillers, cooling towers, or alternative approaches.

- Consider space constraints: advanced cooling often requires additional infrastructure space for pumps, heat exchangers, and distribution systems that may not have been anticipated in the original design.

- Plan for operational continuity: retrofitting active data centers requires careful phasing to minimize disruption to existing workloads.

The most successful retrofits typically implement a zoned approach, creating dedicated high-density areas with appropriate cooling rather than attempting facility-wide conversion. This targeted strategy allows organizations to optimize investment for specific AI workloads while maintaining existing infrastructure for less demanding applications. For many facilities, hybrid approaches like rear door heat exchangers or targeted liquid cooling offer the best balance of performance improvement and implementation feasibility, providing 60-80% of the benefits of comprehensive solutions with significantly reduced facility impact.

Q3: How does the choice of cooling technology affect the overall reliability and lifespan of GPU hardware?

The choice of cooling technology significantly impacts GPU reliability and lifespan through several mechanisms:

- Operating temperature directly affects failure rates: a widely used rule of thumb, derived from the Arrhenius model, holds that every 10°C increase in operating temperature approximately doubles semiconductor failure rates. Advanced cooling technologies that maintain lower operating temperatures can potentially reduce failures by 50-75% compared to borderline cooling.

- Temperature stability matters as much as absolute temperature: thermal cycling creates mechanical stress through expansion and contraction, particularly affecting solder joints, interconnects, and packaging materials. Technologies that maintain more consistent temperatures (typically liquid and immersion) can reduce these stresses by 60-80% compared to air cooling with its more variable thermal profile.

- Temperature gradients across components create differential expansion and localized stress: advanced cooling typically provides more uniform temperatures, reducing these gradients by 40-60%.

- Humidity and condensation risks vary by cooling approach: properly implemented liquid cooling with appropriate dew point management can reduce humidity-related risks compared to air cooling in variable environments.

The economic implications are substantial. For high-value AI accelerators costing $10,000-40,000 each, extending lifespan from 3 years to 4-5 years through superior cooling can create $3,000-15,000 in value per GPU. Additionally, reduced failure rates directly impact operational costs through lower replacement expenses, decreased downtime, and reduced service requirements. For large deployments, these reliability benefits often exceed the direct energy savings from efficient cooling, fundamentally changing the ROI calculation for cooling investments.
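The 10°C doubling relationship can be written as a relative failure rate of 2^(ΔT/10). The short sketch below applies it to show where a 50-75% reduction would come from. It is a rule-of-thumb approximation only, and real failure behavior depends on the specific failure mechanisms involved.

```python
def relative_failure_rate(delta_t_c: float) -> float:
    """Failure rate relative to baseline after a temperature change of delta_t_c.

    Uses the rule of thumb that the rate doubles for every 10 C increase.
    """
    return 2.0 ** (delta_t_c / 10.0)


def failure_reduction_pct(cooler_by_c: float) -> float:
    """Percentage reduction in failure rate when running cooler_by_c degrees cooler."""
    return (1.0 - relative_failure_rate(-cooler_by_c)) * 100.0


for cooler_by in (10.0, 20.0):
    print(f"{cooler_by:.0f} C cooler -> {failure_reduction_pct(cooler_by):.0f}% fewer failures")
```

Under this approximation, running 10°C cooler halves the failure rate and running 20°C cooler cuts it by 75%, which matches the 50-75% range quoted above.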

Q4: What are the most common implementation challenges with liquid cooling for AI, and how can they be mitigated?

The most common implementation challenges with liquid cooling for AI, and their mitigation strategies:

- Facility integration issues: many existing facilities lack appropriate water infrastructure, requiring significant modifications. Mitigate this through careful planning, phased implementation, and potentially using CDUs with closed-loop systems that minimize facility impact.

- Operational expertise gaps: many IT teams lack experience with liquid cooling technologies. Address this through comprehensive training programs, detailed documentation, and potentially managed services during the transition period.

- Hardware compatibility concerns: not all servers and components are designed for liquid cooling. Mitigate by working closely with vendors to ensure compatibility, potentially standardizing on liquid-cooling-ready hardware platforms, and implementing thorough testing protocols.

- Leak risks and concerns: fear of liquid near electronics remains a significant adoption barrier. Address through high-quality components, proper installation validation, comprehensive leak detection, regular preventative maintenance, and appropriate insurance coverage.

- Implementation complexity: liquid cooling involves more components and interdependencies than air cooling. Manage this through detailed project planning, experienced implementation partners, thorough commissioning processes, and comprehensive documentation.

- Operational transition challenges: procedures developed for air-cooled environments may not translate directly. Develop new standard operating procedures, emergency response protocols, and maintenance schedules specifically for liquid-cooled infrastructure.

Organizations that successfully navigate these challenges typically take a methodical, phased approach that includes pilot deployments, staff training, and gradual expansion, rather than attempting wholesale conversion. This measured strategy allows teams to develop expertise and confidence while minimizing risk to production environments.

Q5: How should organizations plan for the cooling requirements of future GPU generations with potentially higher TDP?

Planning for future GPU cooling requirements requires a forward-looking strategy:

- Implement modular and scalable cooling infrastructure: design systems with standardized interfaces and the ability to incrementally upgrade capacity without complete replacement. This approach provides flexibility to adapt as requirements evolve.

- Build in substantial headroom: when designing new infrastructure, plan for at least 1.5-2x current maximum TDP to accommodate future generations. For organizations on aggressive AI adoption paths, 2.5-3x headroom may be appropriate.

- Establish a technology roadmap with clear transition points: develop explicit plans for how cooling will evolve through multiple hardware generations, including trigger points for technology transitions based on density, performance, and efficiency requirements.

- Create cooling zones with varying capabilities: designate specific areas for highest-density deployment with premium cooling, allowing targeted infrastructure investment where most needed.

- Develop internal expertise proactively: build knowledge and capabilities around advanced cooling technologies before they become critical requirements.

The most forward-thinking organizations are implementing “cooling as a service” approaches internally, where cooling is treated as a dynamic, upgradable resource rather than fixed infrastructure. This approach typically involves standardized interfaces between computing hardware and cooling systems, modular components that can be incrementally upgraded, and sophisticated management systems that optimize across multiple cooling technologies. This flexible, service-oriented approach to cooling infrastructure provides the greatest adaptability to the rapidly evolving AI hardware landscape, allowing organizations to incorporate new cooling technologies as they emerge without requiring complete system replacements.
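The headroom guidance above translates into a simple design calculation. In this sketch the 700 W TDP matches the current-generation figure cited earlier in the article, while the 32 GPUs per rack is a hypothetical configuration. Heat from CPUs, memory, networking, and power conversion is deliberately ignored, so real design loads would be higher.

```python
def design_rack_kw(gpu_tdp_w: float, gpus_per_rack: int, headroom: float) -> float:
    """GPU heat load per rack (kW) to design for, given a headroom multiplier."""
    if headroom < 1.0:
        raise ValueError("headroom should be at least 1.0")
    return gpu_tdp_w * gpus_per_rack * headroom / 1000.0


CURRENT_TDP_W = 700.0  # current-generation accelerator TDP cited in the article
GPUS_PER_RACK = 32     # hypothetical rack configuration

for headroom in (1.0, 1.5, 2.0, 3.0):
    kw = design_rack_kw(CURRENT_TDP_W, GPUS_PER_RACK, headroom)
    print(f"{headroom:.1f}x headroom -> design for {kw:.1f} kW of GPU heat per rack")
```

Even at 2x headroom this hypothetical rack moves from about 22 kW to about 45 kW of GPU heat alone, which is enough to shift it from one cooling technology class to another.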