Introduction

With the rapid development of artificial intelligence technology, the energy consumption of data centers has become increasingly prominent, with cooling systems accounting for as much as 30-40% of it. Against the backdrop of climate change and rising energy costs, improving the energy efficiency of AI cooling systems has become key to the sustainable development of data centers. This article explores the relationship between AI cooling and energy efficiency in depth, and how data centers can implement sustainable cooling strategies.

1. The impact of AI computing on data center energy consumption

The rapid development of artificial intelligence is significantly changing the energy consumption pattern of data centers, creating unprecedented energy challenges and opportunities.

The problem: AI workloads are driving up data center energy consumption exponentially.

Picture this: training a large language model like GPT-4 can consume millions of kilowatt-hours of electricity, comparable to the annual electricity use of hundreds of US homes. With the compute used to train the largest AI models doubling every few months, this energy demand is climbing fast.

Here’s the kicker: AI computing not only increases overall energy consumption, it also changes the pattern of energy consumption. While server utilization for traditional workloads is typically between 15-30%, AI training clusters can stay at nearly 100% utilization for weeks, generating sustained high heat loads.

Exacerbation: Cooling these high-density AI clusters can consume as much energy as the compute itself.

More worryingly, as single-rack power increases from traditional 5-10kW to 30-50kW or even higher for AI workloads, the efficiency of traditional cooling systems drops dramatically. In some cases, the energy consumption of cooling AI clusters can approach or even exceed the energy consumption of the compute itself.

According to recent estimates, data centers currently consume about 1-2% of global electricity, but with the rapid growth of AI this share may rise to 4-5% by 2030, with cooling energy consumption among the main contributors.

Solution: Improving the energy efficiency of AI cooling systems has become the key to the sustainable development of data centers.

Energy Consumption Characteristics of AI Workloads

AI workloads differ from traditional data center workloads in several key ways that directly impact energy consumption and cooling requirements:

- High Power Density:

- Modern AI accelerators (such as the NVIDIA A100 and H100 or the AMD MI300) have TDPs of roughly 400-750 watts

- A single server may contain 8 or more GPUs with a total power of more than 5kW

- Rack density may reach 30-80kW, much higher than traditional 5-10kW racks

- Sustained High Load:

- AI training typically runs GPUs at nearly 100% utilization

- Training sessions may last for days, weeks, or even months

- No low-load periods to allow cooling systems to “rest”

- Concentrated Deployment:

- AI clusters are often concentrated in specific areas of the data center

- Creating a “heat island” effect that challenges overall cooling strategies

- Specialized high-density cooling solutions are needed

Here is a key point: These characteristics of AI workloads not only increase total energy consumption but also change how that consumption is distributed in time and space. Traditional data center design assumes a load that is spread fairly evenly across the floor and fluctuates considerably over time, whereas AI clusters create continuous high-load hotspots, which calls for a fundamentally different cooling approach.

Data Center Energy Consumption Metrics

Understanding several key metrics is critical to assessing and optimizing data center cooling energy efficiency:

- Power Usage Effectiveness (PUE):

- Definition: Total facility energy consumption ÷ IT equipment energy consumption

- Traditional data center: 1.5-2.0

- High-efficiency data center: 1.1-1.3

- Theoretical minimum: 1.0 (all energy used for computing)

- Carbon Usage Effectiveness (CUE):

- Definition: Total carbon emissions ÷ IT equipment energy consumption

- Accounts for the carbon intensity of the energy sources

- Renewable energy use reduces CUE

- Subject to increasing regulatory attention

- Water Usage Effectiveness (WUE):

- Definition: Annual water consumption ÷ IT equipment energy consumption

- Traditional cooling tower: 5-8 L/kWh

- High-efficiency system: <1 L/kWh

- Closed-loop system can be close to zero
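To make the three definitions concrete, here is a minimal Python sketch that computes PUE, CUE and WUE from annual meter readings. The readings, the 0.4 kg CO₂/kWh grid carbon factor and the function names are hypothetical values chosen for illustration, and the CUE calculation assumes all facility energy comes from one grid with a single carbon factor.

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    return total_facility_kwh / it_kwh


def cue(total_co2_kg: float, it_kwh: float) -> float:
    """Carbon Usage Effectiveness: total CO2 emissions (kg) / IT energy (kWh)."""
    return total_co2_kg / it_kwh


def wue(annual_water_l: float, it_kwh: float) -> float:
    """Water Usage Effectiveness: annual site water use (L) / IT energy (kWh)."""
    return annual_water_l / it_kwh


# Hypothetical annual readings (illustrative only, not from a real site).
it_energy_kwh = 8_000_000.0
facility_energy_kwh = 10_400_000.0
grid_kg_co2_per_kwh = 0.4      # assumed carbon factor of the supplying grid
site_water_l = 12_000_000.0

print(f"PUE = {pue(facility_energy_kwh, it_energy_kwh):.2f}")
print(f"CUE = {cue(facility_energy_kwh * grid_kg_co2_per_kwh, it_energy_kwh):.2f} kg CO2/kWh")
print(f"WUE = {wue(site_water_l, it_energy_kwh):.2f} L/kWh")
```

With these inputs the facility has a PUE of 1.3. Because all energy is assumed to come from one grid, CUE works out to the grid carbon factor multiplied by PUE (0.4 × 1.3 = 0.52), which shows why both better cooling efficiency and cleaner energy sources lower CUE.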

Comparison of AI data center and traditional data center energy consumption

| Features | Traditional data center | AI data center | Impact |
|---|---|---|---|
| Rack power density | 5-10 kW | 30-80 kW | Increased burden on cooling systems |
| Server utilization | 15-30% | 70-100% | Sustained high heat load |
| PUE | 1.5-2.0 | 1.1-1.4* | Cooling efficiency is more important |
| Cooling as a percentage of total energy consumption | 30-40% | 20-35%* | High potential for cooling optimization |
| Annual growth rate | 5-10% | 25-35% | Intensified sustainability challenges |

*Note: with efficient cooling technology

Environmental impact of AI energy consumption

The rapid growth of AI computing energy consumption raises important environmental concerns:

- Carbon footprint:

- Global data centers emit about 200 million tons of CO₂ per year

- The carbon footprint of training a single large AI model may reach hundreds of tons of CO₂

- Cooling systems account for 30-40% of data center emissions

- Water consumption:

- Traditional cooling towers can use millions of gallons of water per year

- Water-scarce regions face special challenges

- Climate change exacerbates water stress

- Grid impact:

- Large AI clusters may require 10-100MW of power

- Significant burden on local grids

- Dedicated power infrastructure may be required

But here’s the interesting thing: while total AI energy consumption is increasing, the energy consumption and environmental impact per unit of computing are decreasing. Hardware efficiency improvements, algorithm optimizations, and cooling technology innovations are working together to reduce AI’s environmental footprint. For example, the latest GPUs offer 2-3 times better performance per watt than previous generations, and advanced cooling technology can reduce PUE from 2.0 to nearly 1.1, cutting total facility energy consumption by 45% for the same IT load.
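The 45% figure follows directly from the PUE definition: at a constant IT load, total facility energy scales with PUE, so moving from 2.0 to 1.1 leaves 1.1 / 2.0 = 55% of the original consumption. The sketch below checks that arithmetic and then, assuming a newer GPU delivers 2.5 times the work per watt (the midpoint of the 2-3x range above, an illustrative choice), shows how the hardware and cooling improvements multiply when measured per unit of computing.

```python
def facility_energy_kwh(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy implied by an IT energy figure and a PUE."""
    return it_energy_kwh * pue


it_kwh = 1_000_000.0  # same IT workload before and after the cooling upgrade
before = facility_energy_kwh(it_kwh, 2.0)
after = facility_energy_kwh(it_kwh, 1.1)
reduction = 1 - after / before
print(f"Facility energy reduction from PUE 2.0 -> 1.1: {reduction:.0%}")

# Assumed hardware gain: 2.5x work per watt, so the same job needs 1/2.5 of
# the IT energy; the PUE improvement then applies on top of that.
perf_per_watt_gain = 2.5
energy_per_job = (1 / perf_per_watt_gain) * (1.1 / 2.0)
print(f"Energy per unit of computing: {energy_per_job:.0%} of the old baseline")
```

Under these assumptions the same job needs about 22% of the original facility energy, which is how per-computation impact can fall even while total AI energy use keeps rising.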

2. Energy efficiency challenges of traditional cooling methods

Traditional data center cooling methods have shown significant energy efficiency challenges when facing high-density AI workloads, and these limitations are becoming a bottleneck for sustainable AI development.

Problem: The energy efficiency of traditional air cooling systems drops sharply as power density increases.

When planning AI infrastructure, many organizations underestimate the efficiency drop of traditional cooling methods in high-density environments. When rack power exceeds about 15kW, the energy efficiency of traditional air cooling systems begins to deteriorate sharply.

Exacerbation: Cooling system energy consumption can grow faster than computing energy consumption itself.

More worryingly, fan power in air cooling systems grows with the cube of airflow. This means that when the required airflow doubles, fan power can increase eightfold. In high-density AI clusters, this nonlinear relationship can drive cooling energy consumption out of control.
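The cubic relationship comes from the fan affinity laws: for a given fan and duct system, airflow scales with fan speed and power scales with the cube of speed. The sketch below applies that idealized law to a hypothetical 10 kW fan array; real systems deviate from it as the system curve and the motor and drive efficiencies change.

```python
def fan_power_kw(base_power_kw: float, airflow_ratio: float) -> float:
    """Idealized fan affinity law: same fan and duct system, so airflow is
    proportional to speed and power to speed cubed."""
    return base_power_kw * airflow_ratio ** 3


base_kw = 10.0  # hypothetical fan array power at today's airflow
for ratio in (0.8, 1.0, 1.5, 2.0):
    print(f"airflow x{ratio:.1f} -> fan power {fan_power_kw(base_kw, ratio):6.2f} kW")
```

The same law works in reverse: running fans at 80% airflow cuts their power to about 51% of the original, which is why variable-speed fan control pays off.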

Solution: Understand the energy efficiency challenges of traditional cooling methods and prepare for more sustainable alternatives:

Energy efficiency limitations of traditional air cooling systems

Traditional air cooling systems face multiple energy efficiency challenges in high-density environments:

- Cubic growth in fan energy consumption:

- Fan power is proportional to the cube of airflow

- High-density environments require higher airflow

- Server and CRAC fan energy consumption may account for 10-20% of total energy consumption

- High cooling energy consumption:

- Compressors are the main source of energy consumption

- Refrigeration efficiency decreases as the temperature lift between evaporator and condenser increases

- Traditional system COP (coefficient of performance) is typically 2.5-4.0

- Airflow management challenges:

- Hot air recirculation reduces efficiency

- Uneven airflow distribution leads to overcooling

- Bypass airflow wastes cooling capacity
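The COP figure translates directly into compressor power: COP is heat removed divided by electrical input, so input power equals heat load divided by COP. The minimal sketch below uses an assumed 1 MW heat load and the 2.5-4.0 COP range quoted above.

```python
def compressor_power_kw(heat_load_kw: float, cop: float) -> float:
    """Electrical input a chiller needs to remove heat_load_kw, using
    COP = heat removed / electrical power input."""
    if cop <= 0:
        raise ValueError("COP must be positive")
    return heat_load_kw / cop


heat_kw = 1000.0  # assumed 1 MW of IT heat
for cop in (2.5, 3.0, 4.0):
    print(f"COP {cop}: {compressor_power_kw(heat_kw, cop):.0f} kW of compressor power")
```

Removing 1 MW of heat therefore takes roughly 250-400 kW of compressor power under these assumptions, which is why compressors dominate the cooling energy breakdown.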

This is where things get interesting: In high-density AI environments, the energy efficiency challenges of traditional cooling systems are not just a cost issue, but also become a practical expansion limitation. For example, a 1MW traditional data center might only support about 100 high-end AI servers, while an equivalent facility with efficient cooling technology might support 200-300, significantly improving computing density and overall efficiency.

Energy consumption of cooling system

In traditional data centers, the cooling system is the largest energy consumer after the IT equipment itself:

- Compressor energy consumption:

- Accounts for 50-60% of total cooling energy consumption

- Efficiency is affected by the temperature difference between condenser and evaporator

- Partial load efficiency is usually low

- Cooling tower/condenser:

- Accounts for 10-20% of total cooling energy consumption

- Pump and fan energy consumption is significant

- Water treatment and maintenance increase operating costs

- Chilled water system:

- Pump energy consumption accounts for 15-25% of total cooling energy consumption

- Pipe and valve pressure loss increases energy consumption

- Designs with a small supply/return temperature difference (low ΔT) require higher flow rates, increasing pumping energy consumption
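The effect of a low supply/return temperature difference follows from the heat balance Q = ṁ · c_p · ΔT: halving ΔT doubles the water flow needed for the same heat load. The sketch below computes the flow for an assumed 1 MW load at ΔT of 5 K and 10 K, then applies the idealized pump affinity law (power proportional to flow cubed on an unchanged system curve). Real pumping systems with static head or minimum-pressure control save less than this idealization suggests.

```python
CP_WATER_KJ_PER_KG_K = 4.186  # specific heat of water near room temperature


def mass_flow_kg_s(heat_kw: float, delta_t_k: float) -> float:
    """Water flow needed to carry heat_kw at a given supply/return
    temperature difference, from Q = m_dot * cp * dT."""
    return heat_kw / (CP_WATER_KJ_PER_KG_K * delta_t_k)


heat_kw = 1000.0                      # assumed cooling load
low = mass_flow_kg_s(heat_kw, 5.0)    # low-delta-T design
high = mass_flow_kg_s(heat_kw, 10.0)  # higher-delta-T design
# Idealized pump affinity law on an unchanged system curve: power ~ flow**3.
pump_ratio = (high / low) ** 3
print(f"dT = 5 K: {low:.1f} kg/s, dT = 10 K: {high:.1f} kg/s")
print(f"Pump power at dT = 10 K: about {pump_ratio:.1%} of the dT = 5 K case")
```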

Distribution of energy consumption of traditional data center cooling system

| Component | Share of cooling energy | Efficiency challenge | Problems in AI environment |
|---|---|---|---|
| Compressor | 50-60% | Low efficiency at partial load | Continuous high load, few optimization opportunities |
| CRAC/CRAH fans | 15-25% | Fan law (cubic relationship) | High airflow demand leads to a surge in energy consumption |
| Cooling tower/condenser | 10-20% | Ambient temperature dependence | Concentrated heat discharge affects efficiency |
| Chilled water/condenser water pumps | 15-25% | Flow and pressure loss | High density requires higher flow |
| Server fans | 5-10% | High internal thermal resistance | High-power chips require high speed |

Relationship between temperature set point and energy efficiency

Data center temperature set point has a significant impact on energy efficiency, but traditional practices are often too conservative:

- The impact of conservative temperature settings:

- Traditional set point: 18-20°C supply air temperature

- Every 1°C increase in supply air temperature can reduce cooling energy consumption by about 2-4%

- Setting temperatures lower than necessary wastes energy

- ASHRAE recommended range:

- Recommended range: 18-27°C

- Allowable range: 15-32°C

- Modern IT equipment can safely operate at higher temperatures

- Temperature considerations for AI hardware:

- GPUs are typically designed to operate in 0-35°C environments

- Temperature affects performance and reliability

- Need to balance energy efficiency and computing performance
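The 2-4% per °C rule of thumb can be turned into a rough estimate of the savings from a set-point change. The sketch below assumes each degree saves a fixed fraction of the remaining cooling energy (a compounding assumption, not a physical model) and applies it to a hypothetical move from 18°C to 24°C supply air. It deliberately ignores GPU throttling, which the following paragraph discusses.

```python
def setpoint_savings(delta_c: float, saving_per_degree: float) -> float:
    """Estimated fraction of cooling energy saved by raising the supply air
    temperature by delta_c degrees, assuming each degree saves a fixed
    fraction of the cooling energy that remains (a rule-of-thumb model)."""
    return 1 - (1 - saving_per_degree) ** delta_c


# Hypothetical change: supply air raised from 18 C to 24 C.
for rate in (0.02, 0.03, 0.04):
    print(f"{rate:.0%} per C over 6 C -> {setpoint_savings(6, rate):.1%} less cooling energy")
```

Across the 2-4% range this gives roughly 11-22% lower cooling energy. Treat that as an upper bound to weigh against any performance loss at higher inlet temperatures.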

But here’s an interesting phenomenon: although raising the temperature set point saves energy, this strategy can be counterproductive for AI workloads. High-performance GPUs may begin thermal throttling earlier at higher ambient temperatures, reducing computing performance. Some analyses suggest that AI clusters may achieve their best overall energy efficiency at lower temperature set points, because the gain in computing performance outweighs the extra cooling energy. This “performance-first” cooling strategy calls for more efficient cooling technology rather than simply raising the temperature set point.

Carbon Footprint of Traditional Cooling Methods

Traditional cooling methods are not only energy inefficient, but can also have a significant carbon footprint:

- Direct emissions:

- Refrigerant leaks (high global warming potential)

- Backup generator testing and use

- On-site fuel use

- Indirect emissions:

- Carbon emissions associated with electricity consumption

- Water treatment and transportation

- Equipment manufacturing and replacement

- Life cycle impacts:

- Carbon footprint of equipment manufacturing

- High maintenance and replacement frequency

- Waste disposal challenges

Ready for the exciting part? The carbon footprint of traditional cooling methods comes not only from energy consumption, but also from the impact of refrigerants. Commonly used HCFC and HFC refrigerants can have a global warming potential (GWP) thousands of times that of CO₂. A refrigerant leak in a large data center can be equivalent to the annual emissions of hundreds of cars. This makes switching to low-GWP refrigerants and cooling technologies that reduce refrigerant use (such as free cooling and liquid cooling) a key strategy for reducing carbon footprint.
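The CO₂-equivalent of a leak is simply the leaked mass multiplied by the refrigerant's GWP. The sketch below uses 100-year GWP values from the IPCC Fourth Assessment Report (2,088 for R-410A, 1,430 for R-134a) and a hypothetical 250 kg leak. For the comparison it also assumes a typical passenger car emits about 4.6 t CO₂ per year.

```python
# 100-year GWP values from the IPCC Fourth Assessment Report (AR4);
# later assessment reports give somewhat different figures.
GWP_100 = {"R-410A": 2088, "R-134a": 1430, "R-744 (CO2)": 1}

CAR_T_CO2_PER_YEAR = 4.6  # assumed annual emissions of a typical passenger car


def leak_co2e_tonnes(refrigerant: str, leaked_kg: float) -> float:
    """CO2-equivalent of a refrigerant leak in tonnes: mass leaked x GWP."""
    return leaked_kg * GWP_100[refrigerant] / 1000


leak = leak_co2e_tonnes("R-410A", 250.0)  # hypothetical 250 kg leak
print(f"{leak:.0f} t CO2e, roughly {leak / CAR_T_CO2_PER_YEAR:.0f} car-years of emissions")
```

With these assumptions a 250 kg R-410A leak is about 522 t CO₂e, on the order of a hundred car-years. The same mass of CO₂ used as a refrigerant (R-744) counts as only 0.25 t.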

3. High-energy-efficiency cooling technologies and solutions

Faced with the energy efficiency challenges of traditional cooling methods, data centers are adopting a range of high-energy-efficiency cooling technologies, and these innovative solutions are revolutionizing the energy footprint of AI infrastructure.

Problem: Breakthrough cooling technologies are needed to improve the energy efficiency of AI data centers.

With the explosive growth of AI computing needs, simply improving existing cooling methods is no longer sufficient to meet energy efficiency challenges. The industry needs innovative cooling technologies that can significantly improve energy efficiency.

Aggravation: Different regions and application scenarios have different needs, and there is no single “best” cooling solution.

Even more challenging is that the choice of cooling technology is affected by many factors, including climate conditions, water availability, energy costs, space constraints, and initial investment capabilities. This requires a flexible and diverse combination of solutions.

Solution: Explore several categories of cooling technologies that have been shown to significantly improve energy efficiency:

Free Cooling Technologies

Free cooling (also known as economizer cooling) uses ambient conditions to provide low-energy cooling:

- Air-side Free Cooling:

- Directly uses cold outside air to cool the data center

- Suitable for cold and temperate climates

- Can reduce cooling energy consumption by 50-90%

- Simple implementation and fast return on investment

- Water-side Free Cooling:

- Utilizes cooling towers or dry coolers instead of compressors

- Usable in a wider range of climates than air-side free cooling

- Can reduce cooling energy consumption by 30-70%

- Requires a larger initial investment

- Hybrid Free Cooling:

- Combines air-side and water-side free cooling

- Automatically switches modes based on ambient conditions

- Maximizes free cooling time throughout the year

- Provides the best balance of energy efficiency and reliability

This is where things get interesting: the benefits of free cooling are highly dependent on geographic location and climate conditions. For example, in the Nordic region, data centers may use free cooling for more than 90% of the year, reducing PUE to close to 1.1; while in tropical regions, this proportion may be less than 20%. This difference is driving some large AI providers to migrate their compute-intensive workloads to regions with more favorable climates, creating new “data center paradises”.
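A first-pass free cooling study usually counts the hours in which outdoor conditions can meet the supply set point without compressors. The sketch below does this for dry-bulb temperature only, with an assumed 24°C supply set point and a 4 K economizer approach. The hourly temperature profiles are synthetic sine waves, not measured climate data, and a real assessment would also check humidity limits.

```python
import math


def free_cooling_fraction(hourly_temps_c, supply_setpoint_c, approach_k):
    """Share of hours in which outdoor air, plus the economizer approach
    temperature, is cold enough to meet the supply set point without
    compressors. Humidity limits are ignored in this sketch."""
    usable = sum(1 for t in hourly_temps_c if t + approach_k <= supply_setpoint_c)
    return usable / len(hourly_temps_c)


def synthetic_climate(annual_mean_c, seasonal_swing_c, daily_swing_c):
    """Hypothetical 8,760-hour profile: a seasonal cosine peaking in late July
    plus a daily cosine peaking at 15:00. Real studies use measured weather."""
    temps = []
    for hour in range(8760):
        seasonal = seasonal_swing_c * math.cos(2 * math.pi * (hour / 8760 - 0.55))
        daily = daily_swing_c * math.cos(2 * math.pi * ((hour % 24) - 15) / 24)
        temps.append(annual_mean_c + seasonal + daily)
    return temps


cold = synthetic_climate(annual_mean_c=7.0, seasonal_swing_c=10.0, daily_swing_c=4.0)
tropical = synthetic_climate(annual_mean_c=27.0, seasonal_swing_c=2.0, daily_swing_c=4.0)
for name, temps in (("cool climate", cold), ("tropical climate", tropical)):
    share = free_cooling_fraction(temps, supply_setpoint_c=24.0, approach_k=4.0)
    print(f"{name}: free cooling in {share:.0%} of hours")
```

With these synthetic profiles the cool climate can use free cooling for almost every hour of the year, while the tropical profile never can, mirroring the contrast described above.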

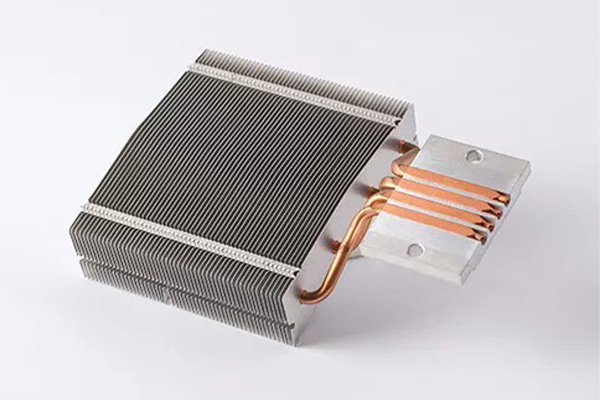

Energy efficiency advantages of liquid cooling technology

Liquid cooling technology provides significant energy efficiency advantages, especially for high-density AI workloads:

- Direct liquid cooling (cold plate):

- Reduce cooling energy consumption by 30-50%

- Eliminate server fans and save 5-15% of energy consumption

- Support higher water supply temperature and increase free cooling opportunities

- PUE can be reduced to 1.1-1.3

- Immersion cooling:

- Reduce cooling energy consumption by 45-60%

- Completely eliminate server fans

- High temperature cooling water (>40°C) can be used to maximize free cooling

- PUE can be close to 1.03-1.1

- Two-phase immersion cooling:

- Highest energy efficiency, reducing cooling energy consumption by 50-65%

- Uses the phase change (boiling and condensation) of the fluid to improve heat transfer efficiency

- Excellent temperature uniformity and reduced hot spots

- Suitable for extremely high-density deployments

Energy efficiency comparison of different cooling technologies

| Cooling Technology | Typical PUE | Cooling Energy Reduction | Applicable Density | Water Use | Initial Cost |
|---|---|---|---|---|---|
| Traditional Air Cooling | 1.6-2.0 | Baseline | Low-Medium | High | Low |
| Air-Side Free Cooling | 1.2-1.5 | 30-60% | Low-Medium | Low | Low-Medium |
| Water-Side Free Cooling | 1.15-1.4 | 40-70% | Medium | Medium-High | Medium |
| Direct Liquid Cooling | 1.1-1.3 | 50-70% | High | Low-Medium | Medium-High |
| Single-Phase Immersion Cooling | 1.05-1.15 | 60-80% | Very High | Low | High |
| Two-Phase Immersion Cooling | 1.03-1.1 | 65-85% | Very High | Very Low | Very High |

Intelligent Cooling Management System

Intelligent cooling management systems use data analysis and automation to improve energy efficiency:

- AI-driven cooling optimization:

- Machine learning algorithms predict cooling needs

- Adjust cooling parameters in real time

- Can reduce cooling energy consumption by 10-30%

- Adapt to dynamic workload changes

- Digital Twin Technology:

- Create a detailed thermal model of the data center

- Simulate the effects of different cooling strategies

- Identify hot spots and inefficient areas

- Optimize airflow and temperature distribution

- Precision Cooling Control:

- Load-based variable speed fan control

- Dynamic temperature set point adjustment

- Cooling resources are allocated on demand

- Reduce overcooling and energy waste
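Load-based fan control and dynamic set points are commonly built on feedback loops. The sketch below is a plain proportional-integral (PI) controller, not a machine learning system, driving fan speed in a toy single-node thermal model. The gains, the 100 kW cooling capacity, the thermal mass and the load step from 60 kW to 80 kW are all hypothetical. AI-driven optimization typically sits on top of loops like this one and adjusts their set points.

```python
from dataclasses import dataclass


@dataclass
class PIController:
    """Proportional-integral controller mapping a temperature error to a fan
    speed fraction, with simple anti-windup (the integral is frozen while the
    output is saturated)."""
    kp: float
    ki: float
    setpoint_c: float
    out_min: float = 0.2   # assumed minimum fan speed
    out_max: float = 1.0
    integral: float = 0.0

    def update(self, measured_c: float, dt_s: float) -> float:
        error = measured_c - self.setpoint_c  # positive when the aisle is too warm
        self.integral += error * dt_s
        out = self.kp * error + self.ki * self.integral
        if not self.out_min <= out <= self.out_max:
            self.integral -= error * dt_s      # anti-windup: undo this step's integration
            out = min(max(out, self.out_min), self.out_max)
        return out


def simulate(steps=600, dt_s=1.0):
    """Toy thermal model: temperature rises with IT heat and falls with
    cooling, which is assumed proportional to fan speed."""
    ctrl = PIController(kp=0.5, ki=0.01, setpoint_c=25.0)
    temp_c, speed = 25.0, 0.0
    capacity_kw, thermal_mass_kj_per_k = 100.0, 500.0  # hypothetical plant values
    for step in range(steps):
        heat_kw = 60.0 if step < steps // 2 else 80.0  # load step halfway through
        speed = ctrl.update(temp_c, dt_s)
        temp_c += (heat_kw - capacity_kw * speed) * dt_s / thermal_mass_kj_per_k
    return temp_c, speed


final_temp, final_speed = simulate()
print(f"final inlet temp {final_temp:.2f} C at fan speed {final_speed:.2f}")
```

After the load step the controller settles back to the 25°C target with fans at about 80% speed, rather than running them flat out all the time.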

But here’s the interesting thing: the value of intelligent cooling systems grows as data centers become larger and more complex. In small facilities, simple control strategies may be sufficient; in large AI clusters, intelligent systems can identify complex optimization opportunities that human operators would struggle to find. For example, a leading cloud provider reported a 28% reduction in cooling energy consumption, along with better temperature stability, after implementing AI-driven cooling optimization in its large AI data center.

Modular and Scalable Cooling Design

Modular and scalable cooling design offers a unique combination of energy efficiency and flexibility:

- Modular Cooling Architecture:

- Deploy cooling resources on demand

- Avoid over-provisioning and inefficient operation

- Support phased expansion

- Optimize partial load efficiency

- Row-level and rack-level cooling:

- Place cooling close to the heat source

- Reduce distribution losses

- Improve cooling accuracy

- Support high-density and low-density mixed deployments

- Scalable Liquid Cooling Infrastructure:

- Support the evolution from partial liquid cooling to full liquid cooling

- Compatible with different types of liquid cooling technologies

- Reduce initial investment barriers

- Provide a technology upgrade path

Ready for the exciting part? A key advantage of modular cooling designs is their ability to adapt to the rapid changes and unpredictability of AI workloads. Traditional centralized cooling systems are typically sized for peak load, so they spend most of their time running inefficiently at partial load. In contrast, modular systems can bring cooling modules online or take them offline based on actual demand, keeping operation efficient across a wide range of load conditions. This approach can improve partial load efficiency by 30-50%, significantly reducing long-term energy consumption.
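The partial-load argument can be illustrated with a simple staging calculation. The sketch below assumes a hypothetical part-load COP curve that peaks at 70% load, then compares one 1,000 kW central plant with four 250 kW modules when only 300 kW of heat must be removed. The curve and sizes are illustrative only, and the result is not meant to reproduce the 30-50% figure above.

```python
import math


def part_load_cop(load_fraction: float) -> float:
    """Hypothetical part-load efficiency curve for one cooling unit:
    COP peaks at 70% load and falls off on either side."""
    return 5.0 - 6.0 * (load_fraction - 0.7) ** 2


def best_staging(heat_kw: float, module_kw: float, modules: int):
    """Try every feasible number of active modules, sharing the load evenly,
    and return (active_count, total_input_kw) with the lowest input power."""
    min_needed = math.ceil(heat_kw / module_kw)
    if min_needed > modules:
        raise ValueError("load exceeds installed cooling capacity")
    best = None
    for n in range(max(min_needed, 1), modules + 1):
        x = heat_kw / (n * module_kw)
        power = heat_kw / part_load_cop(x)
        if best is None or power < best[1]:
            best = (n, power)
    return best


# 300 kW of heat: one 1,000 kW central plant vs. four 250 kW modules.
central_kw = 300.0 / part_load_cop(300.0 / 1000.0)
n, modular_kw = best_staging(300.0, 250.0, 4)
print(f"central plant: {central_kw:.1f} kW input")
print(f"modular: {n} of 4 modules active, {modular_kw:.1f} kW input")
```

Here the best option is to run two modules at 60% load, which needs about 61 kW of input power compared with about 74 kW for the central plant running at 30% load.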

4. Heat recovery: turning waste heat into a resource

Waste heat generated by data centers is often seen as a problem that needs to be dealt with, but innovative heat recovery systems are turning this “waste” into a valuable resource, further improving energy efficiency.

Problem: Traditional data centers discharge a large amount of energy into the environment in the form of waste heat, causing energy waste.

A 1MW data center running at full load around the clock generates about 8,760 megawatt-hours of heat per year (1 MW × 8,760 hours), most of which is simply discharged into the atmosphere. This is not only a large waste of energy but also adds to the local environmental heat burden.

Exacerbation: As AI computing scales up, the waste heat problem will become more serious.

More worryingly, some forecasts expect global data center capacity to grow 2-3 times over the next 5 years, with AI workloads growing fastest. Without heat recovery measures, waste heat emissions will rise significantly.

Solution: Implement a heat recovery system to convert data center waste heat into a useful energy resource:

Data Center Waste Heat Characteristics

Understanding the characteristics of data center waste heat is critical to designing an effective recovery system:

- Temperature Class:

- Air-cooled systems: typically produce 30-45°C waste heat

- Direct liquid cooling: typically produces 45-60°C waste heat

- Immersion cooling: can produce 50-70°C waste heat

- Application potential increases with temperature

- Heat Availability:

- AI clusters produce consistent and stable heat

- Traditional workloads have large heat fluctuations

- Relatively small seasonal variations

- Geographically concentrated for easy recovery

- Heat Quality Considerations:

- Specific enthalpy (heat content per unit mass)

- Heat flow (heat per unit time)

- Temperature stability and predictability

- Time matching with demand

This is where things get interesting: liquid-cooled systems are not only more energy efficient, but also produce higher quality (higher temperature) waste heat, significantly increasing the recovery value. For example, waste heat at 40°C has limited applications, while waste heat at 60°C can be used for district heating, greenhouse heating, and certain industrial processes. This gives liquid cooling technology a dual advantage in terms of energy efficiency and heat recovery.

Application scenarios for heat recovery

Data center waste heat can be used for a variety of applications, creating economic and environmental value:

- Building heating:

- District heating networks

- Office space and residential heating

- Hot water supply

- Swimming pool heating

- Agricultural applications:

- Greenhouse heating

- Aquaculture

- Food drying

- Vertical farms

- Industrial processes:

- Preheating process water

- Low temperature drying processes

- Absorption refrigeration

- Desalination and water treatment

Comparison of data center waste heat recovery applications

| Application | Required temperature | Applicable cooling technology | Economic value | Implementation complexity | Successful cases |
|---|---|---|---|---|---|
| District heating | 60-90°C | High temperature liquid cooling | High | High | Stockholm data center |
| Office heating | 40-60°C | Liquid cooling, immersion | Medium-high | Medium | Facebook Odense |
| Greenhouse heating | 30-50°C | Most systems | Medium | Low-medium | EcoDataCenter |
| Aquaculture | 20-35°C | Almost all systems | Low-medium | Low | Hima Data Center, Norway |
| Absorption cooling | 70-90°C | High-temperature liquid cooling | High | High | IBM Aquasar |

Heat recovery system design

Effective heat recovery systems require careful design to maximize energy value:

- Heat exchange system:

- High-efficiency heat exchangers minimize temperature losses

- Separate (isolated) loops prevent contamination

- Variable-speed pumps optimize energy consumption

- Redundant design ensures reliability

- Temperature boosting technology:

- Heat pumps raise waste heat temperature

- Cascade systems maximize utilization

- Heat storage balances supply and demand

- Auxiliary heating systems supplement demand

- Integration and Control:

- Intelligent control systems balance cooling and recovery

- Demand response optimization

- Seasonal strategy adjustments

- Integration with building management systems

But here’s the interesting thing: the design of the heat recovery system must balance data center cooling needs and thermal energy utilization. In some cases, some cooling efficiency may need to be sacrificed to increase the temperature and value of waste heat. For example, increasing the return water temperature of a liquid cooling system from the typical 25°C to 35°C may slightly reduce cooling efficiency but significantly increase the value of waste heat utilization. This trade-off needs to be evaluated based on the economic and environmental factors of the specific project.
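The value of a higher return temperature becomes clear when a heat pump has to boost waste heat for district heating. The sketch below uses the ideal (Carnot) heating COP, T_sink / (T_sink − T_source) in kelvin, scaled by an assumed second-law efficiency of 50% and an assumed 70°C district heating supply temperature. It ignores heat exchanger approach temperatures.

```python
def carnot_heating_cop(source_c: float, sink_c: float) -> float:
    """Ideal (Carnot) heating COP for lifting heat from source_c to sink_c."""
    t_sink_k = sink_c + 273.15
    lift_k = sink_c - source_c
    if lift_k <= 0:
        raise ValueError("sink must be warmer than source")
    return t_sink_k / lift_k


SECOND_LAW_EFFICIENCY = 0.5  # assumed: real heat pumps reach roughly half of Carnot
SINK_C = 70.0                # assumed district heating supply temperature

for source_c in (25.0, 35.0):
    cop = SECOND_LAW_EFFICIENCY * carnot_heating_cop(source_c, SINK_C)
    electricity_kw = 1000.0 / cop  # electricity needed to deliver 1 MW of heat
    print(f"return water {source_c:.0f} C: COP ~{cop:.2f}, "
          f"{electricity_kw:.0f} kW of electricity per MW of heat")
```

Under these assumptions, raising the return water from 25°C to 35°C cuts the heat pump electricity needed per megawatt of delivered heat by roughly a fifth. This is the gain to weigh against the small loss in cooling efficiency.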

Economic and Environmental Benefits of Heat Recovery

Heat recovery not only has environmental benefits, it can also create significant economic value:

- Economic Benefits:

- Reduced energy purchase costs

- Create new revenue streams

- Reduced carbon taxes and compliance costs

- Improved overall energy efficiency

- Environmental Benefits:

- Reduced carbon emissions

- Reduced reliance on fossil fuels

- Reduced thermal pollution

- Improved resource efficiency

- Community Benefits:

- Provide low-cost heat to the local area

- Create green jobs

- Improved energy security

- Promote the development of a circular economy

Ready for the exciting part? The economic value of heat recovery is rising quickly, driven by three main factors: rising energy costs, the expansion of carbon pricing mechanisms, and technological advances. In regions with high energy costs such as Europe, a heat recovery system for a large data center may generate millions of euros of value per year, with a payback period of 3-5 years. This has turned heat recovery from a “green marketing” exercise into a substantial economic advantage, driving rapid growth in industry adoption.

5. Future Trends in Sustainable Cooling

Data center cooling technology is in a rapid development phase, and several innovations and trends will emerge in the next few years to further improve energy efficiency and sustainability.

Problem: Although current cooling technology has improved, it still needs to continue to innovate to meet the needs of future AI computing.

As AI models continue to grow in scale and complexity, the performance and power consumption of computing hardware will keep rising. Single-rack power is expected to reach 100-200kW within the next few years, which will require more advanced cooling technology.

Intensification: Climate change and resource constraints will further drive the need for sustainable cooling solutions.

More worryingly, global climate change is increasing energy and water pressures, while the regulatory environment is also becoming stricter. These factors together drive data centers to seek more sustainable cooling methods.

Solution: Explore the future development trend of sustainable cooling in data centers and provide guidance for long-term planning:

Next-generation cooling technology

Several breakthrough cooling technologies are moving from the laboratory to commercial applications:

- Chip-level liquid cooling:

- Integrate cooling channels directly into chip packages

- Reduce thermal interface materials and thermal resistance

- May support 2-3 times the power density

- Expected to be commercialized in 3-5 years

- Supercritical CO₂ cooling:

- Utilizes the unique thermodynamic properties of CO₂

- 30-40% more energy efficient than traditional refrigerants

- Global warming potential (GWP) is 1, much lower than traditional refrigerants

- Being tested in multiple pilot projects

- Solid-state cooling technology:

- Thermoelectric cooling does not require refrigerants or compressors

- Precise temperature control that minimizes temperature fluctuations

- High reliability, no moving parts

- Currently used in small applications, expanding to larger systems

This is where things get interesting: these new technologies not only improve energy efficiency, but also have the potential to completely change the way data centers are designed and operated. For example, chip-level liquid cooling could blur the lines between computing and cooling systems, creating highly integrated systems where cooling is no longer a separate subsystem but a core component of chip design.

Renewable Energy and Cooling Integration

Integration of cooling systems with renewable energy is becoming a key strategy for improving sustainability:

- Dynamic Cooling Load Management:

- Adjust cooling strategy based on renewable energy availability

- Leverage thermal storage as a “battery”

- Participate in demand response programs

- Optimize energy costs and carbon footprint

- Onsite Renewable Energy:

- Solar direct-drive cooling systems

- Geothermal energy for free cooling

- Wind energy and cooling tower integration

- Reduced grid dependence and transmission losses

- Energy Ecosystem Integration:

- Data center as an energy hub

- Integration with microgrids and community energy systems

- Provide grid services and flexibility

- Create multiple value streams
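Dynamic cooling load management with thermal storage can be sketched as a simple rule-based dispatch: run the chiller harder when renewable supply is high, store the surplus as chilled water, and draw down the tank when the grid is carbon-intensive. In the sketch below the hourly demand, the solar-shaped renewable share, the 400 kWh tank, the 200 kWh/h chiller and the 0.6/0.3 thresholds are all hypothetical. Tank losses and changes in chiller efficiency are ignored.

```python
import math


def schedule_chiller(demand_kwh, green_share, tank_kwh, chiller_kwh,
                     high=0.6, low=0.3):
    """Causal rule-based dispatch of a chiller plus a chilled-water tank.

    Green hours (share >= high): run the chiller up to capacity, serve the load
    and put the surplus into the tank. Brown hours (share <= low): serve the
    load from the tank first. Other hours: simply follow the load.
    Returns the chiller's cooling output for each hour (kWh).
    """
    level = 0.0  # stored cooling, kWh
    output = []
    for demand, share in zip(demand_kwh, green_share):
        if demand > chiller_kwh:
            raise ValueError("this sketch assumes the chiller can always meet demand")
        if share >= high:
            make = min(chiller_kwh, demand + (tank_kwh - level))
        elif share <= low:
            make = max(0.0, demand - level)
        else:
            make = demand
        level += make - demand  # surplus charges the tank, deficit drains it
        output.append(make)
    return output


def brown_weighted(cooling_kwh, green_share):
    """Cooling production weighted by each hour's non-renewable share,
    used here as a rough proxy for carbon-intensive electricity."""
    return sum(c * (1.0 - g) for c, g in zip(cooling_kwh, green_share))


# Hypothetical day: flat 100 kWh/h demand and a solar-shaped renewable share.
demand = [100.0] * 24
green = [0.2 + 0.6 * max(0.0, math.sin(math.pi * (h - 6) / 12)) for h in range(24)]
plan = schedule_chiller(demand, green, tank_kwh=400.0, chiller_kwh=200.0)
print(f"baseline: {brown_weighted(demand, green):.0f}, "
      f"with storage: {brown_weighted(plan, green):.0f}")
```

The rule keeps the tank within its limits and delivers exactly the daily cooling demand, while shifting 400 kWh of chiller output from evening hours into the sunniest part of the day. A production system would replace the fixed thresholds with forecasts and an optimizer.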

Comparison of Future Cooling Technology Trends

| Technology Trends | Energy Efficiency Improvement Potential | Expected Commercialization Time | Sustainability Impact | Main Challenges |
|---|---|---|---|---|
| Chip-Level Liquid Cooling | 30-50% | 3-5 years | High | Standardization, cost |
| Supercritical CO₂ cooling | 20-40% | 2-4 years | Very high | Safety, system complexity |
| Solid-state cooling | 10-30% | 5-8 years | High | Scale, efficiency |
| AI-optimized cooling | 15-25% | Commercially available, ongoing development | Medium-high | Data quality, integration |
| Waste heat utilization network | Indirect improvement of overall efficiency | Regionally developing | Very high | Infrastructure, coordination |

Circular economy and resource efficiency

Circular economy principles are changing the design and operation of data center cooling systems:

- Water recycling:

- Closed-loop water systems minimize consumption

- Rainwater collection and greywater reuse

- Advanced water treatment to extend service life

- Zero liquid discharge (ZLD) technology

- Material sustainability:

- Low environmental impact refrigerants

- Recyclable and biodegradable materials

- Extended equipment life cycle

- Modular design facilitates upgrades rather than replacements

- Full life cycle approach:

- Consider environmental impacts from the design stage

- Supply chain sustainability requirements

- Equipment reuse and remanufacturing

- Waste management and recycling

But here’s an interesting phenomenon: the circular economy approach is not only environmentally friendly, but may also create economic value. For example, some data center operators have found that by extending the life of cooling equipment, reusing components and optimizing maintenance, the total cost of ownership (TCO) can be reduced by 15-25%. At the same time, although the initial investment of water recycling systems is higher, they may provide significant long-term cost savings and risk mitigation in water-scarce areas.

Impact of policies and standards

Policies, regulations and industry standards are shaping the future of data center cooling:

- Carbon pricing mechanisms:
  - Carbon taxes and expansion of emissions trading systems
  - Raising the cost of high-carbon cooling methods
  - Creating economic incentives for low-carbon technologies
  - Driving innovation and investment

- Energy efficiency regulations:
  - Tightening minimum energy efficiency standards
  - Mandatory energy audits and reporting
  - Performance-based building regulations
  - Tax incentives and subsidy programs

- Industry standards and certifications:
  - Green data center certification expansion
  - Standardization of cooling technology performance
  - Environmental impact disclosure requirements
  - Sustainable procurement standards
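How carbon pricing raises the cost of high-carbon cooling is simple arithmetic: cooling electricity × grid emission factor × carbon price. A minimal sketch follows; the grid emission factor, the average cooling power of the air-cooled and liquid-cooled options, and the carbon prices are all assumed values for illustration.

```python
HOURS_PER_YEAR = 8760
GRID_T_PER_MWH = 0.4  # assumed grid emission factor, tCO2 per MWh


def annual_carbon_cost(cooling_mwh: float, grid_t_per_mwh: float, price_per_t: float) -> float:
    """Carbon cost ($/year) of cooling electricity: energy x emission factor x price."""
    return cooling_mwh * grid_t_per_mwh * price_per_t


# Hypothetical average cooling power draw (MW) for the same 1 MW IT load.
cooling_mw = {"air-cooled": 0.5, "liquid-cooled": 0.15}

for price in (25, 75, 150):  # illustrative carbon prices, $/tCO2
    costs = {
        name: annual_carbon_cost(mw * HOURS_PER_YEAR, GRID_T_PER_MWH, price)
        for name, mw in cooling_mw.items()
    }
    gap = costs["air-cooled"] - costs["liquid-cooled"]
    print(f"${price}/t: air ${costs['air-cooled']:,.0f}, "
          f"liquid ${costs['liquid-cooled']:,.0f}, gap ${gap:,.0f}")
```

Because the cost is linear in the carbon price, the gap between the high-carbon and low-carbon option grows in direct proportion as prices rise.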

Ready for the exciting part? The policy environment is changing faster and faster and may become a key driver of cooling technology adoption. For example, under the Climate Neutral Data Centre Pact, a self-regulatory initiative of European cloud and data center operators, signatories have committed to making their data centers climate neutral by 2030, which pushes them toward efficient cooling and heat recovery. At the same time, restrictions on data center water use are being implemented or considered in several regions, which will accelerate the development of dry cooling and closed-loop systems. This policy-driven shift could produce faster technology adoption than market forces alone.

Frequently Asked Questions

Q1: How is the cooling energy consumption of AI data centers different from that of traditional data centers?

AI data centers differ from traditional data centers in four ways that matter for cooling energy. First, power density: AI cluster racks typically draw 30-80kW, compared with 5-10kW in traditional data centers, so they need far more cooling capacity. Second, load profile: AI training often runs at close to 100% utilization for weeks, producing a sustained high heat load, whereas traditional workloads fluctuate widely. Third, hot spot concentration: AI accelerators create very dense hot spots, with local heat flux reportedly reaching up to 500W/cm² in some areas. Finally, temperature sensitivity: AI workloads are particularly sensitive to temperature fluctuations, and thermal throttling directly slows training. Because of these differences, traditional cooling methods become drastically less efficient in AI environments: fan energy consumption may increase 4-8 times, and overall PUE may rise from 1.5-2.0 to 2.0-2.5 unless advanced cooling is used. Studies suggest that in high-density AI environments, liquid cooling can cut cooling energy consumption by 50-70% while providing a more stable thermal environment, which makes it the preferred solution for AI data centers despite its higher initial investment.
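One reason fan energy climbs so steeply is the fan affinity law: for a given fan and air path, airflow scales with fan speed and fan power with speed cubed, so power scales roughly with airflow cubed. The sketch below assumes a fixed fan and duct system and a constant 12 K air temperature rise, both simplifications; real facilities add fans and change air paths, so treat it as an illustration of the scaling, not a prediction.

```python
AIR_RHO = 1.2    # kg/m^3, air density near room temperature
AIR_CP = 1005.0  # J/(kg*K), specific heat of air


def required_airflow_m3s(heat_kw: float, delta_t_k: float = 12.0) -> float:
    """Airflow needed to carry heat_kw away at a given air temperature rise.

    From Q = rho * V * cp * dT, solved for the volumetric flow V.
    """
    return heat_kw * 1000 / (AIR_RHO * AIR_CP * delta_t_k)


def fan_power_ratio(flow_ratio: float) -> float:
    """Fan affinity law: same fan and ducts, so power scales with flow cubed."""
    return flow_ratio ** 3


base_kw = 10.0
for rack_kw in (16.0, 20.0):
    flow_ratio = required_airflow_m3s(rack_kw) / required_airflow_m3s(base_kw)
    print(f"{base_kw:.0f} -> {rack_kw:.0f} kW/rack: airflow x{flow_ratio:.1f}, "
          f"fan power x{fan_power_ratio(flow_ratio):.1f}")
```

Under these assumptions, a 1.6-2× increase in required airflow implies roughly 4-8× the fan power, which is why air cooling struggles as rack density climbs.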

Q2: How does liquid cooling technology improve the energy efficiency of data centers?

Liquid cooling improves data center energy efficiency through several mechanisms. First, it transfers heat far more effectively: the volumetric heat capacity of water is roughly 3,500 times that of air, so a much smaller flow carries the same heat. Second, it eliminates or reduces fan use; server and CRAC fans may account for 15-25% of total energy consumption in a traditional data center, and liquid cooling greatly reduces or removes this load. Third, it allows higher coolant temperatures (typically 45-60°C rather than the traditional 25-35°C), which makes the cooling plant more efficient and increases opportunities for free cooling. Fourth, it reduces distribution losses by bringing cooling directly to the heat source. Finally, it produces higher-grade waste heat that is easier to reuse, further improving overall efficiency. Deployment data indicate that direct liquid cooling can lower PUE from 1.6-2.0 to 1.1-1.3 and cut total energy consumption by 30-50%, while immersion cooling can reach a PUE of about 1.03-1.1 and cut energy consumption by 45-60%. For a 1MW AI data center, this could mean saving hundreds of thousands of dollars in energy costs and thousands of tons of carbon emissions each year.
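Both headline numbers in this answer can be checked with back-of-the-envelope arithmetic: the flow needed to remove heat follows Q = ρ·V̇·c_p·ΔT, and annual savings at constant IT load equal IT load × ΔPUE × 8,760 hours. The sketch uses textbook property values for air and water near room temperature; the 50 kW rack, the 10 K temperature rise, the PUE pair, the electricity price and the emission factor are assumptions for illustration.

```python
AIR_RHO, AIR_CP = 1.2, 1005.0        # kg/m^3, J/(kg*K), near room temperature
WATER_RHO, WATER_CP = 997.0, 4186.0  # kg/m^3, J/(kg*K)
HOURS_PER_YEAR = 8760


def volumetric_flow_m3s(heat_w: float, rho: float, cp: float, delta_t_k: float) -> float:
    """Flow rate needed to remove heat_w at a temperature rise of delta_t_k."""
    return heat_w / (rho * cp * delta_t_k)


def annual_savings(it_load_kw: float, pue_before: float, pue_after: float,
                   price_per_kwh: float, kg_co2_per_kwh: float) -> tuple[float, float]:
    """Energy cost ($) and CO2 (tonnes) saved per year when PUE drops at constant IT load."""
    saved_kwh = it_load_kw * (pue_before - pue_after) * HOURS_PER_YEAR
    return saved_kwh * price_per_kwh, saved_kwh * kg_co2_per_kwh / 1000


rack_w = 50_000  # hypothetical 50 kW AI rack, 10 K coolant temperature rise
air = volumetric_flow_m3s(rack_w, AIR_RHO, AIR_CP, 10)
water = volumetric_flow_m3s(rack_w, WATER_RHO, WATER_CP, 10)
print(f"air {air:.2f} m^3/s, water {water * 1000:.2f} L/s, ratio ~{air / water:,.0f}x")

# Assumed: 1 MW IT load, PUE 1.6 -> 1.2, $0.10/kWh, 0.4 kg CO2/kWh
dollars, tonnes = annual_savings(1000, 1.6, 1.2, 0.10, 0.4)
print(f"~${dollars:,.0f} and ~{tonnes:,.0f} t CO2 saved per year")
```

With these inputs the water-to-air flow ratio comes out at roughly 3,500, and the savings at about $350,000 and 1,400 t of CO₂ per year; larger PUE reductions or dirtier grids scale the carbon figure up proportionally.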

Q3: What are the main applications and economic benefits of data center waste heat recovery?

Data center waste heat can be put to several uses, with significant economic benefits. The main applications are building heating (district heating networks, office and residential heating, hot water supply), which can use waste heat at 40-90°C; agriculture (greenhouse heating, aquaculture, food drying), which typically needs 30-50°C; and industrial processes (preheating process water, low-temperature drying, absorption refrigeration), which typically need 50-90°C. On the economic side, heat recovery directly reduces energy purchases: the heat recovery system of a large data center may save hundreds of thousands to millions of dollars a year in heating costs. It can also create new revenue by selling heat to third parties, reduce carbon taxes and compliance costs (a benefit that grows as carbon pricing expands), and strengthen brand image by meeting customer and investor expectations on sustainability. Payback periods vary by application and region: roughly 3-5 years in Europe, where energy costs are high, and 5-8 years where energy is cheaper. Notably, the higher-temperature waste heat (45-70°C) produced by liquid cooling systems is more valuable and suits a wider range of applications, which is another factor driving liquid cooling adoption.
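The payback figures above come from dividing the up-front cost of the heat recovery system by the net yearly income or savings it produces. This minimal, undiscounted sketch assumes that nearly all IT power ends up as heat; the 5 MW load, 60% recovery fraction, 6,000 heating hours, heat prices, capex and opex are hypothetical inputs, not data from any deployment.

```python
def recoverable_heat_mwh(it_load_mw: float, recovery_fraction: float, hours: float) -> float:
    """Heat available for reuse, assuming essentially all IT power becomes heat."""
    return it_load_mw * recovery_fraction * hours


def simple_payback_years(capex: float, heat_sold_mwh: float,
                         heat_price_per_mwh: float, annual_opex: float) -> float:
    """Undiscounted payback: up-front cost divided by net yearly cash flow."""
    net = heat_sold_mwh * heat_price_per_mwh - annual_opex
    if net <= 0:
        return float("inf")  # the system never pays back
    return capex / net


heat = recoverable_heat_mwh(it_load_mw=5, recovery_fraction=0.6, hours=6000)

# Hypothetical heat prices ($/MWh) for a high-cost and a low-cost energy market.
for region, price in (("high energy cost", 40), ("low energy cost", 25)):
    years = simple_payback_years(capex=2_500_000, heat_sold_mwh=heat,
                                 heat_price_per_mwh=price, annual_opex=100_000)
    print(f"{region}: {heat:,.0f} MWh/yr, payback {years:.1f} years")
```

With these made-up inputs the payback is about 4 years at the higher heat price and about 7 years at the lower one, which shows how strongly the local heat price drives the result.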

Q4: How should you evaluate and select cooling technology for a specific AI data center?

Choosing a cooling technology for a specific AI data center means weighing four groups of factors. Technical factors include power density (below 10kW per rack, high-efficiency air cooling may suffice; 10-30kW suits hybrid or row-level cooling; above 30kW usually requires liquid cooling), expansion plans (expected growth rate and final scale), compatibility with existing infrastructure, and hardware compatibility (not every server supports every cooling technology). Environmental factors include climate (which determines the potential for free cooling), water availability (which determines whether water-based cooling is feasible), energy sources and costs (which shape the economics of each option), and local regulations and permitting requirements. Economic factors include initial capital expenditure, operating costs (energy, water, maintenance), total cost of ownership (TCO), and expected return on investment. Operational factors include team skills and experience, reliability and redundancy requirements, maintenance complexity, and adaptability to future technology. Best practice is a phased approach: run a small-scale pilot to verify performance in a real environment; use computational fluid dynamics (CFD) simulation to compare options; consider a hybrid design that uses different cooling technologies for different zones and workloads; and evaluate full life cycle cost and environmental impact rather than initial investment alone.
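The power density bands above can serve as a first-pass filter, followed by a weighted score across the four factor groups. This is a hypothetical sketch: the function names, the weights and the 1-5 scores for each candidate are illustrative assumptions that a real team would replace with its own pilot, CFD and TCO results.

```python
# Assumed weights for the four factor groups; they sum to 1.0.
WEIGHTS = {"technical": 0.35, "environmental": 0.20, "economic": 0.30, "operational": 0.15}


def density_band(rack_kw: float) -> str:
    """First-pass shortlist from rack power density, using the bands in the text."""
    if rack_kw < 10:
        return "high-efficiency air cooling"
    if rack_kw <= 30:
        return "hybrid or row-level cooling"
    return "liquid cooling"


def weighted_score(scores: dict[str, float]) -> float:
    """Weighted sum of 1-5 scores, one per factor group."""
    return sum(weight * scores[group] for group, weight in WEIGHTS.items())


# Hypothetical scores for two liquid cooling options at a 45 kW/rack site.
candidates = {
    "direct-to-chip": {"technical": 4, "environmental": 4, "economic": 4, "operational": 4},
    "immersion": {"technical": 5, "environmental": 4, "economic": 3, "operational": 2},
}

print(density_band(45))
ranked = sorted(candidates.items(), key=lambda kv: weighted_score(kv[1]), reverse=True)
for name, scores in ranked:
    print(f"{name}: {weighted_score(scores):.2f}")
```

Keeping the density filter separate from the scoring makes it easy to re-run the ranking when pilot data changes the scores, without revisiting which technology families are in scope.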

Q5: What are the sustainability trends for data center cooling in the next five years?

Over the next five years, the sustainability of data center cooling is likely to be shaped by four sets of trends. In technology, chip-level liquid cooling, which integrates coolant channels directly into the chip package, is expected to reach commercial use within 3-5 years and may support 2-3 times today's power density; supercritical CO₂ cooling exploits the unusual thermodynamic properties of CO₂, is claimed to be 30-40% more energy efficient than traditional refrigerants, and has a global warming potential of just 1; and solid-state cooling needs neither refrigerants nor compressors, offering precise temperature control and high reliability. In integration, dynamic cooling load management will adapt cooling strategies to renewable energy availability to optimize energy costs and carbon footprint; on-site renewables will power cooling systems directly to reduce grid dependence; and data centers will act as energy hubs connected to microgrids and community energy systems. In resource efficiency, closed-loop water systems will minimize water consumption, with rainwater collection and greywater reuse becoming standard; low-impact refrigerants and recyclable materials will be used more widely; and a full life cycle approach will address environmental impacts from the design stage. In policy, expanding carbon pricing will raise the cost of high-carbon cooling; stricter energy efficiency regulations will set minimum standards; and industry certification will promote transparency and best practice. Together, these trends point to a more sustainable future: by 2030, leading data centers are expected to reach a PUE close to 1.1, water usage effectiveness (WUE) approaching zero, reuse of more than 90% of their waste heat, and carbon footprints 70-80% lower, mainly through a combination of cooling innovation, renewable energy and circular economy practices.
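Targets like these are usually tracked with The Green Grid's metrics: PUE (total facility energy divided by IT energy), WUE (annual site water use in liters divided by IT energy in kWh), and ERF, the energy reuse factor (reused energy divided by total facility energy), which is closely related to the share of waste heat that is recycled. The sketch below computes all three from a year of meter readings; the `AnnualMeters` class and the sample figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AnnualMeters:
    """Hypothetical yearly meter readings for one site."""
    total_facility_kwh: float
    it_kwh: float
    site_water_liters: float
    reused_energy_kwh: float  # heat exported for reuse, expressed in kWh


def pue(m: AnnualMeters) -> float:
    """Power usage effectiveness: total facility energy / IT energy."""
    return m.total_facility_kwh / m.it_kwh


def wue(m: AnnualMeters) -> float:
    """Water usage effectiveness in L/kWh: site water / IT energy."""
    return m.site_water_liters / m.it_kwh


def erf(m: AnnualMeters) -> float:
    """Energy reuse factor: reused energy / total facility energy."""
    return m.reused_energy_kwh / m.total_facility_kwh


# Illustrative site: 1 MW average IT load for a year, near the 2030 targets.
meters = AnnualMeters(
    total_facility_kwh=9_636_000,
    it_kwh=8_760_000,
    site_water_liters=400_000,
    reused_energy_kwh=8_672_400,
)
print(f"PUE {pue(meters):.2f}, WUE {wue(meters):.3f} L/kWh, ERF {erf(meters):.2f}")
```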