Introduction

With the rapid development of artificial intelligence technology, thermal management of AI hardware has become a key determinant of system performance, reliability, and energy efficiency. From single-GPU workstations to large-scale data centers, an effective thermal management strategy is essential to fully realize the potential of AI hardware. This article is a guide to thermal management best practices, from design to deployment, to help you optimize AI system performance and extend hardware life.

1. Uniqueness of AI Hardware Thermal Challenges

The thermal challenges faced by AI hardware are significantly different from those of traditional computing hardware. Understanding these unique features is the basis for developing an effective thermal strategy.

The problem: AI accelerators generate heat with fundamentally different characteristics than traditional CPUs and GPUs.

Imagine this scenario: a standard enterprise server CPU might have a TDP of 150-250 watts, while a single AI accelerator like the NVIDIA H100 can reach 700 watts, with future models expected to break 1,000 watts. This surge in power density creates unprecedented thermal challenges.

Here’s the kicker: Not only is the total heat generated by AI accelerators higher, the heat distribution is also more uneven. Hotspot densities in certain areas can be as high as 500W/cm², 5-10 times that of traditional CPUs, and these concentrated hotspots can cause localized temperature spikes even when overall temperatures appear manageable.

Exacerbation: The characteristics of AI workloads make the thermal challenge worse.

More concerning, AI training workloads often run continuously for days or weeks at near 100% utilization, with few low-load periods to give the cooling system a break. This is in stark contrast to the volatility of traditional workloads.

Research suggests that if heat dissipation is insufficient during high-intensity AI training, an accelerator may reach its thermal throttling threshold within a few minutes. The resulting 15-30% drop in performance directly slows training and reduces efficiency.

Solution: Understand the unique challenges of AI hardware heat dissipation to lay the foundation for developing an effective heat dissipation strategy.

Thermal characteristics of AI accelerators

A deep understanding of the thermal characteristics of AI accelerators is crucial for designing effective cooling systems:

- High thermal density:

- Modern AI accelerators (GPU/TPU/ASIC) have a TDP range of 300-700 watts

- Chip area is typically 600-900mm²

- Average thermal density can reach 0.8-1.0 W/mm²

- Hot spots may be as high as 2-3 W/mm²

- Uneven thermal distribution:

- The compute unit area has the highest thermal density

- The memory interface area has a medium thermal density

- The control logic area has a low thermal density

- The temperature difference inside the chip may reach 15-25°C

- Dynamic thermal behavior:

- Thermal output during training is close to the TDP upper limit

- Thermal output fluctuates greatly during inference

- Batch size affects thermal output pattern

- Different model architectures produce different thermal characteristics

Here is a key point: the thermal characteristics of AI accelerators are not only quantitative changes, but also qualitative differences. Traditional CPU cooling design assumes relatively uniform heat distribution and periodic load fluctuations, while AI accelerators need to deal with extreme hot spots and continuous high loads. This requires a fundamental rethinking of cooling strategies.
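The average density figures above follow from simple arithmetic. The sketch below assumes a hypothetical 700 W accelerator on an 800 mm² die; both values are picked from the ranges quoted above for illustration and do not describe a specific product.

```python
def power_density_w_per_mm2(tdp_watts: float, die_area_mm2: float) -> float:
    """Average power density over the die, in W/mm^2."""
    if die_area_mm2 <= 0:
        raise ValueError("die area must be positive")
    return tdp_watts / die_area_mm2


def w_per_mm2_to_w_per_cm2(density_w_per_mm2: float) -> float:
    """Convert W/mm^2 to W/cm^2 (1 cm^2 = 100 mm^2)."""
    return density_w_per_mm2 * 100.0


# Hypothetical part: 700 W TDP on an 800 mm^2 die (illustrative values only).
avg = power_density_w_per_mm2(700.0, 800.0)
print(f"average: {avg:.3f} W/mm^2 = {w_per_mm2_to_w_per_cm2(avg):.1f} W/cm^2")
```

Keeping both units at hand is useful because chip-level figures are often quoted in W/mm² while cooling hardware is often rated in W/cm².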

Thermal Impact of AI Workloads

The characteristics of AI workloads directly affect cooling requirements and strategies:

- Sustained High Load:

- Large model training can last for weeks

- GPU utilization is often maintained at 90-100%

- There is almost no natural “cooling down period”

- Cooling systems must be designed to run at full load for a long time

- Batch Size Impact:

- Larger batch sizes generally produce higher thermal output

- Smaller batches may cause more frequent thermal fluctuations

- Mixed precision training affects power consumption and thermal output

- Optimizing batch size can balance performance and thermal management

- Model Architecture Differences:

- Convolutional Networks (CNN) generally produce more uniform thermal distribution

- Transformer models may produce more concentrated hot spots

- Recurrent Networks (RNN) thermal characteristics change over time

- Hybrid architectures create complex thermal patterns

AI Workload Types and Cooling Requirements

| Workload Type | Thermal Output Characteristics | Duration | Cooling Challenges | Recommended Cooling Methods |
|---|---|---|---|---|
| Large model training | Close to TDP upper limit, stable | Days to weeks | Long-term high thermal load | Liquid cooling, immersion cooling |
| Small model training | 80-95% TDP, relatively stable | Hours to days | Medium thermal load | High-efficiency air cooling, direct liquid cooling |
| Batch inference | 70-90% TDP, periodic | Continuous operation, load fluctuation | Thermal cycling, fluctuation management | Hybrid cooling, phase change materials |
| Real-time inference | 40-70% TDP, high fluctuation | Continuous operation, unstable load | Rapid thermal changes | High-response air cooling, thermal buffering |

Impact of temperature on AI performance

Temperature affects AI hardware performance more strongly than it affects traditional computing hardware:

- Thermal throttling mechanism:

- Modern AI accelerators automatically reduce clock speed when the temperature threshold is reached

- Typical throttling threshold is 85-95°C

- Throttling may cause a 15-30% drop in performance

- Seriously affects training speed and consistency

- Accuracy and stability impact:

- High temperatures may increase computational error rates

- Temperature fluctuations affect training convergence

- Some algorithms are particularly sensitive to temperature changes

- May lead to non-reproducible results

- Long-term reliability considerations:

- Continuous high temperatures accelerate component aging

- Thermal cycling increases physical stress

- Impacts interconnect and solder joint reliability

- May shorten hardware life by 30-50%
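To put the throttling figures above in perspective, the sketch below converts a sustained performance drop into extra wall-clock time. It assumes, as a simplification, that throttling persists for the whole run and that the performance drop translates one-to-one into lost training throughput; the 10-day job is a hypothetical example.

```python
def training_time_multiplier(throughput_drop: float) -> float:
    """Factor by which wall-clock time grows when throughput falls by this fraction."""
    if not 0.0 <= throughput_drop < 1.0:
        raise ValueError("throughput_drop must be in [0, 1)")
    return 1.0 / (1.0 - throughput_drop)


baseline_days = 10.0  # hypothetical unthrottled training run
for drop in (0.15, 0.30):
    factor = training_time_multiplier(drop)
    print(f"{drop:.0%} drop -> x{factor:.2f} time, {baseline_days * factor:.1f} days")
```

Note that the time penalty is larger than the headline percentage: a 30% throughput drop lengthens the run by roughly 43%, not 30%.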

But here’s an interesting phenomenon: temperature not only affects hardware performance, it may also affect the AI model itself. Studies have shown that temperature fluctuations during training can cause training instability, affecting model convergence and final accuracy. For example, one study found that models trained in a temperature-unstable environment may have a final test accuracy that is 1-2 percentage points lower, which can be a significant difference in highly competitive fields. This makes temperature stability a key consideration in AI system design.

2. System-level thermal design principles

Effective AI hardware thermal management requires a system-level perspective, considering every link in the heat flow path, rather than focusing only on individual components.

Problem: Optimizing the heat dissipation of individual components in isolation does not solve the system-level thermal challenge.

When planning AI systems, many organizations make the mistake of focusing only on the heat dissipation of the accelerator itself, ignoring other heat sources and heat flow paths in the system. This one-sided approach often leads to suboptimal thermal performance.

Aggravation: As AI system density increases, thermal interactions between components become more complex.

More worryingly, as more accelerators and auxiliary components are integrated into a more compact space, thermal interactions and heat accumulation issues become more serious. The heat output of one component can significantly affect the temperature of nearby components.

Solution: Adopt a system-level thermal design approach, considering the entire heat flow path and all heat sources:

Heat flow path analysis

Understanding and optimizing the entire heat flow path is the basis of system-level thermal design:

- Heat flow path components:

- Heat sources (chips, memory, power supplies, etc.)

- Thermal interface materials (TIM)

- Heat sinks/cold plates

- Heat transfer media (air, liquid)

- Heat exchangers

- Ambient heat dissipation

- Thermal resistance analysis:

- Identify the main thermal resistance in the thermal path

- Quantify the thermal resistance contribution of each link

- Prioritize solving the largest thermal resistance link

- Balance the thermal performance and cost of each link

- System-level thermal simulation:

- Computational fluid dynamics (CFD) simulation

- Thermal network analysis

- Transient thermal response evaluation

- Extreme condition testing

This is where things get interesting: In high-performance AI systems, thermal interface materials (TIMs) are often the main thermal resistance in the entire thermal path, even though they are only tens to hundreds of microns thick. The thermal conductivity of conventional silicone grease is only 5-10 W/m·K, while the thermal conductivity of the chip and heat sink can be as high as hundreds of W/m·K. This makes TIM a key point in system optimization. For example, replacing standard silicone grease with liquid metal TIM can reduce the interface thermal resistance by 70-80% and reduce the chip temperature by 5-15°C, even if other components remain unchanged.
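The series thermal path described above can be sketched as a simple resistance network, where junction temperature equals coolant temperature plus power times the sum of the resistances. All resistance values, the 700 W load, and the 30°C coolant temperature below are hypothetical numbers chosen for illustration, not measurements.

```python
def junction_temp_c(power_w: float, coolant_temp_c: float, resistances: dict) -> float:
    """Steady-state junction temperature for thermal resistances in series (K/W)."""
    return coolant_temp_c + power_w * sum(resistances.values())


# Hypothetical resistances in K/W; the TIM is deliberately the largest term.
baseline = {"die_and_lid": 0.010, "tim": 0.020, "cold_plate": 0.015}
# Assumed improvement: a better TIM cuts interface resistance by 75%.
improved = dict(baseline, tim=baseline["tim"] * 0.25)

t_base = junction_temp_c(700.0, 30.0, baseline)
t_new = junction_temp_c(700.0, 30.0, improved)
dominant = max(baseline, key=baseline.get)

print(f"dominant resistance: {dominant}")
print(f"baseline {t_base:.1f} C, improved TIM {t_new:.1f} C, saving {t_base - t_new:.1f} C")
```

Ranking the entries of such a table is a quick way to decide which link in the path deserves attention first.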

Airflow Management Principles

For air-cooled systems, effective airflow management is the key to optimizing thermal performance:

- Airflow path design:

- Minimize airflow resistance

- Avoid short circuits and backflow

- Ensure uniform airflow distribution

- Eliminate dead zones and vortices

- Pressure management:

- Maintain appropriate positive or negative pressure

- Balance intake and exhaust

- Consider fan characteristic curves

- Optimize fan placement and direction

- Thermal isolation strategy:

- Separate hot and cold aisles

- Physical partitions and guide plates

- Temperature zones

- Prevent hot air recirculation

Best Practices for Airflow Management

| Strategy | Implementation Method | Effect | Applicable Scenarios |
|---|---|---|---|
| Isolate hot and cold aisles | Physical partitions, close cold aisles | Reduce temperature by 5-10°C | Rack-level deployment |
| Guide plate installation | Customized guide plates to optimize airflow paths | Eliminate hot spots and even out temperatures | Inside the server |
| Intake and exhaust optimization | Front intake and rear exhaust, top auxiliary exhaust | Reduce hot air recirculation | Cabinet design |
| Fan speed control | Dynamic adjustment based on temperature | Balance noise and cooling | All air cooling systems |
| Sealing management | Block unused spaces to prevent airflow short-circuiting | Improve cooling efficiency by 10-20% | Racks and cabinets |
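When sizing fans and airflow paths, a useful first estimate is the volume of air needed to carry a given heat load at a given inlet-to-outlet temperature rise, using Q = P / (ρ · c_p · ΔT). The sketch below assumes standard air properties and a hypothetical 10 kW rack with a 15°C air temperature rise; real designs also need margin for bypass and recirculation.

```python
AIR_DENSITY_KG_M3 = 1.2       # assumed: air near sea level at about 20 C
AIR_CP_J_PER_KG_K = 1005.0    # assumed: specific heat of dry air
M3S_TO_CFM = 2118.88          # 1 m^3/s expressed in cubic feet per minute


def required_airflow_m3s(heat_w: float, delta_t_c: float) -> float:
    """Volumetric airflow needed to remove heat_w with an air temperature rise of delta_t_c."""
    if delta_t_c <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_PER_KG_K * delta_t_c)


flow = required_airflow_m3s(10_000.0, 15.0)  # hypothetical 10 kW rack
print(f"{flow:.3f} m^3/s, about {flow * M3S_TO_CFM:.0f} CFM")
```

Halving the allowed temperature rise doubles the required airflow, which is one reason containment and sealing pay off: they let the same air do more work.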

Liquid Cooling System Design Principles

Liquid cooling systems provide higher heat dissipation efficiency, but they need to follow specific design principles:

- Coolant Selection:

- Thermal properties (thermal conductivity)

- Fluid properties (viscosity, density)

- Chemical compatibility and stability

- Environmental and safety considerations

- Flow path design:

- Minimize pressure loss

- Avoid bubbles and air locks

- Ensure uniform flow distribution

- Optimize flow rate and turbulence

- Redundancy and reliability:

- N+1 or 2N redundant configurations

- Leak detection and protection

- Quick disconnect connections

- Alternate cooling paths

But here’s an interesting phenomenon: the design of a liquid cooling system must balance thermal performance against fluid dynamics. For example, increasing the coolant flow rate can improve heat transfer efficiency, but it also increases pressure loss and pump power requirements. Research shows that most liquid cooling systems have an “optimal flow rate”, usually in the range of 1.5-2.5 GPM (gallons per minute), at which thermal performance and pump power are best balanced. Beyond this range, energy efficiency begins to decline as increases in pump power outstrip the gains in thermal performance.
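The flow-rate trade-off can be illustrated with two rough relations: coolant temperature rise ΔT = P / (ṁ · c_p), and pump power that scales with roughly the cube of flow in a fixed loop. The sketch below assumes plain water as the coolant, a hypothetical 700 W heat load, and the cube law as an approximation; it ignores the change in cold-plate heat transfer coefficient with flow.

```python
GPM_TO_M3_PER_S = 3.785411784e-3 / 60.0  # US gallons per minute -> m^3/s


def coolant_temp_rise_c(heat_w: float, flow_gpm: float,
                        density_kg_m3: float = 997.0,   # assumed: water
                        cp_j_per_kg_k: float = 4180.0) -> float:
    """Coolant temperature rise across a cold plate absorbing heat_w."""
    mass_flow_kg_s = flow_gpm * GPM_TO_M3_PER_S * density_kg_m3
    return heat_w / (mass_flow_kg_s * cp_j_per_kg_k)


def relative_pump_power(flow_gpm: float, reference_gpm: float) -> float:
    """Pump power relative to a reference flow, assuming power ~ flow^3."""
    return (flow_gpm / reference_gpm) ** 3


for gpm in (1.5, 2.5, 4.0):
    rise = coolant_temp_rise_c(700.0, gpm)
    pump = relative_pump_power(gpm, 1.5)
    print(f"{gpm:.1f} GPM: coolant rise {rise:.2f} C, pump power x{pump:.1f}")
```

Under these assumptions the temperature benefit shrinks with each step up in flow while the pump cost grows much faster, which is the diminishing-returns pattern the paragraph describes.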

Integrated Thermal Management Design

The cooling system must be tightly integrated with the overall system design:

- Space Planning:

- Reserve sufficient space for cooling components

- Consider maintenance and upgrade access

- Optimize component layout to reduce heat accumulation

- Consider the impact of cable management on airflow

- Electrical Integration:

- Cooling system power supply and redundancy

- Control interface and monitoring integration

- Electromagnetic compatibility (EMC) considerations

- Grounding and safety design

- Noise Management:

- Fan selection and speed control

- Vibration isolation

- Acoustic treatment

- Noise measurement and optimization

Ready for the exciting part? Thermal management should not be an afterthought, but a core component of system design. In high-performance AI systems, thermal limitations often determine the ultimate performance ceiling of the system. For example, a well-designed 8-GPU server may provide 30-40% higher sustained computing performance than an equally configured system with poor cooling, even though the hardware specifications are exactly the same. This is because optimized thermal design allows the accelerator to maintain the highest frequency for a long time without triggering thermal throttling. This “heat first” design philosophy is becoming the standard approach for high-performance AI systems.

3. Selection and implementation of thermal solutions

Selecting and implementing appropriate thermal solutions is a key step in optimizing AI hardware performance, and requires making smart decisions based on specific needs and constraints.

Problem: There is no one-size-fits-all thermal solution, and it needs to be selected based on specific scenarios.

When planning AI system thermals, many organizations try to find the “best” solution, but in reality, the best choice is highly dependent on the specific application scenario, budget, space constraints, and performance requirements.

Aggravation: As AI hardware rapidly develops, thermal requirements are constantly changing, and solutions must be forward-looking.

More worryingly, today’s seemingly adequate thermal solutions may not meet the needs of the next generation of hardware. The TDP of AI accelerators typically increases by 30-50% per generation, which requires thermal solutions to have sufficient expansion margins.
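The compounding effect of that growth rate is easy to underestimate. The sketch below projects TDP over a few generations, assuming the 30-50% per-generation range quoted above holds and starting from a hypothetical 700 W part.

```python
def projected_tdp_w(current_tdp_w: float, growth_per_generation: float,
                    generations: int) -> float:
    """Compound a per-generation growth rate over a number of generations."""
    return current_tdp_w * (1.0 + growth_per_generation) ** generations


for gen in (1, 2):
    low = projected_tdp_w(700.0, 0.30, gen)
    high = projected_tdp_w(700.0, 0.50, gen)
    print(f"+{gen} generation(s): {low:.0f}-{high:.0f} W")
```

A cooling design intended to survive two hardware refreshes would, on these assumptions, need headroom for roughly double the current per-accelerator heat load.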

Solution: Understand the pros and cons of various cooling technologies and make smart choices based on your specific needs:

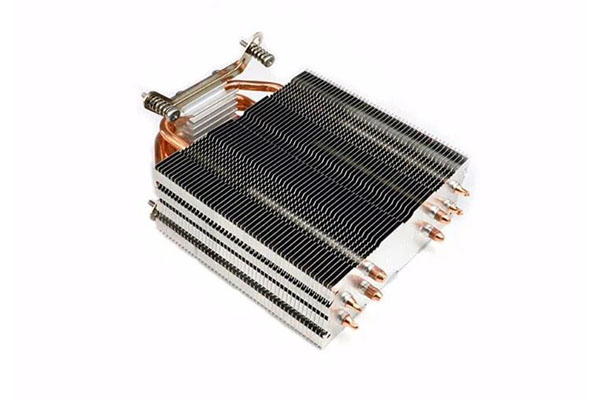

High-performance air cooling solutions

Despite the challenges, optimized air cooling systems are still suitable for many AI application scenarios:

- Advanced air cooling design:

- Large copper-bottom aluminum fin heat sinks

- High static pressure fan arrays

- Heat pipe and vapor chamber technology

- Optimized fin design and coating

- Applicable scenarios:

- Single or dual-GPU workstations

- Medium-density servers (10-20kW/rack)

- Temporary or mobile deployments

- Budget-constrained scenarios

- Implementation best practices:

- Ensure adequate heat sink size (at least 250-300% TDP)

- Use high-quality thermal interface materials

- Implement intelligent fan control

- Optimize chassis or server airflow

This is where things get interesting: Although air cooling systems face challenges at extremely high power densities, innovative designs continue to push their limits. For example, the latest air cooling solutions that combine heat pipes, vapor chambers, and efficient fin designs can effectively dissipate 400-500 watts of heat, enough to handle a single high-end AI accelerator. The key lies in system-level optimization—not just the heat sink itself, but also the airflow path, fan selection, and control strategy. A well-designed air cooling system may provide better performance than a system that simply piles up larger components.

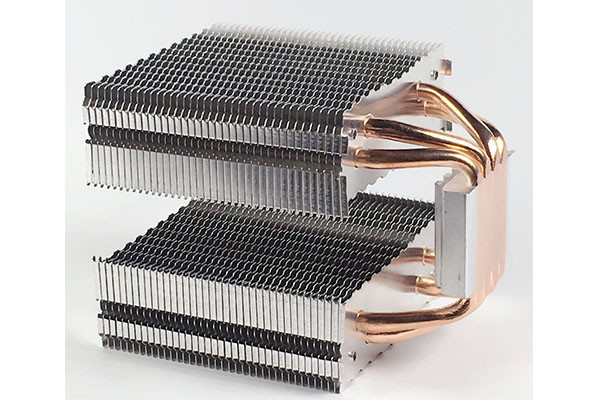

Direct Liquid Cooling Technology

Direct liquid cooling technology provides significantly higher heat dissipation efficiency by bringing the coolant directly to the heat source:

- Cold Plate Liquid Cooling:

- Metal cold plate directly contacts the chip

- Coolant circulates inside the cold plate

- Closed loop system, usually using water or water-glycol mixture

- Can cool a single accelerator of 600-1000 watts

- Microchannel Cooling:

- Cold plate contains micron-scale cooling channels inside

- Increase contact area and improve heat transfer

- May require higher pump pressures

- Provides extremely high heat dissipation density

- Distributed Liquid Cooling:

- Cools multiple components (GPU, CPU, memory, VRM)

- Parallel or series configurations

- Requires complex piping and connections

- Provides comprehensive system cooling

Comparison of Direct Liquid Cooling Technologies

| Technology Type | Cooling Capacity | Implementation Complexity | Cost | Best Application Scenarios |
|---|---|---|---|---|
| Standard cold plate | 600-800W/GPU | Medium | Medium | Multi-GPU server |
| Microchannel cold plate | 800-1200W/GPU | Medium-high | Medium-high | High-density computing node |
| Jet impingement cooling | 1000-1500W/GPU | High | High | Extreme performance requirements |
| Distributed liquid cooling | System-level cooling | High | Medium-high | Complete server cooling |

Immersion cooling technology

Immersion cooling provides the highest heat dissipation efficiency by immersing the entire system in coolant:

- Single-phase immersion cooling:

- Hardware is completely immersed in non-conductive coolant

- Coolant remains in liquid state and dissipates heat through convection

- Simple and reliable, relatively easy to maintain

- Suitable for high-density deployment

- Two-phase immersion cooling:

- Use low boiling point coolant

- Utilize phase change (liquid to gas) to improve heat transfer

- Provide the highest cooling efficiency

- Suitable for extreme density deployments

- Implementation considerations:

- Hardware compatibility verification

- Facility infrastructure requirements

- Maintenance and accessibility

- Personnel training and safety procedures

But here’s the fun part: immersion cooling not only provides higher cooling efficiency, it may also extend hardware life. Temperature fluctuations and hot spots in traditional air-cooled environments can cause components to age faster, while immersion cooling provides a more uniform and stable temperature environment. Some data center operators report a 20-30% reduction in hardware failure rates in immersion cooling systems, which may offset some of the initial investment costs. In addition, immersion systems eliminate fans, reducing vibration and dust accumulation, which are common causes of hardware failures.

Hybrid Cooling Approach

Hybrid cooling approaches combine the advantages of multiple technologies to provide optimized solutions for specific scenarios:

- Partial direct liquid cooling:

- Liquid-cools only the main heat sources (GPU/CPU)

- Uses traditional air cooling for other components

- Balances performance and implementation complexity

- Suitable for gradual transition to liquid cooling

- Auxiliary phase change cooling:

- Combines air cooling or liquid cooling with phase change materials

- Phase change materials absorb heat peaks

- Provides temperature stability

- Suitable for scenarios with large load fluctuations

- Modular cooling design:

- Supports coexistence of multiple cooling technologies

- Deploys different cooling methods on demand

- Provides technology upgrade paths

- Adapts to different density and budget requirements

Ready for the exciting part? A key advantage of hybrid cooling approaches is that they can provide a gradual upgrade path. For example, a data center can start with partial direct liquid cooling (cooling only GPUs) and gradually transition to full liquid cooling or immersion cooling as density increases without completely rebuilding the infrastructure. This “upgrade on demand” approach can spread the initial investment over a longer period of time while ensuring that the system can adapt to changing needs. Studies have shown that this incremental approach can reduce total cost of ownership (TCO) by 15-25% while reducing the risk of technology transitions.

4. Cooling System Monitoring and Optimization

Effective monitoring and continuous optimization are key to maintaining the long-term performance of AI hardware cooling systems, which can significantly improve system reliability and efficiency.

Problem: Static cooling solutions cannot adapt to the dynamic nature of AI workloads.

Many organizations neglect to continuously monitor and optimize cooling systems after initial deployment, resulting in performance degradation, reduced energy efficiency, and reliability issues.

Exacerbation: As hardware ages and workloads change, cooling requirements change.

More worryingly, cooling system performance may degrade over time as thermal interface materials age, coolant properties change, and fan efficiency drops. These changes can cause serious problems if they are not monitored and addressed.

Solution: Implement a comprehensive monitoring system and continuous optimization strategy:

Best Practices for Temperature Monitoring

Comprehensive temperature monitoring is the foundation of thermal management:

- Monitoring point selection:

- Chip internal temperature sensor

- Heat sink/cold plate temperature

- Inlet/outlet air temperature

- Ambient temperature

- Liquid cooling system inlet and outlet water temperature

- Monitoring frequency and accuracy:

- More frequent sampling at high loads

- Ensure sufficient temperature accuracy (±0.5°C)

- Record temperature trends and fluctuations

- Set appropriate alarm thresholds

- Visualization and analysis:

- Real-time temperature map

- Historical trend analysis

- Correlation analysis (load vs temperature)

- Anomaly detection algorithm

This is where things get interesting: modern AI accelerators often contain multiple internal temperature sensors, not a single reading. For example, NVIDIA A100 and H100 GPUs have up to 20 internal temperature sensors distributed in different areas. Accessing and analyzing this detailed data can provide deep insights into the thermal behavior of the chip, identifying local hot spots that may be masked by the overall temperature. Some advanced monitoring systems use this data to create real-time “heat maps” that show the temperature distribution inside the chip, helping to identify potential problems and optimize cooling strategies.
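As a starting point for collecting this kind of data on NVIDIA hardware, the sketch below polls `nvidia-smi` and parses its CSV output. It reads only the aggregate per-GPU values that `nvidia-smi` reports (temperature, SM clock, power), not individual on-die sensors, and it assumes every queried field returns a number; on some systems a field may come back as `[N/A]`, which this minimal parser does not handle. The `GpuSample` type and function names are illustrative.

```python
import subprocess
from typing import List, NamedTuple

QUERY_FIELDS = "index,temperature.gpu,clocks.sm,clocks.max.sm,power.draw"


class GpuSample(NamedTuple):
    index: int
    temp_c: float
    sm_clock_mhz: float
    max_sm_clock_mhz: float
    power_w: float


def parse_samples(csv_text: str) -> List[GpuSample]:
    """Parse 'csv,noheader,nounits' output, one GPU per line."""
    samples = []
    for line in csv_text.strip().splitlines():
        idx, temp, clk, clk_max, power = (f.strip() for f in line.split(","))
        samples.append(GpuSample(int(idx), float(temp), float(clk),
                                 float(clk_max), float(power)))
    return samples


def read_samples() -> List[GpuSample]:
    """Run nvidia-smi once and return one sample per GPU (requires an NVIDIA driver)."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_samples(result.stdout)


# Parsing a captured sample so the sketch runs without a GPU (made-up values).
example = "0, 71, 1755, 1980, 612.45\n1, 68, 1980, 1980, 598.10\n"
for sample in parse_samples(example):
    print(sample)
```

Calling `read_samples()` in a loop with a sleep between iterations gives a basic time series that can feed trend analysis or alerting.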

Performance-related monitoring

Temperature monitoring must be combined with performance indicators to fully understand the system status:

- Key performance indicators:

- Clock frequency (to detect thermal throttling)

- Power consumption (TDP utilization)

- Compute utilization

- Memory bandwidth

- Throughput and latency

- Thermal throttling detection:

- Automatically identify frequency reduction events

- Correlate temperature data

- Quantify performance impact

- Trigger optimization measures

- Workload characterization:

- Identify thermally intensive operations

- Analyze load pattern and temperature relationship

- Optimize workload scheduling

- Predict cooling needs
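The throttling detection steps above can be sketched as a pass over time-stamped samples that flags intervals where the clock is well below nominal while the temperature is high. The 85% frequency ratio and 85°C temperature are assumed defaults for illustration; the function name and sample layout are hypothetical.

```python
from typing import Iterable, List, Tuple

Sample = Tuple[float, float, float]  # (timestamp_s, temp_c, clock_mhz)


def find_thermal_throttle_events(samples: Iterable[Sample], nominal_mhz: float,
                                 freq_warn_ratio: float = 0.85,
                                 temp_warn_c: float = 85.0) -> List[Tuple[float, float]]:
    """Return (start, end) timestamps of runs where the clock is low AND the chip is hot."""
    events = []
    start = None
    last_ts = None
    for ts, temp_c, clock_mhz in samples:
        throttled = clock_mhz < freq_warn_ratio * nominal_mhz and temp_c > temp_warn_c
        if throttled and start is None:
            start = ts                       # a new event begins
        elif not throttled and start is not None:
            events.append((start, last_ts))  # event ended at the previous sample
            start = None
        last_ts = ts
    if start is not None:
        events.append((start, last_ts))      # still throttled at end of data
    return events


trace = [(0, 70, 1980), (1, 84, 1980), (2, 88, 1600), (3, 90, 1500),
         (4, 80, 1980), (5, 87, 1650), (6, 50, 300)]
print(find_thermal_throttle_events(trace, nominal_mhz=1980))
```

Requiring both conditions avoids flagging idle periods, where the clock is low simply because there is no work (the last sample in the trace).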

Cooling system monitoring indicators

| Monitoring category | Key indicators | Ideal range | Warning threshold | Monitoring frequency |
|---|---|---|---|---|
| Temperature monitoring | GPU core temperature | 65-80°C | >85°C | 1-5 seconds |
| Temperature monitoring | Memory temperature | 60-75°C | >80°C | 5-10 seconds |
| Temperature monitoring | Inlet/outlet temperature difference | 5-15°C | >20°C | 10-30 seconds |
| Performance monitoring | GPU frequency | 90-100% of nominal frequency | <85% | 1-5 seconds |
| Performance monitoring | Power consumption | Varies with workload | Sudden drop | 1-5 seconds |
| Performance monitoring | Computing efficiency | Application-dependent | Significant drop | 10-30 seconds |
| Cooling system | Fan speed | 40-70% | >85% | 5-10 seconds |
| Cooling system | Liquid cooling flow | 1.5-2.5 GPM/GPU | <1.2 GPM | 10-30 seconds |
| Cooling system | Liquid cooling temperature difference | 5-10°C | <3°C or >15°C | 10-30 seconds |

Cooling system optimization strategy

Continuous optimization based on monitoring data can significantly improve cooling system performance:

- Active cooling control:

- Fan control based on workload prediction

- Dynamic adjustment of liquid cooling system flow and temperature

- Adaptive power capping

- Thermally aware workload scheduling

- Regular maintenance optimization:

- Thermal interface material replacement plan

- Fan cleaning and performance testing

- Liquid cooling system flushing and treatment

- Airflow path inspection and optimization

- System-level adjustment:

- Optimize BIOS/firmware settings

- Power and temperature limit fine-tuning

- Fan curve customization

- Workload distribution optimization

But here’s an interesting phenomenon: cooling optimization is not only about hardware, but also about software and workload management. For example, some AI training frameworks allow the implementation of a “thermal-aware training” strategy that automatically reduces the batch size or numerical precision when the temperature approaches a threshold, lowering thermal output while minimizing the performance impact. This combination of software and hardware can reduce thermal throttling events by 80-90% while sacrificing only 5-10% of training speed. In contrast, passive thermal throttling can result in a 20-30% performance loss.
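One way such a policy might look is a small controller that halves the batch size when the GPU nears a soft temperature limit and restores it once the chip has cooled. This is a hypothetical sketch, not the API of any training framework; the thresholds, batch limits, and function name are all assumptions, and changing batch size mid-run may also call for adjusting the learning rate.

```python
def next_batch_size(current: int, temp_c: float, *,
                    soft_limit_c: float = 80.0,   # assumed: back off before throttling starts
                    resume_c: float = 72.0,       # assumed: safe to ramp up again
                    min_batch: int = 8,
                    max_batch: int = 256) -> int:
    """Return the batch size for the next step given the latest temperature reading."""
    if temp_c >= soft_limit_c:
        return max(min_batch, current // 2)   # shed load
    if temp_c <= resume_c:
        return min(max_batch, current * 2)    # recover throughput
    return current                            # inside the hysteresis band: hold


batch = 128
for temp in (78.0, 83.0, 76.0, 70.0):
    batch = next_batch_size(batch, temp)
    print(f"{temp:.0f} C -> batch size {batch}")
```

The gap between the two thresholds acts as hysteresis, so the batch size does not oscillate when the temperature hovers near a single limit.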

Fault Detection and Prevention

Proactive fault detection and prevention can avoid costly downtime and hardware damage:

- Early Warning System:

- Machine Learning-based Anomaly Detection

- Temperature Pattern Change Identification

- Performance Degradation Trend Analysis

- Predictive Maintenance Alerts

- Fail-Safe Mechanism:

- Automatic Power Limitation

- Emergency Cooling Mode

- Safe Shutdown Procedure

- Redundant System Switchover

- Root Cause Analysis:

- Detailed Event Logging

- Temperature and Performance Data Correlation

- System Behavior Reconstruction

- Preventive Action Implementation

Ready for the exciting part? Predictive thermal management is becoming a key differentiator for high-value AI systems. Machine learning models trained with historical data can detect subtle temperature and performance pattern changes that may indicate impending problems. For example, a leading AI research organization reported that their predictive system was able to detect thermal anomalies an average of 7-10 days before actual failures occurred, providing ample time for preventive maintenance and avoiding dozens of potential training interruptions. Considering that the cost of training large AI models can run into hundreds of thousands of dollars, the ROI of this preventative approach is extremely high.
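Production systems of the kind described here typically rely on trained models, but the underlying idea can be shown with a much simpler stand-in: a rolling z-score that flags readings far outside the recent norm. The sketch below is that simplified substitute, not a machine learning model; the window size and threshold are assumed values.

```python
from collections import deque
from statistics import mean, pstdev
from typing import List, Sequence


def rolling_zscore_anomalies(readings: Sequence[float], window: int = 30,
                             threshold: float = 3.0) -> List[int]:
    """Return indices of readings more than `threshold` std devs from the rolling mean."""
    history = deque(maxlen=window)
    anomalies = []
    for i, value in enumerate(readings):
        if len(history) == window:           # only score once the window is full
            mu = mean(history)
            sigma = pstdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        history.append(value)
    return anomalies


# Synthetic trace: steady readings alternating 70.0/70.5 C, then a jump to 78 C.
trace = [70.0 + (i % 2) * 0.5 for i in range(40)] + [78.0]
print(rolling_zscore_anomalies(trace))
```

A perfectly flat window has zero standard deviation and is skipped, so in practice a small noise floor or a minimum sigma would be worth adding.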

5. Future cooling technologies and trends

AI hardware cooling is experiencing rapid innovation, and understanding emerging technologies and trends is critical to developing forward-looking cooling strategies.

Problem: Current cooling technologies may not meet the needs of next-generation AI hardware.

As AI accelerator power continues to grow, it is expected that the TDP of a single chip may reach 1000-1500 watts in the next five years, which will exceed the capabilities of many existing cooling solutions.

Exacerbation: The evolution of chip architecture and packaging technology creates new cooling challenges.

More worryingly, emerging technologies such as 3D stacking, chip-level interconnects, and heterogeneous integration are changing thermal distribution characteristics, creating unprecedented cooling challenges such as interlayer heat conduction and cooling of buried hot spots.

Solution: Explore cutting-edge cooling technologies and trends to prepare for future challenges:

Chip-level integrated cooling

Chip-level integrated cooling represents a major paradigm shift in cooling technology:

- Silicon microchannel cooling:

- Cooling channels are etched directly into the silicon

- Eliminate thermal interface materials

- Significantly reduce thermal resistance

- Support very high power density

- Backside cooling:

- Dissipate heat from the back of the chip via through-silicon vias (TSVs)

- Allow for double-sided cooling

- Compatible with 3D stacking architectures

- Increase heat dissipation area

- Embedded heat pipes and vapor chambers:

- Phase change cooling structures are integrated into the package

- Improve heat spreading efficiency

- Reduce hot spots

- Reduce overall thermal resistance

This is where things get interesting: Chip-level integrated cooling not only improves performance, it also has the potential to change the way chips are designed. When cooling becomes an intrinsic part of chip design rather than an external system, architects can rethink power distribution and thermal density constraints. For example, companies such as Intel and IBM are working on “thermally aware” chip designs that dynamically adjust power allocation to different areas based on cooling capabilities. This co-design approach could potentially improve chip performance by 20-30% while maintaining the same overall thermal output.

New heat dissipation materials and interfaces

Innovations in materials science are driving breakthroughs in heat dissipation performance:

- Graphene and carbon nanotube materials:

- Thermal conductivity is 5-10 times that of copper

- Lightweight and flexible

- Can form composite materials and coatings

- Suitable for a variety of heat dissipation applications

- Liquid metal thermal interface:

- Thermal conductivity is 5-10 times that of traditional silicone grease

- Perfectly fits the contact surface

- Reduces interface thermal resistance by 70-80%

- Suitable for high-end AI accelerators

- Phase change material (PCM) innovation:

- Intelligent temperature response material

- High heat capacity buffers thermal peaks

- Self-healing and long-life formulations

- Works in synergy with other cooling technologies

Comparison of emerging heat dissipation technologies

| Technology category | Thermal performance improvement | Expected commercialization time | Main advantages | Main challenges |
|---|---|---|---|---|
| Silicon microchannel cooling | 50-100% | 3-5 years | Very low thermal resistance | Manufacturing complexity |
| Backside cooling of chips | 30-60% | 2-4 years | Double-sided heat dissipation | Package compatibility |
| Graphene composites | 40-80% | Early products available | Lightweight and efficient | Mass production |
| Liquid metal TIM | 50-70% | Commercially available | Very low interfacial thermal resistance | Difficulty of application |
| Phase change smart materials | 20-40% | 1-3 years | Temperature stability | Long-term reliability |

Intelligent thermal management system

AI-driven intelligent thermal management is revolutionizing the way cooling systems operate:

- AI-optimized cooling control:

- Machine learning predicts thermal load

- Adaptive cooling parameter adjustment

- Multivariable optimization algorithm

- Continuous learning and improvement

- Digital twin technology:

- Real-time thermal model simulation

- Virtual sensing and prediction

- What-if scenario analysis

- Optimization strategy testing

- Distributed intelligent control:

- Edge computing thermal management

- Autonomous decision-making unit

- Inter-system collaborative optimization

- Fault self-healing capability

But here’s the interesting thing: the value of intelligent cooling systems grows exponentially with scale and complexity. In small deployments, simple control strategies may be sufficient; but in large AI clusters, AI-driven optimization may discover complex patterns and optimization opportunities that are difficult for human operators to identify. For example, Google reported that after implementing DeepMind AI control systems in its data centers, cooling energy consumption was reduced by 40% while improving temperature stability. This “AI cooling AI” approach represents a powerful synergy that may become the standard for future high-performance computing facilities.

Sustainable Cooling Trends

Sustainability considerations are reshaping the direction of cooling technology:

- Energy efficiency innovations:
  - Ultra-efficient cooling system design
  - Energy recovery technology
  - Low power consumption control systems
  - Passive and hybrid cooling methods
- Waste heat utilization technology:
  - High-efficiency heat exchangers
  - Cascade heat utilization systems
  - Thermoelectric conversion technology
  - Integration with building systems
- Environmentally friendly materials and refrigerants:
  - Low GWP (global warming potential) refrigerants
  - Bio-based and recyclable materials
  - Non-toxic coolants
  - Closed-loop resource circulation

Ready for the exciting part? Sustainable cooling is not only an environmental responsibility; it can also be an economic advantage. As energy costs rise and carbon pricing mechanisms expand, the return on investment for efficient cooling systems is improving. For example, a liquid-cooled data center with waste heat recovery may save 30-50% in energy costs compared to a traditional air-cooled facility while creating an additional revenue stream from the recovered heat. In addition, some regions have begun to implement data center carbon emission limits, turning sustainable cooling from a “good practice” into a regulatory necessity. These economic and regulatory trends are accelerating the adoption of sustainable cooling technology, which is expected to become mainstream within the next five years.

FAQ

Q1: How do I determine what type of cooling solution my AI system needs?

Determining the best cooling solution for an AI system requires weighing four factors.

First, evaluate the thermal load characteristics, including total power (TDP), power density, hot spot distribution, and load profile. For example, a single-GPU workstation (300-400W) can usually use high-performance air cooling, a 4-8 GPU server (1200-3200W) may require direct liquid cooling, and a high-density AI cluster (>30kW/rack) may require immersion cooling.

Second, consider the deployment environment, including space constraints, noise requirements, existing infrastructure, and ambient conditions. An office environment may prioritize low-noise solutions, while a data center is usually more concerned with density and efficiency.

Third, evaluate performance requirements, including whether sustained maximum performance is needed, how stable temperatures must be, and whether overclocking is planned.

Finally, consider the total cost of ownership (TCO), including initial investment, operating costs, maintenance requirements, and expected service life. For most enterprise AI deployments, a phased approach is recommended: start with a conservative design that leaves sufficient headroom for expansion, then optimize based on actual usage data. For critical systems, thermal simulations and small-scale testing can provide valuable evidence for the decision and help avoid costly mistakes.
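As a first-pass illustration of the thermal load step, the sketch below encodes the power thresholds quoted in this answer as a simple lookup. The function name is hypothetical, and the result for systems between 400W and 1200W is an assumption, since the answer gives no guidance for that range. It is a starting point for discussion, not a sizing tool.

```python
def suggest_cooling(system_power_w: float, rack_power_kw: float = 0.0) -> str:
    """Return a rough cooling category from the thresholds quoted in the text."""
    if rack_power_kw > 30.0:
        return "immersion cooling"              # high-density cluster (>30kW/rack)
    if system_power_w >= 1200.0:
        return "direct liquid cooling"          # 4-8 GPU server class
    if system_power_w <= 400.0:
        return "high-performance air cooling"   # single-GPU workstation class
    # Assumption: the text does not cover 400-1200W, so flag it for review.
    return "air or liquid cooling (evaluate case by case)"


for power_w, rack_kw in [(350, 0), (2400, 0), (2400, 40), (800, 0)]:
    print(power_w, rack_kw, "->", suggest_cooling(power_w, rack_kw))
```

Environment, performance requirements, and TCO still need to be weighed separately; the lookup only covers the thermal load factor.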

Q2: What are the main advantages and challenges of liquid cooling systems compared to traditional air cooling?

Liquid cooling systems have significant advantages and specific challenges compared to traditional air cooling.

The main advantages include:

- Higher heat dissipation efficiency: the volumetric heat capacity of water is roughly 3,500 times that of air, which lets liquid cooling systems remove heat far more effectively.
- More stable temperatures: fewer hot spots and smaller temperature fluctuations improve the consistency of AI training.
- Significantly reduced noise: fan noise is eliminated or reduced.
- Support for higher density deployment: single-rack power can reach 50-100kW, 3-5 times that of traditional air cooling.
- Higher energy efficiency: PUE can drop from 1.6-2.0 to 1.1-1.3.
- Higher waste heat quality: waste heat at 45-60°C is more suitable for recovery.

The main challenges include:

- Higher initial cost: system investment may be 2-3 times that of air cooling.
- Increased implementation complexity: design and installation require specialized expertise.
- Leakage risk: appropriate detection and protection measures are required.
- Maintenance and accessibility: some components may be more difficult to access.
- Hardware compatibility: not all servers are designed for liquid cooling.
- Facility requirements: additional infrastructure such as water pipes and heat exchangers may be needed.

For most high-performance AI deployments, the long-term advantages of liquid cooling usually outweigh these challenges, especially when energy cost savings, density increases, and performance improvements are taken into account. The payback period is usually 2-4 years, depending on energy costs and utilization.
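The PUE and payback figures above can be related with simple arithmetic: total facility energy is IT energy multiplied by PUE, so the annual saving is proportional to the PUE difference. The sketch below works one example. The 1,000kW IT load, $0.10/kWh electricity price, and $1,000,000 extra capital cost are hypothetical inputs chosen for illustration, and the function names are assumptions.

```python
HOURS_PER_YEAR = 8760


def annual_facility_cost(it_load_kw: float, pue: float,
                         price_per_kwh: float) -> float:
    """Yearly electricity cost: IT energy scaled by PUE, times the tariff."""
    return it_load_kw * HOURS_PER_YEAR * pue * price_per_kwh


def payback_years(extra_capex: float, it_load_kw: float, pue_air: float,
                  pue_liquid: float, price_per_kwh: float) -> float:
    """Years needed for energy savings to repay the extra liquid-cooling cost."""
    savings = (annual_facility_cost(it_load_kw, pue_air, price_per_kwh)
               - annual_facility_cost(it_load_kw, pue_liquid, price_per_kwh))
    if savings <= 0:
        raise ValueError("liquid cooling must lower PUE to pay back")
    return extra_capex / savings


# Hypothetical facility: 1,000kW IT load, PUE 1.6 -> 1.2, $0.10 per kWh.
years = payback_years(1_000_000, 1000.0, 1.6, 1.2, 0.10)
print(f"estimated payback: {years:.1f} years")
```

With these inputs the saving is about $350,000 per year, which puts the payback just under three years. The model ignores density gains, maintenance differences, and waste heat revenue, all of which would shift the result.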

Q3: How does temperature fluctuation affect AI training performance and results?

Temperature fluctuations affect AI training performance and results in several ways.

First, there is a direct performance impact. Modern AI accelerators automatically reduce clock speeds when they reach a temperature threshold (usually 85-95°C). This thermal throttling may cause a 15-30% performance drop, directly extending training time. For example, a training task that originally took 7 days may be extended to roughly 8-10 days.

Second, training stability can suffer. Temperature fluctuations may lead to inconsistent calculations, affecting optimizer behavior and convergence. Studies have shown that models trained in temperature-unstable environments may have a final test accuracy that is 1-2 percentage points lower, which can be a significant difference in highly competitive fields.

Third, batch size may be constrained. To avoid thermal throttling, the batch size may need to be reduced, which can affect model convergence characteristics and final quality.

Finally, hardware reliability is affected. Frequent temperature cycling increases physical stress, which may cause microcracks, solder joint fatigue, and interconnect failure, increasing the risk of training interruption.

The best practice is to maintain a stable temperature environment, ideally with fluctuations within ±5°C. This usually requires an efficient heat dissipation system and intelligent temperature control strategies, such as predictive fan control or liquid cooling. For critical training tasks, it is also important to monitor and record temperature data, which can help explain training anomalies and improve future deployments.
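Two of the numbers in this answer are easy to check with a short sketch: how much a throttling-induced slowdown stretches a training run, and whether a logged temperature trace stays inside the ±5°C band. The function names and sample temperatures are hypothetical, the slowdown is assumed to apply for the whole run, and the band is measured around the mean of the trace.

```python
from typing import Sequence


def throttled_duration_days(baseline_days: float, perf_drop: float) -> float:
    """Run time when throughput falls by perf_drop (0-1) for the entire run."""
    if not 0.0 <= perf_drop < 1.0:
        raise ValueError("perf_drop must be in [0, 1)")
    return baseline_days / (1.0 - perf_drop)


def within_band(temps_c: Sequence[float], band_c: float = 5.0) -> bool:
    """True if every sample is within band_c degrees of the trace mean."""
    mean_c = sum(temps_c) / len(temps_c)
    return all(abs(t - mean_c) <= band_c for t in temps_c)


for drop in (0.15, 0.30):
    print(f"{drop:.0%} drop: {throttled_duration_days(7.0, drop):.1f} days")

logged_temps_c = [70.0, 72.0, 74.0, 71.0]  # hypothetical GPU temperature log
print("stable" if within_band(logged_temps_c) else "unstable")
```

A sustained 15% drop stretches a 7-day run to about 8.2 days and a 30% drop to 10 days. Real runs throttle intermittently, so actual slowdowns depend on how much of the run is spent above the threshold.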

Q4: How can I optimize the heat dissipation performance of existing AI systems without completely replacing the hardware?

There are several cost-effective ways to optimize the thermal performance of existing AI systems:

- Upgrade the thermal interface material. Replacing standard thermal paste with a high-performance thermal compound or liquid metal can reduce the interface thermal resistance by 30-80%, lowering the chip temperature by 5-15°C. This is one of the optimizations with the highest return on investment, and the cost is usually $10-50 per GPU.
- Optimize airflow. Add or reposition air baffles, seal airflow leaks, and tidy cable management. These improvements may reduce temperatures by 3-8°C at very low cost.
- Upgrade fans and fan control. Switching to high static pressure fans and implementing smarter fan control curves can increase airflow by 20-40% at the same noise level.
- Enhance the heat sink. Adding heat pipes or vapor chambers, applying thermally conductive coatings, or simply increasing the size of the heat sink can improve heat dissipation efficiency by 15-30%.
- Optimize software. Implement power caps, optimize workload scheduling, and use thermally aware training techniques. These methods can reduce heat output without changing training results.
- Optimize the environment. Reduce the ambient temperature, improve rack or chassis ventilation, and optimize equipment layout to reduce hot air recirculation.

These optimizations can often be combined, with significant cumulative effects. For example, a comprehensively optimized system may reduce peak temperatures by 15-25°C, significantly reducing thermal throttling and improving performance, with a payback period typically within months.
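The link between interface thermal resistance and chip temperature follows from the standard steady-state relation: temperature rise equals power multiplied by thermal resistance. The sketch below applies it to a thermal interface material upgrade. The 400W power draw, the 0.04°C/W starting interface resistance, and the function name are illustrative assumptions, not measured values.

```python
def tim_temperature_drop_c(power_w: float, r_interface_c_per_w: float,
                           reduction: float) -> float:
    """Chip temperature drop when interface resistance falls by `reduction` (0-1).

    Uses delta_T = P * delta_R, assuming power and all other resistances
    in the heat path stay unchanged.
    """
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be in [0, 1]")
    return power_w * r_interface_c_per_w * reduction


# Hypothetical 400W GPU with 0.04 C/W of interface resistance.
for reduction in (0.3, 0.5, 0.8):
    drop_c = tim_temperature_drop_c(400.0, 0.04, reduction)
    print(f"{reduction:.0%} lower interface resistance: {drop_c:.1f} C cooler")
```

Under these assumptions a 30-80% reduction yields roughly 5-13°C, in line with the range quoted above. The benefit scales with power, so higher-wattage accelerators gain more from the same material upgrade.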

Q5: What are the main trends in AI hardware cooling in the next five years?

The main trends in AI hardware cooling over the next five years fall into four areas.

Technology integration: chip-level integrated cooling will build cooling functions directly into chip design and packaging, such as silicon microchannels, chip backside cooling, and embedded heat pipes, which are expected to be commercialized within 3-5 years. Heterogeneous cooling will apply different cooling methods to different components to create highly customized system-level solutions. Modular liquid cooling will simplify deployment and maintenance and provide plug-and-play solutions.

Material innovation: nanomaterials such as graphene, carbon nanotubes, and nanofluids will provide breakthrough thermal performance, phase-change smart materials will automatically adjust their properties based on temperature, and environmentally friendly materials will reduce environmental impact.

Intelligent management: AI-driven predictive cooling will use machine learning to optimize cooling parameters, digital twin technology will provide real-time simulation and optimization, and edge intelligence will enable distributed cooling decisions.

Sustainability: waste heat recovery will become standard practice, closed-loop water systems will minimize water consumption, and energy optimization will further reduce cooling energy consumption.

Together, these trends point to a more integrated, smarter, and more sustainable cooling future, with AI hardware cooling efficiency expected to increase 2-3 times by 2030 while energy consumption and environmental impact fall significantly. For organizations, the key is to take a forward-looking approach, choose cooling solutions with upgrade paths, and keep a close eye on innovations in this rapidly evolving field.