Introduction

The artificial intelligence revolution has fundamentally transformed the landscape of computing hardware, with graphics processing units (GPUs) emerging as the cornerstone of AI infrastructure. As AI models grow increasingly complex and computationally intensive, the thermal challenges associated with high-performance GPUs have reached unprecedented levels. This comprehensive article explores the evolution of GPU cooling technology in response to the demands of the AI era, examining how cooling solutions have adapted to enable the next generation of artificial intelligence.

The AI-Driven GPU Revolution

The rise of artificial intelligence has catalyzed a fundamental shift in GPU design, capability, and thermal characteristics.

Problem: The computational demands of modern AI have driven GPU manufacturers to create increasingly powerful processors that generate unprecedented levels of heat.

Today’s AI-focused GPUs like NVIDIA’s H100 or AMD’s MI300 can consume 400-700 watts of power, roughly two to three times what high-end gaming GPUs required just a few years ago. This dramatic increase in power consumption creates thermal challenges that traditional cooling approaches struggle to address effectively.

Aggravation: The trend toward higher GPU power consumption shows no signs of abating, with next-generation AI accelerators potentially exceeding 1000W.

Further complicating matters, AI workloads typically maintain GPUs at near 100% utilization for extended periods—sometimes weeks or months—creating sustained thermal loads fundamentally different from gaming or general computing workloads with their variable utilization patterns.

Solution: A new generation of cooling technologies has evolved specifically to address the thermal challenges of AI-focused GPUs:

The Transformation of GPU Architecture

Understanding how AI has reshaped GPU design:

- From Gaming to AI Computing:
  - Early GPUs (2000-2010): Primarily designed for graphics rendering
  - Transition era (2010-2015): General-purpose GPU computing emerges
  - AI acceleration era (2015-present): Specialized AI architecture development
  - Architectural optimizations for matrix operations
  - Dedicated tensor cores for AI workloads
- The Scale of Modern AI GPUs:
  - Die size expansion (300mm² to 800mm²+)
  - Transistor count growth (from billions to over 100 billion)
  - Memory capacity and bandwidth increases
  - Multi-chip module implementations
  - Specialized interconnect technologies
- Power Consumption Trajectory:
  - Early CUDA GPUs (2010-2015): 150-250W TDP
  - Early AI-focused GPUs (2016-2018): 250-300W TDP
  - Middle AI GPU Era (2019-2021): 300-400W TDP
  - Current AI GPU Era (2022-2024): 350-700W TDP
  - Projected Next-Gen (2025+): 600-1000W+ TDP

Here’s what makes this fascinating: AI GPU power draw has grown far faster than the historical norm for computing hardware. While traditional computing hardware typically sees 15-20% power increases per generation, AI accelerators have experienced 50-100% TDP increases across recent generations. This accelerated thermal evolution reflects a fundamental shift in design philosophy, where performance is prioritized even at the cost of significantly higher power consumption and thermal output.
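
The era-over-era growth implied by the TDP ranges listed above can be checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes the upper bound of each range stands in for that era's flagship parts, and the `ERAS` table and `era_growth` function are names introduced here.

```python
# Upper-bound TDP (watts) for each era, taken from the ranges listed above.
# Using the upper bound as the "flagship" figure is an assumption.
ERAS = [
    ("Early CUDA GPUs (2010-2015)", 250),
    ("Early AI-focused GPUs (2016-2018)", 300),
    ("Middle AI GPU era (2019-2021)", 400),
    ("Current AI GPU era (2022-2024)", 700),
    ("Projected next-gen (2025+)", 1000),
]


def era_growth(eras):
    """Return (era name, percent TDP increase over the previous era)."""
    growth = []
    for (_, prev_tdp), (name, tdp) in zip(eras, eras[1:]):
        growth.append((name, round(100 * (tdp - prev_tdp) / prev_tdp, 1)))
    return growth


for name, pct in era_growth(ERAS):
    print(f"{name}: +{pct}%")
```

On these upper bounds, the step into the current era is +75%, inside the 50-100% band cited above, while the earlier steps are noticeably smaller.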

The Economic Value of AI Computation

Understanding the business drivers behind cooling innovation:

- AI Training Economics:
  - Large model training costs: $100,000 to $10+ million
  - Training time impact on time-to-market
  - Computational capacity as competitive advantage
  - Research and development velocity dependencies
  - Model quality correlation with computational resources
- AI Inference Value Proposition:
  - Real-time response requirements
  - Throughput economics for cloud providers
  - Edge deployment thermal constraints
  - Service level agreement implications
  - Operational cost sensitivity
- The Cost of Thermal Limitations:
  - Performance degradation from throttling (10-30%)
  - Extended training times increasing costs
  - Reduced hardware lifespan from thermal stress
  - Density constraints limiting computational capacity
  - Opportunity costs of delayed AI implementation

But here’s an interesting phenomenon: The economic value of AI computation has created unprecedented incentives for solving thermal limitations. While cooling was historically viewed as a necessary but low-value expense, it has become a strategic investment with significant ROI potential in the AI era. Organizations are now willing to invest substantially more in cooling infrastructure because the opportunity cost of computational limitations far exceeds the premium paid for advanced cooling technologies.
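
To make the cost of throttling concrete, the 10-30% performance degradation listed above can be translated into extra training time and spend. This is a rough sketch under two stated simplifications: cost is proportional to wall-clock time, and throttling reduces throughput uniformly for the whole run. The $1M, 30-day run is hypothetical, and `throttled_run` is a name introduced here.

```python
def throttled_run(base_cost_usd, base_days, throughput_loss):
    """Scale cost and duration of a training run for a given throughput loss.

    Assumes cost is proportional to wall-clock time and that throttling
    reduces throughput uniformly for the whole run (both simplifications).
    """
    if not 0 <= throughput_loss < 1:
        raise ValueError("throughput_loss must be in [0, 1)")
    # Losing a fraction p of throughput stretches the run by 1 / (1 - p).
    slowdown = 1 / (1 - throughput_loss)
    return base_cost_usd * slowdown, base_days * slowdown


# Hypothetical run: $1M and 30 days when unthrottled.
for loss in (0.10, 0.30):
    cost, days = throttled_run(1_000_000, 30, loss)
    print(f"{loss:.0%} throttling: ${cost:,.0f}, {days:.1f} days")
```

Because the slowdown is 1 / (1 - loss), a 10% throughput loss adds about 11% to cost and duration, and a 30% loss adds about 43%.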

The Cooling Technology Imperative

Recognizing cooling as a critical enabler of AI advancement:

- Performance Preservation:
  - Thermal throttling prevention
  - Clock speed stability maintenance
  - Memory bandwidth protection
  - Consistent computational throughput
  - Benchmark performance achievement
- Reliability and Longevity:
  - Temperature impact on failure rates
  - Thermal cycling reduction
  - Component lifespan extension
  - Error rate minimization
  - Investment protection
- Density and Scaling Enablement:
  - Multi-GPU configurations
  - High-density server designs
  - Rack-level thermal management
  - Data center capacity optimization
  - Computational capacity per square foot

The Evolution of GPU Cooling Requirements

| Era | Typical GPU TDP | Primary Cooling Approach | Cooling Challenges | Performance Impact of Inadequate Cooling |
|---|---|---|---|---|
| Gaming Era (2000-2010) | 100-250W | Air cooling with heat pipes | Noise, limited density | Occasional throttling, primarily in summer |
| Early GPGPU (2010-2015) | 150-300W | Advanced air, early liquid | Multi-GPU configurations, workstation density | Periodic throttling under sustained loads |
| Early AI (2015-2018) | 250-350W | Liquid cooling adoption | Data center deployment, rack density | 10-15% performance impact, reliability concerns |
| Modern AI (2019-2022) | 300-450W | Direct liquid cooling | Scaling to large clusters, efficiency | 15-25% performance impact, significant throttling |
| Current AI (2022-2024) | 400-700W | Advanced liquid, immersion | Extreme density, facility limitations | 20-40% performance impact, thermal design dominance |
| Next-Gen AI (2025+) | 600-1000W+ | Immersion, two-phase, emerging | Power delivery, facility constraints | Potentially unusable without advanced cooling |

Ready for the fascinating part? GPU cooling technology has arguably changed more in the past five years than in the previous twenty. The driver is the economic value of AI computation, which rewards removing any thermal limit on AI performance. Organizations at the cutting edge now build cooling technology roadmaps that plan for multiple technology transitions within a single hardware generation, fundamentally changing how cooling infrastructure is designed and deployed.

Traditional GPU Cooling: Origins and Limitations

To understand the current state of GPU cooling technology, it’s essential to examine its origins and the limitations that have driven innovation.

Problem: Traditional GPU cooling approaches were designed for consumer graphics cards with substantially different thermal characteristics than modern AI accelerators.

Conventional GPU cooling was optimized for processors with moderate heat density, variable workloads, and consumer form factors—a far cry from the sustained high-power operation of data center AI accelerators.

Aggravation: The physical form factors and mounting requirements of data center GPUs differ significantly from consumer cards, complicating cooling design and implementation.

Further complicating matters, data center GPUs feature varying die sizes, component layouts, and mounting patterns across different models and generations, requiring cooling solutions that can adapt to these differences while maintaining optimal performance.

Solution: Understanding the evolution and limitations of traditional cooling approaches provides context for the innovations driving modern GPU cooling technology:

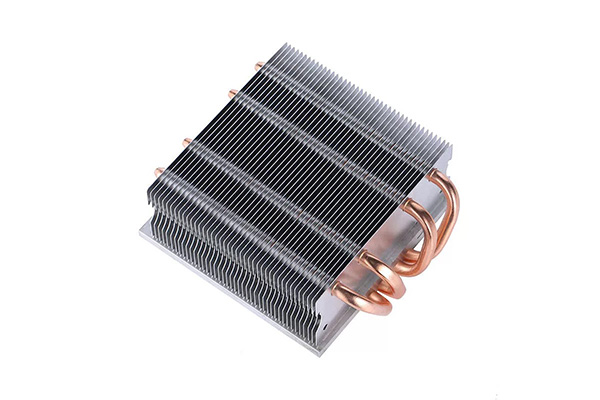

The Consumer GPU Cooling Legacy

Tracing the development of early cooling solutions:

- Passive Cooling Era (Pre-2000):
  - Simple aluminum heatsinks
  - Natural convection cooling
  - Limited thermal capacity (30-50W)
  - Minimal surface area optimization
  - Basic thermal interface materials
- Active Air Cooling Evolution (2000-2010):
  - Single fan implementations
  - Basic heat pipe introduction
  - Copper base plate adoption
  - Fin density optimization
  - Noise management considerations
- Advanced Air Cooling Refinement (2010-2020):
  - Multi-fan configurations
  - Advanced heat pipe arrays
  - Vapor chamber implementation
  - Computational fluid dynamics optimization
  - Acoustic engineering advancements

Here’s what makes this fascinating: The heat flux that AI accelerator cooling must handle can exceed that of traditional GPU cooling by 2-3x. While a gaming GPU might generate 0.3-0.5 W/mm², modern AI GPUs can produce 0.5-1.0 W/mm², requiring fundamentally different approaches to heat capture and dissipation. This heat density differential has driven a complete rethinking of GPU cooling design, with solutions that would have been considered excessive for gaming becoming baseline requirements for AI accelerators.
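
Heat density figures like these come from dividing power by die area. The sketch below shows the arithmetic. The power and area pairs are illustrative values chosen within the ranges mentioned in this article, not measurements of specific products, and `heat_flux_w_per_mm2` is a name introduced here.

```python
def heat_flux_w_per_mm2(power_w, die_area_mm2):
    """Average heat flux over the die, in W/mm^2."""
    if die_area_mm2 <= 0:
        raise ValueError("die_area_mm2 must be positive")
    return power_w / die_area_mm2


# Illustrative power/area pairs, not measurements of specific products.
examples = {
    "gaming-class (250 W, 600 mm^2)": (250, 600),
    "AI accelerator (700 W, 800 mm^2)": (700, 800),
}
for label, (power, area) in examples.items():
    print(f"{label}: {heat_flux_w_per_mm2(power, area):.2f} W/mm^2")
```

These are die-wide averages. Real dies have hot spots where local flux is higher, so the average understates the difficulty.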

The Data Center GPU Transition

Understanding the shift to enterprise-grade cooling:

- Early Data Center GPU Cooling (2010-2015):
  - Passive server-grade heatsinks
  - High static pressure server fans
  - Blower-style coolers for density
  - Basic thermal management
  - Limited thermal monitoring
- Rack-Level Cooling Considerations:
  - Airflow management challenges
  - Front-to-back cooling constraints
  - Density limitations (4-8 GPUs per server)
  - Power and cooling balance
  - Hot spot management
- Facility-Level Thermal Challenges:
  - Air handling capacity constraints
  - Temperature rise in data halls
  - Cooling infrastructure limitations
  - Energy efficiency concerns
  - Scaling difficulties for large deployments

But here’s an interesting phenomenon: The cooling challenge for AI accelerators represents a fundamental inversion of traditional computing heat patterns. In traditional systems, CPUs typically generate 60-70% of the total heat, with GPUs as secondary contributors. In modern AI systems, GPUs often account for 70-80% of the total thermal output, with CPUs reduced to a secondary heat source despite their own substantial thermal output. This inversion requires a complete rethinking of system thermal design, with cooling resources allocated proportionally to this new heat distribution.
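
A quick way to see this inversion is to add up the heat sources in a single server. The sketch below uses a hypothetical 8-GPU training node. Every wattage in it is an assumption chosen for illustration, and `heat_share` is a name introduced here.

```python
def heat_share(components):
    """Map each component group to its fraction of total system heat.

    `components` maps a name to (count, watts_each).
    """
    totals = {name: count * watts for name, (count, watts) in components.items()}
    system_total = sum(totals.values())
    return {name: watts / system_total for name, watts in totals.items()}


# Hypothetical 8-GPU training server; all wattages are assumptions.
server = {
    "gpus": (8, 700),
    "cpus": (2, 350),
    "other (memory, NICs, fans, storage)": (1, 1000),
}
for name, share in heat_share(server).items():
    print(f"{name}: {share:.0%}")
```

With these assumed figures the GPUs account for about 77% of the heat, in line with the 70-80% range above, even though the two CPUs together still dissipate 700W.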

Physical and Practical Limitations

Identifying the constraints of traditional approaches:

- Air Cooling Physical Constraints:
  - Thermal conductivity limitations of air
  - Airflow restrictions in dense environments
  - Fan power and noise limitations
  - Heat sink size and weight constraints
  - Temperature uniformity challenges
- Thermal Interface Limitations:
  - Contact resistance between components
  - Thermal interface material performance limits
  - Surface flatness and pressure distribution
  - Long-term reliability and pump-out effects
  - Assembly and manufacturing challenges
- Scaling and Density Barriers:
  - Practical limit of 350-400W per device with air
  - Density limitations (4-6 high-power GPUs per server)
  - Rack power density constraints (15-25kW typical)
  - Airflow interference between devices
  - System-level thermal management complexity
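
One way to see why air runs out of headroom is to compare how much coolant must move past a device to carry its heat away, using the heat balance Q = ṁ · c_p · ΔT. The sketch below is a back-of-envelope estimate, not a design calculation. It uses approximate room-temperature properties for air and water, assumes a single 700W device and a 10 K coolant temperature rise, and ignores heat sink and cold plate resistance entirely.

```python
# Approximate fluid properties near room temperature.
FLUIDS = {
    "air": {"density_kg_m3": 1.2, "cp_j_kg_k": 1005.0},
    "water": {"density_kg_m3": 997.0, "cp_j_kg_k": 4186.0},
}


def volumetric_flow_m3_s(heat_w, delta_t_k, fluid):
    """Flow needed to carry heat_w with a coolant temperature rise of delta_t_k.

    From Q = m_dot * cp * dT, with m_dot = density * volumetric flow.
    """
    props = FLUIDS[fluid]
    return heat_w / (props["density_kg_m3"] * props["cp_j_kg_k"] * delta_t_k)


heat_w, delta_t_k = 700.0, 10.0  # assumed: one 700 W device, 10 K coolant rise
air = volumetric_flow_m3_s(heat_w, delta_t_k, "air")
water = volumetric_flow_m3_s(heat_w, delta_t_k, "water")
print(f"air:   {air * 2118.88:.0f} CFM")        # m^3/s to cubic feet per minute
print(f"water: {water * 60_000:.2f} L/min")     # m^3/s to litres per minute
print(f"volume ratio: {air / water:.0f}x")
```

Under these assumptions the device needs roughly 120 CFM of air but only about 1 L/min of water, a difference in volume of more than three thousand times.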

Limitations of Traditional GPU Cooling Approaches

| Limitation | Impact on AI Workloads | Technical Constraint | Practical Consequence | Innovation Driver |
|---|---|---|---|---|
| Air Thermal Conductivity | Insufficient heat transfer | Physics of air as medium | Temperature rise, throttling | Liquid cooling adoption |
| Heat Sink Size Constraints | Inadequate surface area | Physical space in servers | Cooling capacity limitation | Advanced materials, density |
| Fan Noise and Power | Efficiency and environment issues | Fan power rises with the cube of airflow | Energy waste, workplace disruption | Alternative cooling media |
| Thermal Interface Efficiency | Bottleneck in heat transfer | Material limitations, contact physics | Performance inconsistency | Direct liquid contact, immersion |
| Density Limitations | Restricted computational capacity | Spacing requirements for airflow | Facility space inefficiency | Liquid and immersion cooling for higher density |
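
The cubic relationship between airflow and fan power in the table follows from the fan affinity laws: airflow scales linearly with fan speed, while power scales with its cube. The sketch below is idealized (same fan, same air density, same system resistance), and the 20W baseline is a hypothetical figure.

```python
def fan_power_w(base_power_w, airflow_ratio):
    """Fan power after scaling airflow, per the fan affinity laws.

    Airflow scales linearly with fan speed and power with its cube, so
    power scales with the cube of the airflow ratio (idealized: same fan,
    same air density, same system resistance curve).
    """
    if airflow_ratio < 0:
        raise ValueError("airflow_ratio must be non-negative")
    return base_power_w * airflow_ratio ** 3


base_w = 20.0  # hypothetical fan power at baseline airflow
for ratio in (1.0, 1.5, 2.0):
    print(f"{ratio:.1f}x airflow -> {fan_power_w(base_w, ratio):.1f} W")
```

Doubling airflow costs eight times the fan power, which is why pushing more air through a dense server quickly stops paying off.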

The Transition Point to Advanced Cooling

Identifying when traditional approaches become inadequate:

- Thermal Performance Indicators:
  - GPU core temperature exceeding 80-85°C
  - Significant thermal throttling under load
  - Temperature variation between devices
  - Inability to maintain boost clocks
  - Memory temperature limitations
- Operational Impact Signals:
  - Extended training times due to throttling
  - Inconsistent benchmark performance
  - Reliability issues and increased failures
  - Fan noise becoming problematic
  - Power consumption concerns
- Scaling and Growth Limitations:
  - Density requirements exceeding air capabilities
  - Facility cooling capacity constraints
  - Energy efficiency targets unachievable
  - Expansion plans requiring alternative approaches
  - Competitive disadvantage from capability limitations

Ready for the fascinating part? The transition point from air to liquid cooling has shifted dramatically over time. In 2018, liquid cooling was typically considered necessary only for GPUs exceeding 300W. By 2020, this threshold had increased to 350W, and by 2022, some advanced air cooling solutions could handle up to 400W. However, this trend has reversed with the latest generation of AI accelerators, with liquid cooling now recommended for GPUs above 350W due to the sustained nature of AI workloads. This “thermal threshold evolution” reflects both advances in air cooling technology and changes in workload characteristics that affect cooling requirements.
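
The indicators and thresholds in this section can be combined into a simple screening check. The sketch below is a heuristic for illustration, not a vendor recommendation. The 350W and 400W limits and the 85°C temperature come from the figures above, the 5% throttling tolerance is an assumption, and `GpuSample` and `advanced_cooling_reasons` are names introduced here. Samples would come from whatever telemetry source is available.

```python
from dataclasses import dataclass


@dataclass
class GpuSample:
    core_temp_c: float
    throttled: bool  # True if the device reported thermal throttling


def advanced_cooling_reasons(tdp_w, samples, sustained_load,
                             temp_limit_c=85.0, throttle_fraction_limit=0.05):
    """Return the reasons (possibly none) to look beyond air cooling.

    Thresholds are illustrative defaults drawn from the figures in the text
    (350 W for sustained loads, 400 W otherwise, 85 C core temperature);
    the 5% throttling tolerance is an assumption.
    """
    reasons = []
    tdp_limit_w = 350 if sustained_load else 400
    if tdp_w > tdp_limit_w:
        reasons.append(f"TDP {tdp_w} W exceeds {tdp_limit_w} W")
    if samples:
        peak = max(s.core_temp_c for s in samples)
        if peak > temp_limit_c:
            reasons.append(
                f"peak core temperature {peak:.0f} C exceeds {temp_limit_c:.0f} C"
            )
        throttled = sum(s.throttled for s in samples) / len(samples)
        if throttled > throttle_fraction_limit:
            reasons.append(f"throttled in {throttled:.0%} of samples")
    return reasons


# Made-up telemetry for a 700 W device under a sustained training load.
samples = [GpuSample(78, False), GpuSample(88, True),
           GpuSample(86, True), GpuSample(83, False)]
for reason in advanced_cooling_reasons(700, samples, sustained_load=True):
    print(reason)
```

Returning the list of reasons rather than a single yes or no keeps the check auditable: an empty list means none of the assumed thresholds were crossed.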

The Thermal Challenge of AI Workloads

The unique characteristics of AI workloads create thermal challenges fundamentally different from traditional computing applications.

Problem: AI workloads generate thermal profiles that are more intense, sustained, and challenging than those of general computing or even high-performance gaming.

The combination of maximum utilization, extended run times, and minimal idle periods creates thermal conditions that push cooling s…

FAQ

Q1: Why is GPU cooling technology important in the AI era?

GPU cooling is crucial in the AI era because AI workloads, particularly deep learning and neural networks, demand significant computational power from GPUs. These high-performance tasks generate a large amount of heat, which can reduce performance, cause thermal throttling, and even damage components over time. Advanced cooling technologies help maintain optimal temperatures, ensuring that GPUs can perform at their best without overheating. Efficient cooling systems are essential for ensuring long-term reliability and maximizing processing power, especially in data centers and AI research environments.

Q2: What are the key advancements in GPU cooling technology for AI applications?

Several innovations in GPU cooling have emerged to meet the demands of AI applications. One significant advancement is the shift from traditional air cooling to liquid cooling systems, which provide more efficient heat dissipation. Liquid cooling solutions, such as direct-to-chip coolers and immersion cooling, allow for better temperature regulation, enabling GPUs to handle the heavy computational load required for AI tasks. Additionally, advances in materials such as graphene, and in components such as heat pipes and vapor chambers, have improved how quickly heat moves away from the chip, leading to more effective heat management.

Q3: How does the increased demand for AI impact GPU cooling requirements?

As AI technologies continue to grow in complexity, the demand for more powerful GPUs with higher processing capabilities also increases. This results in a greater need for effective cooling solutions. The rise of AI-powered systems, such as self-driving cars, large-scale data centers, and real-time processing applications, has pushed the limits of traditional cooling methods. To meet these growing demands, GPU cooling systems are evolving to support higher thermal loads and more compact designs while maintaining reliability and efficiency.

Q4: What role does air cooling still play in the AI era?

Despite the rise of liquid cooling, air cooling still plays a crucial role in many AI systems, particularly in smaller setups or consumer-grade hardware. High-performance air coolers equipped with larger heatsinks and multiple fans are capable of effectively managing the heat generated by AI workloads, though they may not offer the same efficiency as liquid cooling. For many applications, air cooling remains an affordable and practical solution, offering simplicity, ease of maintenance, and cost-effectiveness for users with less intensive AI requirements.

Q5: How are AI-driven innovations influencing future GPU cooling technology?

AI is influencing the development of smarter, more adaptive cooling solutions. Using AI to optimize thermal management in real-time, GPU cooling systems can automatically adjust fan speeds, pump flow rates, or cooling cycles based on workload and temperature fluctuations. This dynamic approach helps maintain optimal temperatures without wasting energy. Additionally, AI-based monitoring and predictive analytics are being integrated into GPU cooling systems, allowing for proactive maintenance and early detection of potential cooling failures, further enhancing performance and system longevity.
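
As a rough illustration of the two ideas in this answer, the sketch below pairs a plain proportional fan controller with a linear-trend estimate of when a temperature limit will be reached. It is deliberately not an AI method: both functions are simple stand-ins for the learned models described above, and every name, gain, and threshold is an assumption made for the example.

```python
def fan_duty(temp_c, target_c=70.0, gain=0.04, min_duty=0.3, max_duty=1.0):
    """Proportional control: raise fan duty as temperature exceeds target."""
    duty = min_duty + gain * (temp_c - target_c)
    return max(min_duty, min(max_duty, duty))


def minutes_until_limit(temps_c, limit_c=85.0, interval_min=1.0):
    """Extrapolate a least-squares linear trend through evenly spaced samples.

    Returns the estimated minutes until limit_c is reached, or None if
    there are too few samples or the temperature is not rising.
    """
    n = len(temps_c)
    if n < 2:
        return None
    mean_x = (n - 1) / 2
    mean_y = sum(temps_c) / n
    numerator = sum((i - mean_x) * (t - mean_y) for i, t in enumerate(temps_c))
    denominator = sum((i - mean_x) ** 2 for i in range(n))
    slope = numerator / denominator  # degrees C per sample
    if slope <= 0:
        return None
    return max(0.0, (limit_c - temps_c[-1]) / slope * interval_min)


history = [70.0, 71.0, 72.0, 73.0]  # made-up readings, one per minute
print(f"fan duty at {history[-1]:.0f} C: {fan_duty(history[-1]):.2f}")
print(f"minutes until 85 C: {minutes_until_limit(history)}")
```

A production system would replace both pieces with models trained on real telemetry, but the structure stays the same: one loop reacts to the current temperature while another looks ahead for trouble.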