Introduction

The explosive growth of artificial intelligence has driven unprecedented demand for specialized computing hardware, with Graphics Processing Units (GPUs) emerging as the cornerstone of modern AI infrastructure. As AI models grow increasingly complex, the thermal challenges associated with high-performance GPUs have become a critical consideration in system design. This article explores the crucial role of Thermal Design Power (TDP) in AI GPU selection, providing a comprehensive framework for balancing performance requirements with cooling capabilities.

Understanding Thermal Design Power in the AI Context

Thermal Design Power (TDP) represents one of the most critical yet frequently misunderstood specifications in GPU selection for AI infrastructure.

Problem: TDP is often incorrectly interpreted as the maximum power consumption of a GPU, leading to inadequate cooling provisions.

Many organizations select GPUs based primarily on computational capabilities without fully understanding the thermal implications, resulting in systems that cannot sustain peak performance due to thermal constraints.

Aggravation: AI workloads typically drive GPUs to sustained maximum utilization, unlike traditional computing tasks with variable load patterns.

Further complicating matters, AI training workloads, and some inference workloads, often maintain near-100% GPU utilization for extended periods, sometimes weeks or months. This creates thermal challenges that are fundamentally different from those of traditional computing workloads with their variable utilization patterns.

Solution: A nuanced understanding of TDP in the AI context enables more effective hardware selection and cooling system design:

Defining TDP for AI Applications

The technical definition and practical implications of TDP in AI contexts:

- Technical Definition:
  - Thermal Design Power measured in watts (W)
  - Represents the average power dissipated as heat under typical workloads
  - Manufacturer-specified value for cooling system design
  - Not necessarily the maximum power consumption
  - Baseline for cooling solution requirements
- AI-Specific Considerations:
  - AI workloads typically exceed “typical” assumptions
  - Sustained maximum utilization for extended periods
  - Higher average power consumption than general computing
  - Minimal idle or low-power periods
  - Greater thermal management challenges
- TDP vs. Actual Power Consumption:
  - Modern AI GPUs often exceed stated TDP during peak operations
  - Power limit (PL) settings can allow 10-30% higher consumption
  - Dynamic boosting technologies temporarily increase power draw
  - Actual thermal output can exceed TDP by 15-25%
  - Cooling systems must account for these excursions

Here’s a critical insight: The relationship between stated TDP and actual thermal output in AI workloads is not straightforward. Research indicates that modern AI accelerators can exceed their nominal TDP by 15-30% during sustained AI training, particularly when power limits are adjusted for maximum performance. This “TDP gap” means that cooling systems designed exactly to the stated TDP specifications will likely be insufficient for maintaining optimal performance in AI applications.
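The TDP gap translates directly into a sizing rule. The following is a minimal sketch, assuming that a single flat excursion fraction (the 15-30% range quoted above) applies to sustained heat output. The function names are hypothetical and do not come from any vendor tool.

```python
def required_cooling_w(tdp_w: float, excursion: float = 0.25) -> float:
    """Per-GPU cooling capacity (W) needed if sustained heat output exceeds TDP.

    `excursion` is an assumed fraction above nominal TDP; the text quotes
    15-30% for sustained AI training with adjusted power limits.
    """
    if tdp_w <= 0 or excursion < 0:
        raise ValueError("tdp_w must be positive and excursion non-negative")
    return tdp_w * (1.0 + excursion)


def cooling_shortfall_w(tdp_w: float, designed_capacity_w: float,
                        excursion: float = 0.25) -> float:
    """Heat (W) the cooling system cannot absorb; 0.0 if capacity is sufficient."""
    return max(0.0, required_cooling_w(tdp_w, excursion) - designed_capacity_w)


if __name__ == "__main__":
    # Cooling sized exactly to a 700 W nameplate TDP, under several excursions.
    for excursion in (0.15, 0.25, 0.30):
        need = required_cooling_w(700, excursion)
        short = cooling_shortfall_w(700, designed_capacity_w=700, excursion=excursion)
        print(f"+{excursion:.0%}: need {need:.0f} W, shortfall {short:.0f} W")
```

If cooling is sized exactly to a 700W TDP, a 25% excursion leaves 175W per GPU unabsorbed. That heat shows up as rising temperature and, eventually, throttling.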

The Physics of GPU Heat Generation

Understanding the fundamental thermal characteristics of modern GPUs:

- Heat Generation Mechanisms:
  - Dynamic power consumption from transistor switching
  - Static power consumption from leakage current
  - Power delivery system losses
  - Memory subsystem thermal contribution
  - Interconnect and I/O thermal output
- Thermal Density Considerations:
  - Modern AI GPUs: 0.5-1.0 W/mm² thermal density
  - Highly non-uniform heat distribution across die
  - Hotspots can reach 2-3x average thermal density
  - Cooling solution must address peak density, not just total TDP
  - Die size and packaging significantly impact cooling approach
- Temporal Thermal Characteristics:
  - Rapid temperature changes during workload transitions
  - Thermal capacitance and response time considerations
  - Sustained vs. burst thermal loads
  - Cooling system response capabilities
  - Thermal throttling thresholds and behaviors

But here’s an interesting phenomenon: The thermal behavior of GPUs under AI workloads differs significantly from traditional computing patterns. While gaming or general computing workloads create variable thermal loads with frequent opportunities for cooling recovery, AI training creates a “thermal plateau” of sustained maximum heat output that can last for days or weeks. This fundamentally changes the cooling challenge from managing periodic thermal spikes to maintaining continuous cooling capacity for extended durations.
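A first-order lumped (RC) thermal model is a common way to reason about thermal capacitance, response time, and the difference between a short burst and a sustained plateau. The following is a minimal sketch, assuming one thermal resistance R from die to coolant and one heat capacity C. The parameter values in the demo are illustrative and are not measurements of any real GPU.

```python
import math
from typing import Optional


def steady_state_c(power_w: float, r_k_per_w: float, t_ambient_c: float) -> float:
    """Temperature the die settles at under constant power (the 'thermal plateau')."""
    return t_ambient_c + power_w * r_k_per_w


def temperature_at(t_s: float, power_w: float, r_k_per_w: float,
                   c_j_per_k: float, t_ambient_c: float) -> float:
    """Die temperature t_s seconds after a constant load starts from ambient."""
    tau_s = r_k_per_w * c_j_per_k  # thermal time constant in seconds
    return t_ambient_c + power_w * r_k_per_w * (1.0 - math.exp(-t_s / tau_s))


def time_to_threshold_s(power_w: float, r_k_per_w: float, c_j_per_k: float,
                        t_ambient_c: float, t_throttle_c: float) -> Optional[float]:
    """Seconds until the throttle threshold is crossed, or None if it never is."""
    rise_needed = t_throttle_c - t_ambient_c
    rise_max = power_w * r_k_per_w
    if rise_needed <= 0:
        return 0.0  # already at or above the threshold
    if rise_needed >= rise_max:
        return None  # the plateau stays at or below the threshold
    tau_s = r_k_per_w * c_j_per_k
    return -tau_s * math.log(1.0 - rise_needed / rise_max)


if __name__ == "__main__":
    # Illustrative values: 700 W load, 35 C coolant/inlet, throttle at 87 C.
    print(f"after a 10 s burst: {temperature_at(10, 700, 0.08, 500, 35):.1f} C")
    print(f"plateau: {steady_state_c(700, 0.08, 35):.1f} C")
    print(f"throttles after: {time_to_threshold_s(700, 0.08, 500, 35, 87):.0f} s")
    print(f"with R = 0.07 K/W: {time_to_threshold_s(700, 0.07, 500, 35, 87)}")
```

With these numbers, the heat capacity absorbs a 10 s burst, which reaches only about 47°C. A sustained load, however, settles at 91°C and crosses 87°C after roughly 106 s. Once the load is sustained, only the thermal resistance R (the cooling quality) sets the plateau: lowering R to 0.07 K/W holds it at 84°C.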

Performance Impact of Thermal Constraints

The relationship between thermal management and AI performance is direct and significant:

- Thermal Throttling Mechanisms:
  - Automatic clock reduction at temperature thresholds
  - Power limit enforcement based on thermal conditions
  - Memory bandwidth throttling during thermal events
  - Voltage and frequency scaling in response to temperature
  - Performance impact of 10-30% during throttling
- Performance Stability Considerations:
  - Consistent performance requires stable temperatures
  - Thermal fluctuations cause clock speed variations
  - Training convergence affected by performance inconsistency
  - Reproducibility challenges with variable thermal conditions
  - Benchmark results vs. sustained production performance
- Thermal Headroom Benefits:
  - Additional thermal headroom enables sustained boost clocks
  - Overclocking potential for increased performance
  - Reduced throttling frequency and duration
  - More consistent training and inference results
  - Improved hardware utilization efficiency

Impact of Thermal Management on AI GPU Performance

| Thermal Condition | Performance Impact | Training Implications | Inference Implications |
|---|---|---|---|
| Optimal Cooling | 100% performance, sustained boost | Fastest training, consistent convergence | Maximum throughput, consistent latency |
| Borderline Cooling | 90-95% performance, intermittent throttling | 5-10% longer training time, minor convergence issues | Throughput variability, occasional latency spikes |
| Inadequate Cooling | 70-85% performance, frequent throttling | 15-30% longer training time, potential convergence problems | Significant throughput reduction, unpredictable latency |
| Critical Thermal Issues | <70% performance, severe throttling | Failed training runs, numerical instability | Service disruptions, timeout failures |

Ready for the fascinating part? The relationship between cooling quality and AI performance isn’t linear—it follows a threshold pattern with significant performance cliffs. A cooling system that handles 95% of the thermal load might maintain near-optimal performance, while one handling 90% could see dramatic performance degradation due to the non-linear nature of thermal throttling algorithms. This “thermal cliff” effect means that slightly undersized cooling can have disproportionately negative performance impacts, fundamentally changing the cost-benefit analysis of cooling investments.

The Evolution of GPU TDP for AI Workloads

The thermal characteristics of AI-focused GPUs have evolved dramatically, reflecting the increasing computational demands of advanced AI models.

Problem: GPU TDP has increased substantially with each generation, creating escalating cooling challenges.

The thermal output of AI-focused GPUs has grown from around 250W to over 700W in just a few years, with future generations potentially exceeding 1000W. This rapid escalation creates significant challenges for cooling infrastructure that must evolve accordingly.

Aggravation: Many data centers and AI infrastructure were designed for lower power densities, creating compatibility challenges with newer GPUs.

Further complicating matters, existing facilities and cooling systems designed for previous GPU generations often cannot accommodate the thermal output of the latest AI accelerators without significant modifications or reduced deployment density.

Solution: Understanding the historical trajectory and future projections of GPU TDP enables more effective long-term infrastructure planning:

Historical TDP Progression

Tracking the evolution of GPU thermal characteristics provides important context:

- Early AI GPU Era (2016-2018):
  - NVIDIA Pascal Architecture (P100): 250W TDP
  - AMD Vega Architecture: 300W TDP
  - Typical server accommodated 8 GPUs per node
  - Air cooling sufficient for most deployments
  - Rack power density: 10-15kW typical
- Middle AI GPU Era (2019-2021):
  - NVIDIA Volta/Ampere (V100/A100): 300-400W TDP
  - AMD MI100 Series: 300W TDP
  - Server density reduced to 4-8 GPUs per node
  - Transition to liquid cooling for high-density deployments
  - Rack power density: 15-30kW typical
- Current AI GPU Era (2022-2024):
  - NVIDIA Hopper Architecture (H100): 350-700W TDP
  - AMD MI250/MI300: 500-750W TDP
  - Server density further reduced to 2-8 GPUs per node
  - Advanced cooling mandatory for full performance
  - Rack power density: 30-80kW typical

Here’s what makes this fascinating: The TDP growth of AI GPUs has significantly outpaced that of conventional computing hardware. Traditional computing hardware typically sees 15-20% power increases per generation. AI accelerators, by contrast, have seen 50-100% TDP increases across recent generations; NVIDIA’s SXM modules, for example, rose from 400W for the A100 to 700W for the H100. This accelerated thermal evolution reflects a fundamental shift in design philosophy, in which performance is prioritized even at the cost of significantly higher power consumption and thermal output.
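Per-generation jumps can be compared with an annualized rate. The following sketch uses the commonly cited TDPs of NVIDIA's SXM data-center modules (V100 SXM2 at 300W, A100 SXM4 at 400W, H100 SXM5 at 700W) and their announcement years. Other product lines and form factors, such as PCIe cards, will give different rates. The data structure and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Generation:
    name: str
    year: int      # year the part was announced
    tdp_w: float   # commonly cited TDP for the SXM module


SXM_GENERATIONS = [
    Generation("V100 SXM2", 2017, 300),
    Generation("A100 SXM4", 2020, 400),
    Generation("H100 SXM5", 2022, 700),
]


def generational_increase_pct(gens):
    """Percent TDP change from each generation to the next."""
    return [(b.name, 100.0 * (b.tdp_w / a.tdp_w - 1.0)) for a, b in zip(gens, gens[1:])]


def compound_annual_growth(gens):
    """Compound annual TDP growth rate between the first and last generation."""
    first, last = gens[0], gens[-1]
    return (last.tdp_w / first.tdp_w) ** (1.0 / (last.year - first.year)) - 1.0


if __name__ == "__main__":
    for name, pct in generational_increase_pct(SXM_GENERATIONS):
        print(f"{name}: +{pct:.0f}%")
    print(f"annualized: {compound_annual_growth(SXM_GENERATIONS):.1%} per year")
```

These three parts show jumps of about 33% and 75% per generation, which compound to roughly 18.5% per year over five years.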

Architectural Factors Driving TDP Increases

Understanding the technical drivers behind rising GPU thermal output:

- Transistor Count Scaling:
  - Early AI GPUs: 15-20 billion transistors
  - Current AI GPUs: 80-100+ billion transistors
  - Increased computational capabilities
  - Higher base power requirements
  - Greater leakage current contribution
- Memory Subsystem Evolution:
  - HBM memory integration increasing thermal output
  - Wider memory buses requiring more power
  - Higher memory bandwidth driving energy consumption
  - Memory now contributing 15-25% of total TDP
  - Cooling solutions must address memory thermal needs
- Specialized AI Acceleration Units:
  - Tensor cores and matrix multiplication units
  - Specialized data paths for AI operations
  - Higher utilization rates during AI workloads
  - Increased power density in specific chip regions
  - Creates challenging thermal hotspots

But here’s an interesting phenomenon: Performance per watt has actually improved despite rising absolute power levels. Modern AI GPUs deliver more operations per watt than their predecessors, but the dramatic increase in total computational capability has outweighed this efficiency gain. As a result, GPUs are simultaneously more energy-efficient and more thermally challenging. This paradox reflects the prioritization of absolute performance over power efficiency in AI hardware design.

Workload-Specific TDP Considerations

Different AI workloads create varying thermal profiles:

- Training vs. Inference Thermal Profiles:
  - Training: Sustained maximum power draw (90-100% TDP)
  - Inference: Variable power draw (40-80% TDP)
  - Training creates more challenging cooling requirements
  - Inference allows more deployment flexibility
  - Cooling design typically prioritizes training needs
- Model Size Impact on Thermal Behavior:
  - Larger models utilize more GPU resources
  - Higher sustained utilization across GPU functional units
  - Greater memory traffic and associated power draw
  - Reduced opportunity for dynamic power management
  - Increasingly challenging thermal conditions
- Domain-Specific Thermal Characteristics:
  - Computer vision: High tensor core utilization
  - Natural language processing: Memory-intensive operations
  - Recommendation systems: Variable resource utilization
  - Scientific computing: High FP64 unit utilization
  - Different thermal hotspot patterns by workload type

AI Workload Thermal Characteristics

| Workload Type | Power Profile | Utilization Pattern | Cooling Challenge | Typical TDP Percentage |
|---|---|---|---|---|
| Large Model Training | Sustained maximum | Consistent, long-duration | Very High | 95-105% of TDP |
| Small Model Training | Sustained high | Consistent, medium-duration | High | 85-95% of TDP |
| Batch Inference | Cyclical high | Predictable variation | Medium-High | 70-90% of TDP |
| Real-time Inference | Variable | Unpredictable spikes | Medium | 40-80% of TDP |
| Mixed Workloads | Highly variable | Complex patterns | Medium-High | 60-90% of TDP |
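The TDP percentages in the table can be turned into a heat-load range for a mixed fleet. The following is a minimal sketch, assuming every GPU shares the same TDP and using the table's low and high fractions as bounds. The workload keys and function name are hypothetical.

```python
# Sustained draw as a fraction of TDP (low, high), from the table above.
TDP_FRACTION = {
    "large_model_training": (0.95, 1.05),
    "small_model_training": (0.85, 0.95),
    "batch_inference": (0.70, 0.90),
    "realtime_inference": (0.40, 0.80),
    "mixed": (0.60, 0.90),
}


def fleet_heat_kw(tdp_w: float, gpus_by_workload: dict[str, int]) -> tuple[float, float]:
    """Low and high estimates of sustained GPU heat (kW) for a workload mix."""
    low = high = 0.0
    for workload, count in gpus_by_workload.items():
        lo_frac, hi_frac = TDP_FRACTION[workload]
        low += count * tdp_w * lo_frac
        high += count * tdp_w * hi_frac
    return low / 1000.0, high / 1000.0


if __name__ == "__main__":
    mix = {"large_model_training": 64, "realtime_inference": 32}
    low_kw, high_kw = fleet_heat_kw(700, mix)
    print(f"sustained GPU heat: {low_kw:.1f}-{high_kw:.1f} kW")
```

The spread between the two bounds comes mostly from the inference share. Sizing cooling to the high bound is the safer choice, because large-model training can sit at or slightly above TDP for the whole run.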

Future TDP Projections

Anticipating the thermal characteristics of next-generation AI accelerators:

- Near-Term Projections (1-2 Years):
  - Next-gen NVIDIA architecture: 600-1000W TDP
  - Next-gen AMD architecture: 600-900W TDP
  - Emerging AI-specific accelerators: 400-800W TDP
  - Continued increase in memory subsystem contribution
  - Further specialization of AI-specific functional units
- Medium-Term Trends (3-5 Years):
  - Potential plateau at 800-1200W per accelerator
  - Physical and practical cooling limitations
  - Possible shift to multi-chip modules with distributed thermal load
  - Increased integration of cooling solutions with chip design
  - Greater emphasis on architectural efficiency improvements
- Long-Term Possibilities (5+ Years):
  - Alternative computing paradigms (neuromorphic, photonic)
  - Fundamental architectural rethinking for efficiency
  - Potential TDP reductions through specialized designs
  - Integration of cooling directly into chip manufacturing
  - Possible divergence between training and inference hardware

Ready for the fascinating part? We are approaching the practical limits of air cooling for single devices. Constraints on airflow, heatsink size, and fan power make air cooling increasingly difficult above roughly 350-400W per device, a range that current AI accelerators have already exceeded. This reality is driving a comprehensive rethinking of both chip design and cooling approaches. It may lead to more distributed architectures that spread thermal load across multiple smaller chips rather than continuing to increase monolithic device TDP.

Cooling Technologies for Different TDP Profiles

The appropriate cooling technology for AI GPUs depends significantly on their TDP characteristics and deployment context.

Problem: Different TDP profiles require fundamentally different cooling approaches to maintain optimal performance.

The wide range of GPU thermal outputs—from 250W to 700W+ in current generations—means that no single cooling approach is optimal across all scenarios, creating complexity in system design and deployment.

Aggravation: The rapid evolution of GPU TDP outpaces the adaptation of cooling infrastructure, creating mismatches between hardware and cooling capabilities.

Further complicating matters, organizations often attempt to deploy new, higher-TDP GPUs in cooling environments designed for previous generations, leading to thermal throttling, reduced performance, and potential reliability issues.

Solution: Matching cooling technologies to specific TDP profiles enables optimal performance and operational efficiency:

Air Cooling Capabilities and Limitations

Understanding the practical boundaries of air cooling for AI accelerators:

- Effective TDP Range for Air Cooling:
  - Optimal performance up to 250-300W TDP
  - Borderline capability at 300-350W TDP
  - Significant limitations above 350W TDP
  - Practical upper limit around 400W with specialized solutions
  - Not recommended for latest high-TDP AI accelerators

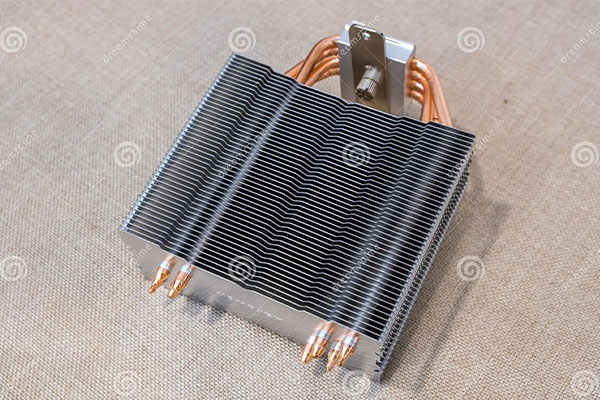

- Advanced Air Cooling Approaches:
  - Heat pipe and vapor chamber technologies
  - High-performance thermal interface materials
  - Optimized fin designs and airflow patterns
  - Push-pull fan configurations
  - Ducted airflow management
- Deployment Density Considerations:
  - Air-cooled 250W GPUs: Up to 8 per 2U server
  - Air-cooled 350W GPUs: Up to 4-6 per 2U server
  - Air-cooled 400W+ GPUs: Maximum 2-4 per 2U server
  - Rack density limitations: 15-25kW typical maximum
  - Significant spacing requirements between components
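Per-server GPU counts and a rack power ceiling together determine how many GPUs an air-cooled rack can hold. The following is a minimal sketch, assuming GPUs produce about 80% of server heat (within the 70-85% range this article gives for whole-system budgets), a 42U rack, and 2U servers. All of these are illustrative defaults, and the function names are hypothetical.

```python
import math


def server_heat_w(gpu_tdp_w: float, gpus_per_server: int, gpu_share: float = 0.8) -> float:
    """Whole-server heat, assuming GPUs produce `gpu_share` of it."""
    return gpu_tdp_w * gpus_per_server / gpu_share


def rack_heat_kw(gpu_tdp_w: float, gpus_per_server: int, servers: int,
                 gpu_share: float = 0.8) -> float:
    """Total rack heat (kW) for `servers` identical servers."""
    return server_heat_w(gpu_tdp_w, gpus_per_server, gpu_share) * servers / 1000.0


def max_servers_per_rack(rack_limit_kw: float, gpu_tdp_w: float, gpus_per_server: int,
                         gpu_share: float = 0.8, rack_units: int = 42,
                         server_units: int = 2) -> int:
    """Servers that fit under both the rack's cooling limit and its physical space."""
    per_server_w = server_heat_w(gpu_tdp_w, gpus_per_server, gpu_share)
    by_heat = math.floor(rack_limit_kw * 1000.0 / per_server_w + 1e-9)  # tolerate float round-off
    by_space = rack_units // server_units
    return min(by_heat, by_space)


if __name__ == "__main__":
    for tdp, gpus in ((250, 8), (350, 4), (400, 2)):
        n = max_servers_per_rack(25, tdp, gpus)
        print(f"{gpus} x {tdp} W per 2U: {n} servers, {n * gpus} GPUs in a 25 kW rack")
```

Under these assumptions, a 25kW rack holds 80 GPUs at 8 x 250W per server, 56 at 4 x 350W, and only 42 at 2 x 400W; in the last case physical space becomes the limit instead of heat.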

Here’s what makes this fascinating: The efficiency of air cooling decreases non-linearly as TDP increases. While air cooling a 250W GPU might be 90-95% efficient in terms of thermal transfer, this efficiency can drop to 70-80% for a 350W GPU and below 60% for 400W+ devices. This diminishing efficiency creates a practical ceiling where adding more airflow or larger heatsinks yields diminishing returns, fundamentally limiting the viability of air cooling for the highest-TDP AI accelerators.

Liquid Cooling for Medium to High TDP

Liquid cooling offers significant advantages for higher TDP profiles:

- Effective TDP Range for Direct Liquid Cooling:
  - Optimal performance from 300W to 700W+ TDP
  - Particularly effective for 400-600W range
  - Scalable to next-generation 800W+ accelerators
  - Enables full performance of current AI GPUs
  - Supports high-density deployments
- Implementation Approaches:
  - Cold plate direct contact with GPU die/package
  - Manifold distribution systems
  - Warm-water (facility water) vs. CDU implementations
  - Single-phase vs. two-phase liquid cooling
  - Hybrid air/liquid approaches for mixed components
- Deployment Density Capabilities:
  - Liquid-cooled 350W GPUs: Up to 8 per 2U server
  - Liquid-cooled 600W GPUs: Up to 4-8 per 2U server
  - Liquid-cooled 800W+ GPUs: Up to 4-6 per 2U server
  - Rack density potential: 40-100kW typical
  - Significantly reduced spacing requirements

But here’s an interesting phenomenon: The advantage of liquid cooling over air cooling increases non-linearly with TDP. For 250W GPUs, liquid cooling might offer a 20-30% thermal efficiency advantage. For 500W GPUs, this advantage typically grows to 50-70%, and for 700W+ devices, liquid cooling can be 3-5x more effective than even the most advanced air cooling. This expanding advantage creates an economic inflection point where the additional cost of liquid cooling is increasingly justified by the performance benefits as TDP increases.
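Sizing a single-phase cold-plate loop starts from the heat balance Q = ṁ·c_p·ΔT, solved for the coolant flow. The following is a minimal sketch, assuming plain water (c_p ≈ 4186 J/(kg·K), density ≈ 1 kg/L) and that the loop captures all GPU heat. Glycol mixtures have a lower c_p and need more flow. The function name is hypothetical.

```python
WATER_CP_J_PER_KG_K = 4186.0   # specific heat of water near room temperature
WATER_KG_PER_L = 1.0           # approximation; slightly lower at warm-water temperatures


def coolant_flow_lpm(heat_w: float, delta_t_k: float,
                     cp_j_per_kg_k: float = WATER_CP_J_PER_KG_K,
                     density_kg_per_l: float = WATER_KG_PER_L) -> float:
    """Coolant flow (L/min) to carry `heat_w` away with a `delta_t_k` inlet-outlet rise.

    From Q = m_dot * c_p * delta_T, solved for the mass flow m_dot.
    """
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    mass_flow_kg_s = heat_w / (cp_j_per_kg_k * delta_t_k)
    return mass_flow_kg_s / density_kg_per_l * 60.0


if __name__ == "__main__":
    for tdp in (350, 700, 1000):
        per_gpu = coolant_flow_lpm(tdp, delta_t_k=10)
        print(f"{tdp} W: {per_gpu:.2f} L/min per GPU, {8 * per_gpu:.1f} L/min for 8 GPUs")
```

A 700W GPU needs about 1 L/min at a 10 K rise. Allowing a larger temperature rise halves the flow for each doubling of ΔT, which is one reason warm-water designs are attractive.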

Immersion Cooling for Extreme TDP Scenarios

Immersion cooling provides the ultimate solution for the highest TDP profiles:

- Effective TDP Range for Immersion Cooling:
  - Optimal for deployments with 500W+ GPUs
  - Particularly valuable above 700W TDP
  - Highest TDP ceiling of the approaches discussed here
  - Future-proof for next several GPU generations
  - Enables maximum performance density
- Implementation Variations:
  - Single-phase immersion (non-boiling dielectric fluid)
  - Two-phase immersion (fluid boiling for heat transfer)
  - Open bath vs. sealed tank designs
  - Fluid circulation and filtration systems
  - Heat rejection approaches and integration
- Deployment Density Advantages:
  - Immersion-cooled 600W GPUs: Up to 8-16 per immersion unit
  - Immersion-cooled 1000W+ GPUs: Practical with appropriate design
  - Rack-equivalent density potential: 100-200kW
  - Elimination of internal server fans and heatsinks
  - Significantly reduced physical footprint

Cooling Technology Comparison for AI GPU TDP Ranges

| TDP Range | Air Cooling | Liquid Cooling | Immersion Cooling | Recommended Approach |
|---|---|---|---|---|
| 200-300W | Effective | Effective but costly | Effective but excessive | Air cooling with quality heatsinks |
| 300-400W | Borderline | Highly effective | Effective but excessive | Advanced air or entry liquid cooling |
| 400-600W | Inadequate | Highly effective | Very effective | Direct liquid cooling |
| 600-800W | Not viable | Effective | Highly effective | Advanced liquid or immersion cooling |
| 800W+ | Not viable | Borderline | Optimal | Immersion cooling |
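The table's recommendation column can be encoded as a lookup for use in planning scripts. The following is a minimal sketch, assuming that TDPs exactly on a band boundary (300, 400, 600, 800W) belong to the lower band, since the table's ranges share endpoints, and that parts below 200W fall into the lowest band. The function name is hypothetical.

```python
from bisect import bisect_left

# Inclusive upper bounds (W) of the table's TDP bands, and each band's recommendation.
_BAND_UPPER_W = [300, 400, 600, 800]
_RECOMMENDATION = [
    "Air cooling with quality heatsinks",
    "Advanced air or entry liquid cooling",
    "Direct liquid cooling",
    "Advanced liquid or immersion cooling",
    "Immersion cooling",  # 800W+
]


def recommended_cooling(tdp_w: float) -> str:
    """Return the table's recommended approach for a GPU TDP in watts."""
    if tdp_w <= 0:
        raise ValueError("tdp_w must be positive")
    # bisect_left puts a value equal to a bound into the lower band.
    return _RECOMMENDATION[bisect_left(_BAND_UPPER_W, tdp_w)]


if __name__ == "__main__":
    for tdp in (250, 350, 500, 700, 1000):
        print(tdp, "W ->", recommended_cooling(tdp))
```

A real selection would also weigh the scale and facility factors discussed next; the lookup captures only the TDP dimension.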

Hybrid and Specialized Cooling Approaches

Innovative approaches for specific TDP scenarios:

- Targeted Liquid Cooling:
  - Liquid cooling only for GPUs, air for other components
  - Simplified implementation compared to full liquid cooling
  - Addresses highest TDP components specifically
  - Cost-effective middle ground approach
  - Suitable for 350-600W GPU TDP range
- Rear Door Heat Exchangers:
  - Water-cooled door added to standard racks
  - Captures and removes heat at rack level
  - Compatible with internal air cooling
  - Increases air cooling viable range to 350-450W
  - Relatively simple facility integration
- Direct-to-Chip Two-Phase Cooling:
  - Refrigerant delivered directly to GPU
  - Phase change provides exceptional heat transfer
  - Compact implementation compared to single-phase liquid cooling
  - Effective for 400-800W+ TDP range
  - Emerging technology with growing adoption

Ready for the fascinating part? The most effective cooling approach often varies not just by TDP but by deployment scale. For small clusters (4-16 GPUs), the implementation complexity of advanced cooling may outweigh the performance benefits even for high-TDP devices. For large-scale deployments (100+ GPUs), the economies of scale fundamentally change the cost-benefit analysis, making advanced cooling economically advantageous even for moderate TDP profiles. This “scale effect” means that optimal cooling technology selection depends not just on the GPU specifications but on the overall deployment size and organizational context.
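The scale effect reduces to a fixed cost spread over a growing number of GPUs. The following is a minimal sketch, assuming advanced cooling carries a one-time fixed cost (facility work, distribution units, training) plus a per-GPU hardware cost, and returns a per-GPU value from recovered performance over the system's life. All dollar figures in the demo are hypothetical, as are the function names.

```python
import math
from typing import Optional


def net_benefit(n_gpus: int, fixed_cost: float, benefit_per_gpu: float,
                cost_per_gpu: float) -> float:
    """Lifetime value of advanced cooling minus its fixed and per-GPU costs."""
    return n_gpus * (benefit_per_gpu - cost_per_gpu) - fixed_cost


def break_even_gpus(fixed_cost: float, benefit_per_gpu: float,
                    cost_per_gpu: float) -> Optional[int]:
    """Smallest deployment size at which advanced cooling pays off, or None."""
    margin = benefit_per_gpu - cost_per_gpu
    if margin <= 0:
        return None  # never pays off, at any scale
    return math.ceil(fixed_cost / margin)


if __name__ == "__main__":
    # Hypothetical: $200k fixed cost, $1.5k/GPU hardware, $3k/GPU recovered value.
    print("break-even GPUs:", break_even_gpus(200_000, 3_000, 1_500))
    for n in (16, 128, 512):
        print(n, "GPUs ->", net_benefit(n, 200_000, 3_000, 1_500))
```

With these inputs, a 16-GPU cluster loses $176,000 and a 512-GPU deployment gains $568,000; the break-even point is 134 GPUs. The same per-GPU economics therefore lead to opposite decisions at different scales.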

System-Level Thermal Considerations

Effective thermal management requires looking beyond individual GPU TDP to consider system-level interactions and holistic design approaches.

Problem: Focusing exclusively on GPU TDP overlooks critical system-level thermal interactions that affect overall performance.

Many cooling solutions address GPU thermal management in isolation, neglecting the complex thermal interactions between multiple GPUs, CPUs, memory, and power delivery components within a system.

Aggravation: High-density AI systems create compound thermal challenges that exceed the sum of individual component TDPs.

Further complicating matters, the close proximity of multiple high-TDP components in modern AI servers creates thermal interactions where components affect each other’s cooling effectiveness, potentially leading to unexpected hotspots and performance limitations.

Solution: A holistic approach to thermal design that considers all system components and their interactions enables optimal performance and reliability:

Multi-GPU Thermal Dynamics

Understanding the thermal interactions in multi-GPU systems:

- Thermal Coupling Effects:
  - Heat transfer between adjacent GPUs
  - Cumulative impact on ambient temperature
  - Airflow or liquid flow sharing between devices
  - Thermal gradient development across GPU array
  - Potential for cascading thermal issues
- Cooling Resource Distribution:
  - Balanced vs. unbalanced cooling allocation
  - Serial vs. parallel cooling configurations
  - Flow rate and pressure considerations
  - Temperature rise across sequential devices
  - Ensuring adequate cooling for all positions
- Workload Distribution Impacts:
  - Thermal implications of workload scheduling
  - Load balancing for thermal optimization
  - Thermal-aware job placement
  - Synchronous vs. asynchronous operation effects
  - Potential for thermally-induced performance imbalance

Here’s what makes this fascinating: In multi-GPU systems, the thermal behavior is not simply the sum of individual GPU characteristics. Research shows that in typical 8-GPU servers, the GPUs in central positions can run 10-15°C hotter than those at the ends of the array when using identical workloads. This “thermal position effect” means that system design must account for worst-case positions rather than average thermal conditions, often requiring 20-30% additional cooling capacity beyond what simple TDP summation would suggest.
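Air that upstream devices have already heated is one contributor to position-dependent temperatures, and it underlies the temperature rise across sequential devices listed above. The following is a minimal sketch, assuming all GPU heat enters one shared front-to-back air stream with no bypass or mixing, standard air properties (ρ ≈ 1.2 kg/m³, c_p ≈ 1005 J/(kg·K)), and a hypothetical 150 CFM per duct.

```python
AIR_DENSITY_KG_M3 = 1.2
AIR_CP_J_PER_KG_K = 1005.0
M3_PER_S_PER_CFM = 0.00047194745


def inlet_temperatures_c(inlet_c: float, gpu_heat_w: list[float],
                         airflow_cfm: float) -> list[float]:
    """Inlet air temperature seen by each GPU in a serial airflow path.

    Each device heats the shared stream by Q / (m_dot * c_p) before it
    reaches the next device downstream.
    """
    mass_flow_kg_s = AIR_DENSITY_KG_M3 * airflow_cfm * M3_PER_S_PER_CFM
    temps = []
    t = inlet_c
    for heat_w in gpu_heat_w:
        temps.append(t)
        t += heat_w / (mass_flow_kg_s * AIR_CP_J_PER_KG_K)
    return temps


if __name__ == "__main__":
    # Two 700 W GPUs sharing one 150 CFM duct, 35 C server inlet (illustrative).
    for i, t in enumerate(inlet_temperatures_c(35.0, [700.0, 700.0], 150.0)):
        print(f"GPU {i}: inlet {t:.1f} C")
```

In this example the downstream GPU breathes air about 8°C warmer than the upstream one, before any conduction between neighbors is considered. For this reason, worst-case positions rather than averages should drive the design.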

Whole System Power and Thermal Budgeting

Considering the complete thermal profile of AI systems:

- Component TDP Contributions:
  - GPUs: Typically 70-85% of system thermal output
  - CPUs: 5-15% of system thermal output
  - Memory: 3-8% of system thermal output
  - Power delivery: 5-10% of system thermal output
  - Networking and storage: 2-5% of system thermal output
- Power Delivery Thermal Considerations:
  - VRM efficiency and heat generation
  - Power conversion losses
  - Cable and connector thermal limitations
  - Power delivery cooling requirements
  - Thermal feedback effects on power efficiency
- System-Level Thermal Constraints:
  - Chassis airflow or liquid flow limitations
  - Ambient temperature assumptions
  - Altitude considerations
  - Acoustic constraints
  - Reliability and lifespan targets

But here’s an interesting phenomenon: The thermal contribution of non-GPU components increases in relative importance as GPU cooling improves. When GPUs are inadequately cooled, they dominate the thermal profile. With advanced GPU cooling, previously secondary heat sources like power delivery systems and memory can become limiting factors. Some organizations have found that after implementing liquid cooling for GPUs, power delivery components became the primary thermal constraint, requiring additional targeted cooling solutions to achieve optimal system performance.

Facility Integration Considerations

Connecting system thermal management to facility infrastructure:

- Heat Rejection Requirements:
  - Total thermal load calculation
  - Peak vs. average heat rejection needs
  - Redundancy and backup considerations
  - Seasonal variation planning
  - Growth and expansion accommodation
- Cooling Distribution Architecture:
  - Centralized vs. distributed approaches
  - Primary/secondary loop designs
  - Temperature and flow specifications
  - Monitoring and control integration
  - Maintenance and serviceability planning
- Environmental Condition Management:
  - Temperature setpoints and tolerances
  - Humidity control requirements
  - Airflow management strategies
  - Contamination and filtration considerations
  - Thermal stratification prevention
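The heat rejection requirements above (peak load, growth, redundancy) combine into a unit count. The following is a minimal sketch, assuming unit capacity is already rated at the worst-case (hottest) seasonal ambient and that redundancy is expressed as N+R spare units. The demo figures and function name are hypothetical.

```python
import math


def heat_rejection_plan(peak_it_heat_kw: float, growth_fraction: float,
                        unit_capacity_kw: float, redundant_units: int = 1) -> dict:
    """Size heat-rejection units for peak load plus growth, with N+R redundancy."""
    design_kw = peak_it_heat_kw * (1.0 + growth_fraction)  # size to peak, not average
    units_needed = math.ceil(design_kw / unit_capacity_kw)
    return {
        "design_kw": design_kw,
        "units_needed": units_needed,
        "units_installed": units_needed + redundant_units,
    }


if __name__ == "__main__":
    # 800 kW peak heat, 25% planned growth, 350 kW units, N+1 redundancy.
    print(heat_rejection_plan(800, 0.25, 350))
```

Here a 1,000kW design load needs three 350kW units, and N+1 redundancy brings the installed count to four.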

System-Level Thermal Design Considerations

| Consideration | Air Cooling | Liquid Cooling | Immersion Cooling | Critical Factors |
|---|---|---|---|---|
| Multi-GPU Thermal Coupling | Significant | Moderate | Minimal | GPU spacing, airflow patterns |
| Power Delivery Cooling | Challenging | Moderate | Simplified | VRM efficiency, targeted cooling |
| Facility Integration | Straightforward | Moderate complexity | High complexity | Infrastructure compatibility |
| Scalability | Limited | Good | Excellent | Future expansion plans |
| Operational Flexibility | High | Moderate | Limited | Maintenance and service needs |
| Monitoring Requirements | Moderate | High | Very High | Control systems, sensor placement |

Thermal Monitoring and Management

Comprehensive monitoring enables proactive thermal management:

- Sensor Placement Strategy:
  - GPU internal temperature sensors
  - Memory temperature monitoring
  - VRM and power delivery thermal sensing
  - Inlet and outlet temperature measurement
  - Ambient and facility condition monitoring
- Control System Approaches:
  - Reactive vs. predictive thermal management
  - Dynamic fan or pump speed control
  - Workload throttling and scheduling
  - Thermal-aware resource allocation
  - Emergency response automation
- Operational Management Practices:
  - Regular thermal performance assessment
  - Trend analysis and degradation detection
  - Preventative maintenance scheduling
  - Thermal optimization procedures
  - Continuous improvement processes

Ready for the fascinating part? The most advanced AI cooling implementations are beginning to use AI techniques to optimize their own cooling. Machine learning models trained on historical thermal data can predict temperature patterns, optimize cooling resources, and even adjust workload scheduling to maintain optimal thermal conditions. These “self-cooling AI systems” can improve cooling efficiency by 15-30% compared to traditional control approaches while simultaneously enhancing performance stability. This represents a fascinating case of AI technology being applied to solve challenges created by AI hardware itself.

Economic and Operational Implications

The TDP characteristics of AI GPUs have significant economic and operational implications that extend far beyond technical considerations.

Problem: Higher TDP GPUs create substantial economic challenges through increased infrastructure costs and operational expenses.

The escalating thermal output of AI accelerators drives significant increases in cooling infrastructure costs, energy consumption, and operational complexity that must be carefully managed to maintain economic viability.

Aggravation: Organizations often underestimate the full economic impact of high-TDP GPUs when making hardware selection decisions.

Further complicating matters, many organizations focus primarily on the direct acquisition cost of GPUs without adequately accounting for the substantial infrastructure and operational costs associated with cooling high-TDP devices.

Solution: A comprehensive economic analysis that considers all TDP-related costs enables more informed hardware selection and infrastructure planning:

Capital Expenditure Implications

Understanding how GPU TDP affects infrastructure investments:

- Cooling Infrastructure Scaling:
  - 250W GPU: $500-1,500 per GPU cooling cost
  - 400W GPU: $1,000-3,000 per GPU cooling cost
  - 700W GPU: $2,000-5,000 per GPU cooling cost
  - Superlinear rather than linear cost scaling
  - Significant impact on total deployment cost
- Facility Infrastructure Requirements:
  - Power distribution upgrades
  - Heat rejection system scaling
  - Space allocation considerations
  - Structural reinforcement needs
  - Monitoring and management systems
- Deployment Density Economics:
  - Lower-TDP GPUs: Higher density, lower per-GPU infrastructure cost
  - Higher-TDP GPUs: Lower density, higher per-GPU infrastructure cost
  - Facility space utilization efficiency
  - Infrastructure amortization considerations
  - Total cost of ownership implications

Here’s what makes this fascinating: The relationship between GPU TDP and total infrastructure cost is non-linear. Research indicates that doubling GPU TDP typically increases cooling infrastructure costs by 2.5-3.5x rather than just 2x. This superlinear cost relationship creates economic inflection points at which slightly lower-performance, lower-TDP GPUs may offer better overall value than maximum-performance options once total infrastructure costs are considered.
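A fixed cost multiple per doubling of TDP corresponds to a power law, cost ∝ TDP^k with k = log₂(multiple). That is superlinear when k > 1, but it is not exponential: under a true exponential, the multiple for doubling would itself grow with the starting TDP. The following minimal sketch computes k both from the 2.5-3.5x range and from the per-GPU cost bands listed above. The function names are hypothetical.

```python
import math


def implied_exponent(cost_ratio_when_tdp_doubles: float) -> float:
    """k in cost ∝ TDP**k, given the cost multiple for a 2x TDP increase."""
    return math.log2(cost_ratio_when_tdp_doubles)


def fitted_exponent(tdp_a_w: float, cost_a: float, tdp_b_w: float, cost_b: float) -> float:
    """k in cost ∝ TDP**k that passes through two (TDP, cost) points."""
    return math.log(cost_b / cost_a) / math.log(tdp_b_w / tdp_a_w)


def scaled_cost(base_cost: float, base_tdp_w: float, new_tdp_w: float, k: float) -> float:
    """Cooling cost at a new TDP under a power law with exponent k."""
    return base_cost * (new_tdp_w / base_tdp_w) ** k


if __name__ == "__main__":
    for ratio in (2.5, 3.5):
        print(f"{ratio}x per doubling -> k = {implied_exponent(ratio):.2f}")
    # Midpoints of the per-GPU bands above: about $1,000 at 250 W and $3,500 at 700 W.
    print(f"bands imply k = {fitted_exponent(250, 1_000, 700, 3_500):.2f}")
```

The 2.5-3.5x range corresponds to k between about 1.32 and 1.81. The band midpoints give roughly 1.22, which is also superlinear, though less steep.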

Operational Expenditure Considerations

Ongoing costs significantly impacted by GPU TDP:

- Energy Consumption Economics:
  - Direct GPU power consumption
  - Cooling energy requirements (30-40% of GPU energy)
  - Power conversion losses
  - Facility overhead energy use
  - Total energy cost per GPU hour
- Cooling System Operational Costs:
  - Maintenance and service requirements
  - Consumable replacement (filters, fluids)
  - Specialized expertise needs
  - Monitoring and management overhead
  - System lifespan and replacement cycles
- Reliability and Availability Impacts:
  - Higher TDP correlation with failure rates
  - Thermal-related downtime risk
  - Maintenance frequency requirements
  - Component lifespan reduction
  - Business continuity considerations

But here’s an interesting phenomenon: The operational cost differential between lower and higher TDP GPUs varies dramatically based on energy costs and utilization patterns. In regions with low electricity costs ($0.05-0.08/kWh), the operational cost premium for high-TDP GPUs might be 30-50%. In high-cost energy regions ($0.20-0.30/kWh), this premium can reach 100-150%, fundamentally changing the economic equation. This “energy cost multiplier” means that optimal GPU selection should vary significantly based on deployment location and local energy economics.
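The energy cost multiplier appears only when some operating costs do not scale with power. If energy were the only cost, the percentage premium would simply equal the power ratio at any electricity price. The following is a minimal sketch, assuming full utilization, cooling energy at 35% of GPU energy (within the 30-40% range above), and a hypothetical $500 per GPU per year of power-independent costs. The function names are also hypothetical.

```python
HOURS_PER_YEAR = 8760


def annual_opex(gpu_power_w: float, price_per_kwh: float,
                cooling_overhead: float = 0.35, fixed_per_year: float = 500.0) -> float:
    """Yearly cost of one GPU at full utilization: power-independent costs plus energy.

    cooling_overhead: cooling energy as a fraction of GPU energy.
    fixed_per_year: hypothetical costs that do not scale with power.
    """
    energy_kwh = gpu_power_w * (1.0 + cooling_overhead) * HOURS_PER_YEAR / 1000.0
    return fixed_per_year + energy_kwh * price_per_kwh


def opex_premium(low_w: float, high_w: float, price_per_kwh: float,
                 cooling_overhead: float = 0.35, fixed_per_year: float = 500.0) -> float:
    """Fractional extra yearly opex of the higher-power GPU over the lower-power one."""
    low = annual_opex(low_w, price_per_kwh, cooling_overhead, fixed_per_year)
    high = annual_opex(high_w, price_per_kwh, cooling_overhead, fixed_per_year)
    return high / low - 1.0


if __name__ == "__main__":
    for price in (0.065, 0.25):
        print(f"${price}/kWh: 700 W vs 300 W premium {opex_premium(300, 700, price):.0%}")
```

With these inputs, the premium of a 700W part over a 300W part is about 42% at $0.065/kWh and about 85% at $0.25/kWh. The exact figures depend entirely on the assumed fixed costs, but the direction of the effect holds whenever such costs exist.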

Performance Economics and ROI

Balancing TDP-related costs against performance benefits:

- Performance per Watt Considerations:

- Computational output per unit of power

- Energy efficiency across different GPU options

- Workload-specific efficiency variations

- Performance scaling vs. power scaling

- Total cost per training run or inference

- Utilization and Productivity Factors:

- Higher TDP often enables higher absolute performance

- Faster job completion and higher throughput

- Resource utilization efficiency

- Development and research velocity

- Time-to-market and competitive advantages

- Total Value Assessment:

- Hardware and infrastructure costs

- Operational expenses over system lifetime

- Performance and productivity benefits

- Risk and reliability considerations

- Strategic and competitive factors
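The "performance scaling vs. power scaling" point reduces to simple arithmetic. Energy per job is average power times runtime, and runtime shrinks in proportion to relative performance. The sketch below uses hypothetical figures: a 300 W baseline and a 700 W part that is 2x faster on a 100-hour job. Under these figures the faster part finishes in half the time but uses more energy per job.

```python
def energy_per_job_kwh(avg_power_w: float, relative_perf: float, baseline_hours: float) -> float:
    """Energy for one fixed-size job: power x runtime, with runtime = baseline / relative_perf."""
    runtime_h = baseline_hours / relative_perf
    return avg_power_w * runtime_h / 1000.0


def perf_per_watt(relative_perf: float, avg_power_w: float) -> float:
    """Relative throughput per watt of average draw."""
    return relative_perf / avg_power_w


# Hypothetical comparison: baseline GPU vs a faster, higher-TDP GPU on the same job.
baseline = energy_per_job_kwh(300, 1.0, 100)   # 100 h at 300 W
high_tdp = energy_per_job_kwh(700, 2.0, 100)   # 50 h at 700 W
print(f"baseline: {baseline:.1f} kWh, high-TDP: {high_tdp:.1f} kWh per job")
print(f"perf/W ratio (high vs baseline): {perf_per_watt(2.0, 700) / perf_per_watt(1.0, 300):.2f}")
```

Whether the extra energy is worth it depends on how much the shorter runtime is worth, which is exactly the utilization and productivity trade-off listed above.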

Economic Impact of GPU TDP Selection

| TDP Range | Relative Acquisition Cost | Infrastructure Premium | Operational Cost Impact | Performance Benefit | Best For |
|---|---|---|---|---|---|
| 200-300W | Baseline | Baseline | Baseline | Baseline | Cost-sensitive, scale-out deployments |
| 300-400W | 1.3-1.8x | 1.5-2.0x | 1.3-1.6x | 1.3-1.5x | Balanced performance/cost requirements |
| 400-600W | 1.8-2.5x | 2.0-3.0x | 1.6-2.0x | 1.5-1.8x | Performance-prioritized applications |
| 600-800W | 2.5-4.0x | 3.0-5.0x | 2.0-3.0x | 1.8-2.2x | Maximum performance requirements |
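The multipliers in the table above can be combined into a rough price-performance figure per tier. The sketch takes the midpoint of each range and weights the three cost multipliers by a hypothetical split of baseline lifetime cost (50% acquisition, 20% infrastructure, 30% operations). The split is an assumption, not something the table specifies, and a different split will move the results.

```python
# Midpoints of the ranges in the "Economic Impact of GPU TDP Selection" table,
# relative to the 200-300W baseline tier.
TIERS = {
    "200-300W": {"acq": 1.0, "infra": 1.0, "opex": 1.0, "perf": 1.0},
    "300-400W": {"acq": 1.55, "infra": 1.75, "opex": 1.45, "perf": 1.4},
    "400-600W": {"acq": 2.15, "infra": 2.5, "opex": 1.8, "perf": 1.65},
    "600-800W": {"acq": 3.25, "infra": 4.0, "opex": 2.5, "perf": 2.0},
}

# Hypothetical share of baseline-tier lifetime cost in each category (sums to 1.0).
COST_SHARES = {"acq": 0.5, "infra": 0.2, "opex": 0.3}


def relative_tco(tier: dict, shares: dict) -> float:
    """Lifetime cost relative to the baseline tier, weighting each multiplier by its share."""
    return sum(shares[key] * tier[key] for key in shares)


def price_performance(tier: dict, shares: dict) -> float:
    """Relative performance per unit of relative lifetime cost (baseline tier = 1.0)."""
    return tier["perf"] / relative_tco(tier, shares)


for name, tier in TIERS.items():
    print(f"{name}: relative TCO {relative_tco(tier, COST_SHARES):.2f}, "
          f"price-performance {price_performance(tier, COST_SHARES):.2f}")
```

This crude measure ignores deadlines and time-to-result, which is why the higher tiers can still be the right choice for the workloads listed in the "Best For" column.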

Strategic Decision Frameworks

Structured approaches to GPU TDP-based selection:

- Workload-Based Selection Criteria:

- Training-focused: Higher TDP often justified

- Inference-focused: Lower TDP often more economical

- Research and development: Performance priority

- Production deployment: Efficiency priority

- Mixed workloads: Balanced approach

- Scale-Based Considerations:

- Small deployments: Infrastructure overhead more significant

- Large deployments: Economies of scale for cooling

- Growth planning implications

- Incremental deployment strategies

- Technology refresh cycle planning

- Risk and Flexibility Factors:

- Future TDP growth expectations

- Technology obsolescence considerations

- Vendor roadmap alignment

- Market and competitive dynamics

- Organizational strategy and priorities

Ready for the fascinating part? The most sophisticated organizations are implementing “TDP portfolio strategies” rather than standardizing on a single GPU type. By deploying a mix of high-TDP GPUs for performance-critical workloads and lower-TDP options for efficiency-focused applications, these organizations can optimize both performance and economics. Some have found that a carefully balanced portfolio approach can improve overall price-performance by 20-40% compared to homogeneous deployments, while simultaneously providing greater flexibility to adapt to evolving requirements.
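A portfolio strategy can be sketched as a per-workload assignment: for each job, choose the cheapest GPU type that still meets its deadline, then compare against running everything on a single type. The GPU types, hourly costs, performance ratios, and workloads below are hypothetical, and the greedy rule is one simple heuristic rather than a complete capacity planner.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuType:
    name: str
    hourly_cost: float    # all-in cost per GPU-hour (hypothetical)
    relative_perf: float  # throughput relative to a reference GPU


@dataclass(frozen=True)
class Workload:
    name: str
    reference_gpu_hours: float  # job size in reference-GPU hours
    num_gpus: int               # GPUs allocated to the job
    deadline_hours: float       # maximum acceptable wall-clock time


def runtime_hours(gpu: GpuType, wl: Workload) -> float:
    return wl.reference_gpu_hours / (gpu.relative_perf * wl.num_gpus)


def job_cost(gpu: GpuType, wl: Workload) -> float:
    # GPU-hours actually consumed on this type, times its hourly cost.
    return wl.reference_gpu_hours / gpu.relative_perf * gpu.hourly_cost


def assign(workloads, gpu_types):
    """Greedy per-workload choice: cheapest GPU type that still meets the deadline."""
    plan = {}
    for wl in workloads:
        feasible = [g for g in gpu_types if runtime_hours(g, wl) <= wl.deadline_hours]
        if not feasible:
            raise ValueError(f"no GPU type meets the deadline for {wl.name}")
        plan[wl.name] = min(feasible, key=lambda g: job_cost(g, wl))
    return plan


HIGH = GpuType("high-TDP", hourly_cost=4.0, relative_perf=2.0)
LOW = GpuType("low-TDP", hourly_cost=1.5, relative_perf=1.0)
WORKLOADS = [Workload("training", 8000, 8, 600), Workload("inference", 2000, 4, 1000)]

plan = assign(WORKLOADS, [HIGH, LOW])
mixed = sum(job_cost(plan[wl.name], wl) for wl in WORKLOADS)
# Only the high-TDP type meets the training deadline, so a homogeneous fleet must use it.
homogeneous = sum(job_cost(HIGH, wl) for wl in WORKLOADS)
print({name: gpu.name for name, gpu in plan.items()}, mixed, homogeneous)
```

The saving in this toy case comes entirely from moving the deadline-tolerant inference job onto the cheaper-per-unit-of-work, lower-TDP type.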

Future Trends in GPU Thermal Design

The thermal characteristics of AI accelerators continue to evolve rapidly, with several emerging trends poised to reshape TDP considerations.

Problem: Current trajectories suggest GPU TDP will continue increasing, potentially exceeding practical cooling capabilities.

If current trends continue, next-generation AI accelerators could reach TDPs of 1000W or more, approaching fundamental limits of practical cooling technologies and infrastructure capabilities.

Aggravation: The performance demands driving TDP increases show no signs of abating as AI models continue to grow in size and complexity.

Further complicating matters, the computational requirements of advanced AI models continue to grow exponentially, creating relentless pressure for increased GPU performance even at the cost of higher power consumption and thermal output.

Solution: Understanding emerging trends in GPU thermal design enables more forward-looking infrastructure planning and technology selection:

Architectural Evolution for Thermal Efficiency

New approaches to GPU architecture addressing thermal challenges:

- Chiplet and Multi-Die Approaches:

- Disaggregation of monolithic GPUs into multiple smaller dies

- Distributed thermal load across larger area

- Reduced hotspot intensity

- Improved cooling efficiency

- Potential for heterogeneous integration

- 3D Stacking and Advanced Packaging:

- Vertical integration of GPU components

- Increased cooling challenges from stacked dies

- Integrated cooling layers and thermal vias

- New thermal interface materials and approaches

- Fundamental changes to cooling system requirements

- Specialized AI Architectures:

- Purpose-built AI accelerators vs. general GPUs

- Optimized circuits for specific operations

- Reduced power for equivalent performance

- Workload-specific efficiency improvements

- Potential TDP reductions through specialization

Here’s what makes this fascinating: The physical limits of monolithic chip scaling are driving a fundamental architectural shift toward disaggregated designs. Rather than continuing to increase the size and power of single GPU dies, manufacturers are exploring “GPU disaggregation” where multiple smaller chips work together as a logical unit. This approach could potentially flatten or even reverse the TDP growth trend by distributing heat generation across larger areas with more efficient cooling access, fundamentally changing the thermal management challenge.
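The thermal benefit of disaggregation comes down to heat flux, which is power divided by the area it leaves through. The sketch compares a hypothetical 700 W monolithic die of 8 cm² with the same 700 W split evenly across four 3 cm² chiplets. The die sizes and the even power split are assumptions, and real hotspots run well above these averages.

```python
def heat_flux_w_per_cm2(power_w: float, area_cm2: float) -> float:
    """Average heat flux through a surface."""
    return power_w / area_cm2


def chiplet_flux_w_per_cm2(total_power_w: float, num_dies: int, die_area_cm2: float) -> float:
    """Average flux per die when total power is split evenly across identical dies."""
    return heat_flux_w_per_cm2(total_power_w / num_dies, die_area_cm2)


monolithic = heat_flux_w_per_cm2(700, 8.0)      # one large die (hypothetical size)
chiplets = chiplet_flux_w_per_cm2(700, 4, 3.0)  # four smaller dies (hypothetical size)
print(f"monolithic: {monolithic:.1f} W/cm^2, chiplets: {chiplets:.1f} W/cm^2")
```

Total power is unchanged; only its density falls, which is what makes each die easier to cool.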

Integration of Cooling and Computing

The boundary between computing hardware and cooling systems is increasingly blurring:

- Co-Designed Systems:

- Cooling designed simultaneously with computing hardware

- Optimized interfaces between chips and cooling

- Purpose-built cooling for specific GPU architectures

- Thermal considerations influencing chip design

- Unified thermal-computational optimization

- Embedded Cooling Technologies:

- Microfluidic channels integrated into chip packages

- On-die cooling structures

- Advanced thermal interface materials

- 3D-stacked chips with interlayer cooling

- Cooling as an integral part of the chip

- Heterogeneous Integration Impacts:

- Chiplet architectures with distributed cooling

- Interposer-level cooling integration

- 3D stacking thermal management

- Advanced packaging with integrated cooling

- System-in-package thermal solutions

But here’s an interesting phenomenon: The next generation of AI hardware is being designed with cooling as a primary consideration rather than an afterthought. This represents a fundamental shift in computing architecture philosophy. For example, several major hardware manufacturers are now including cooling engineers in the earliest stages of chip design, allowing thermal considerations to influence fundamental architecture decisions. This co-design approach could potentially double cooling efficiency compared to retrofitted solutions, enabling significant performance improvements while maintaining manageable thermal profiles.

Novel Materials and Approaches

Innovative materials and physical approaches expanding cooling capabilities:

- Advanced Material Applications:

- Diamond heat spreaders (2000+ W/m·K conductivity)

- Graphene thermal interfaces (5000+ W/m·K in-plane)

- Carbon nanotube arrays for thermal interfaces

- Phase change materials for transient loads

- Metamaterials with engineered thermal properties

- Nanoscale Thermal Management:

- Phononic crystal structures

- Surface acoustic wave cooling

- Near-field radiation heat transfer

- Quantum thermal transport

- Molecular-engineered thermal interfaces

- Biological Inspiration:

- Biomimetic vascular cooling networks

- Self-organizing flow patterns

- Adaptive surface structures

- Hierarchical branching systems

- Self-healing thermal interfaces
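To see why conductivity figures like those above matter, consider the one-dimensional conduction resistance of a layer, R = t / (k·A), and the temperature drop ΔT = P·R across it. The sketch compares a hypothetical 2 mm spreader over 8 cm² carrying 700 W, made of copper (roughly 400 W/m·K) versus diamond (the 2000 W/m·K figure above). A real spreader's main job is lateral spreading, which this 1D estimate ignores, so treat it only as an order-of-magnitude illustration.

```python
def conduction_resistance_k_per_w(thickness_m: float, conductivity_w_per_mk: float,
                                  area_m2: float) -> float:
    """1D through-thickness conduction resistance R = t / (k * A), in K/W."""
    return thickness_m / (conductivity_w_per_mk * area_m2)


def temperature_drop_k(power_w: float, resistance_k_per_w: float) -> float:
    """Temperature difference across a layer carrying power_w watts."""
    return power_w * resistance_k_per_w


THICKNESS_M = 0.002  # 2 mm (hypothetical)
AREA_M2 = 8e-4       # 8 cm^2 (hypothetical)

for material, k in (("copper", 400.0), ("diamond", 2000.0)):
    r = conduction_resistance_k_per_w(THICKNESS_M, k, AREA_M2)
    print(f"{material}: R = {r:.5f} K/W, dT at 700 W = {temperature_drop_k(700, r):.2f} K")
```

A 5x higher conductivity cuts this layer's temperature drop 5x; whether that matters depends on how large the layer's share of the total thermal path is.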

Future GPU Thermal Design Trends

| Trend Category | Current Status | Potential Impact | Timeline | Adoption Challenges |
|---|---|---|---|---|
| Chiplet Architecture | Early commercial | Transformative | 1-3 years | Software, interconnect |
| Integrated Cooling | Advanced R&D | Revolutionary | 3-5 years | Manufacturing, reliability |
| Advanced Materials | Early adoption | Significant | 1-3 years | Cost, supply chain |
| Alternative Computing | Research | Transformative | 5-10 years | Ecosystem, compatibility |
| AI-Optimized Cooling | Early commercial | Substantial | 1-2 years | Complexity, expertise |
| Sustainable Approaches | Growing adoption | Moderate | 1-3 years | Economics, standards |

Sustainability and Efficiency Imperatives

Environmental considerations increasingly shaping thermal design:

- Energy Efficiency Focus:

- Performance per watt prioritization

- Carbon footprint considerations

- Regulatory compliance requirements

- Corporate sustainability commitments

- Economic pressures from energy costs

- Heat Reuse and Recovery:

- Waste heat utilization technologies

- Higher-grade heat from higher-TDP GPUs

- Facility heating and hot water production

- Industrial process heat applications

- Energy recapture and conversion

- Circular Economy Approaches:

- Design for longevity and repairability

- Cooling system material selection

- End-of-life considerations

- Reduced resource consumption

- Lifecycle environmental impact

Ready for the fascinating part? The environmental impact of AI computing is creating unprecedented pressure for thermal efficiency improvements. Some organizations are now implementing “carbon-aware computing” where workloads are scheduled based on both computational needs and environmental impact. This approach can include shifting non-time-sensitive training to periods of lower carbon intensity electricity, geographic distribution of workloads to minimize cooling energy, and dynamic TDP management based on carbon impact. These sophisticated approaches can reduce the effective carbon footprint of AI workloads by 30-50% while maintaining performance, fundamentally changing the relationship between computing performance and environmental impact.
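Shifting non-time-sensitive training to lower-carbon periods can be sketched as a sliding-window search. Given an hourly carbon-intensity forecast, find the contiguous window of the job's length with the lowest average intensity. The forecast values below are hypothetical, and a real scheduler would also weigh deadlines, capacity, and forecast uncertainty.

```python
from typing import Sequence, Tuple


def best_start_hour(carbon_g_per_kwh: Sequence[float], job_hours: int) -> Tuple[int, float]:
    """Return (start_index, mean_intensity) of the lowest-carbon contiguous window."""
    if not 0 < job_hours <= len(carbon_g_per_kwh):
        raise ValueError("job must fit inside the forecast horizon")
    window = sum(carbon_g_per_kwh[:job_hours])
    best_start, best_sum = 0, window
    for start in range(1, len(carbon_g_per_kwh) - job_hours + 1):
        # Slide the window one hour: add the new last hour, drop the old first hour.
        window += carbon_g_per_kwh[start + job_hours - 1] - carbon_g_per_kwh[start - 1]
        if window < best_sum:
            best_start, best_sum = start, window
    return best_start, best_sum / job_hours


# Hypothetical 8-hour forecast in gCO2/kWh.
forecast = [450, 430, 300, 220, 180, 200, 350, 480]
print(best_start_hour(forecast, 3))
```

The sliding update keeps the search linear in the forecast length, which matters when forecasts span days at fine time resolution.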

Frequently Asked Questions

Q1: How should organizations balance GPU performance against TDP considerations when selecting hardware for AI workloads?

Balancing GPU performance against TDP requires a systematic approach: First, analyze your specific workload characteristics—different AI applications have varying sensitivity to raw performance versus efficiency. Training large models typically justifies higher-TDP GPUs despite increased infrastructure costs, while inference workloads often achieve better economics with more efficient, lower-TDP options. Second, evaluate your infrastructure constraints—existing cooling capabilities, power availability, and space limitations may establish practical TDP ceilings regardless of performance benefits. Third, calculate total cost of ownership rather than focusing solely on acquisition costs—include cooling infrastructure, energy expenses, and operational considerations over the expected hardware lifetime. Fourth, consider your deployment scale—larger deployments can better amortize the infrastructure costs of high-TDP GPUs, while smaller deployments may find the overhead prohibitive. Fifth, assess your performance priorities—time-sensitive applications with direct business impact may justify premium cooling investments, while research or background processing might prioritize efficiency. The optimal balance varies significantly based on organizational context—there is no universal answer. Many organizations find that a mixed approach with different GPU types for different workloads provides the best overall economics and performance. This “TDP portfolio” strategy allows targeted application of high-TDP accelerators where their performance justifies the additional costs while using more efficient options for less demanding workloads.

Q2: What are the most common cooling-related mistakes organizations make when deploying high-TDP AI accelerators?

The most common cooling-related mistakes with high-TDP AI accelerators, ranked by frequency and impact: First, underestimating total thermal load—many organizations calculate cooling requirements based on nominal TDP values without accounting for potential excursions above TDP during peak operations or the additional heat from supporting components. Second, neglecting system-level thermal interactions—cooling designed for individual GPUs often fails to address the compound effects of multiple high-TDP devices operating in close proximity. Third, inadequate monitoring and instrumentation—many deployments lack sufficient temperature sensors and monitoring capabilities to identify developing thermal issues before they impact performance. Fourth, overlooking facility integration requirements—advanced cooling technologies often require significant facility modifications that aren’t adequately planned or budgeted. Fifth, insufficient attention to thermal interface materials—the interface between GPUs and cooling solutions represents a critical thermal bottleneck that can undermine otherwise adequate cooling systems. Sixth, failure to account for environmental variations—cooling systems designed for ideal conditions may prove inadequate during seasonal temperature peaks or facility cooling disruptions. Seventh, neglecting operational procedures—even well-designed cooling systems require appropriate maintenance, monitoring, and emergency response procedures that are often overlooked. Organizations that avoid these common mistakes typically achieve 15-30% better thermal performance from the same hardware and cooling technology, demonstrating that implementation quality can be as important as the cooling technology itself.
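The first and fifth mistakes interact through the thermal resistance chain. In steady state, junction temperature is roughly coolant temperature plus power times the sum of the series resistances (junction-to-case, thermal interface, cold plate to coolant). The resistance values, the 35°C coolant, and the 10% power excursion below are hypothetical, chosen only to show how a degraded interface or an above-TDP excursion eats into thermal margin.

```python
from typing import Sequence


def junction_temp_c(power_w: float, coolant_temp_c: float,
                    resistances_k_per_w: Sequence[float]) -> float:
    """Steady-state junction temperature for thermal resistances in series."""
    return coolant_temp_c + power_w * sum(resistances_k_per_w)


R_JC, R_COLD_PLATE = 0.02, 0.03  # hypothetical junction-to-case and cold-plate-to-coolant (K/W)
COOLANT_C = 35.0                 # hypothetical coolant supply temperature

nominal = junction_temp_c(700, COOLANT_C, [R_JC, 0.02, R_COLD_PLATE])
degraded_tim = junction_temp_c(700, COOLANT_C, [R_JC, 0.04, R_COLD_PLATE])   # interface resistance doubled
excursion = junction_temp_c(700 * 1.1, COOLANT_C, [R_JC, 0.02, R_COLD_PLATE])  # 10% above TDP
print(f"nominal {nominal:.1f} C, degraded TIM {degraded_tim:.1f} C, excursion {excursion:.1f} C")
```

Because the resistances add, a small change in any single layer shifts the junction temperature by the full power times that change.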

Q3: How does the thermal design of AI GPUs affect their reliability and lifespan, and what are the economic implications?

The thermal design of AI GPUs affects reliability and lifespan through multiple mechanisms with significant economic implications: First, operating temperature directly impacts failure rates—research indicates that every 10°C increase approximately doubles component failure rates, meaning that effective cooling that lowers operating temperatures by 10-20°C can potentially reduce failures by 50-75%. Second, thermal cycling creates mechanical stress—the expansion and contraction from temperature changes stresses solder joints, interconnects, and packaging materials, with more extreme or frequent cycling accelerating degradation. Third, sustained high temperatures accelerate various failure mechanisms—including electromigration, dielectric breakdown, and package delamination, potentially reducing useful lifespan by 30-60%. Fourth, thermal throttling affects not just performance but reliability—frequent throttling events create additional stress on power delivery components and can contribute to premature failures. The economic implications are substantial—for high-value AI accelerators costing $25,000-40,000 each, extending lifespan from 3 years to 4-5 years through superior cooling can create $5,000-15,000 in value per GPU. Additionally, reduced failure rates directly impact operational costs through lower replacement expenses, decreased downtime, and reduced service requirements. For large deployments, these reliability benefits often exceed the direct energy savings from efficient cooling, fundamentally changing the ROI calculation for cooling investments. Organizations increasingly recognize that premium cooling should be viewed not just as a performance enabler but as a critical reliability investment that directly impacts total cost of ownership.
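The "every 10°C doubles failure rates" rule of thumb is a simplified, Arrhenius-style approximation. Real acceleration depends on the activation energy of each failure mechanism, so the doubling interval is a parameter here, not a constant. The sketch converts a temperature change into a failure-rate multiplier and applies it to a fleet. The 1,000-GPU fleet and 2% baseline annual failure rate are hypothetical.

```python
def failure_rate_multiplier(delta_t_c: float, doubling_interval_c: float = 10.0) -> float:
    """Failure-rate multiplier for a temperature change, assuming rate doubles every interval."""
    return 2.0 ** (delta_t_c / doubling_interval_c)


def expected_annual_failures(fleet_size: int, base_annual_rate: float, delta_t_c: float) -> float:
    """Expected failures per year after shifting operating temperature by delta_t_c."""
    return fleet_size * base_annual_rate * failure_rate_multiplier(delta_t_c)


# Hypothetical fleet: 1,000 GPUs with a 2% baseline annual failure rate.
for delta in (0.0, -10.0, -20.0):
    print(f"{delta:+.0f} C: {expected_annual_failures(1000, 0.02, delta):.1f} failures/year")
```

Running 10°C cooler halves the expected failures and 20°C cooler quarters them, which is where the 50-75% reduction range comes from.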

Q4: What facility infrastructure considerations are most important when planning for high-TDP AI accelerators?

When planning facility infrastructure for high-TDP AI accelerators, several critical considerations should guide your approach: First, power capacity and distribution—high-TDP GPUs require not just sufficient total power but appropriate distribution systems, including properly sized PDUs, cabling, and circuit breakers rated for the substantial current requirements. Second, cooling capacity and architecture—facility cooling must accommodate both the total heat load and the high power density, potentially requiring upgrades to chillers, cooling towers, or the implementation of specialized high-density cooling zones. Third, space and floor loading—advanced cooling for high-TDP accelerators often requires more space per rack and may substantially increase weight, potentially exceeding standard floor loading limits. Fourth, liquid infrastructure requirements—if implementing liquid cooling, facilities need appropriate water distribution, treatment systems, leak detection, and potentially secondary loops to isolate facility water from IT equipment. Fifth, environmental controls—high-density AI clusters create challenges for airflow management, humidity control, and temperature stability that may require enhanced environmental monitoring and control systems. Sixth, redundancy and reliability considerations—as cooling becomes more critical for performance and reliability, appropriate redundancy in cooling systems becomes essential, potentially including backup pumps, chillers, and power systems for cooling infrastructure. The most successful facility implementations typically involve collaborative planning between IT, facilities, and cooling vendors from the earliest stages, with particular attention to future scaling requirements. This integrated approach can reduce implementation costs by 15-30% while creating more adaptable infrastructure compared to siloed planning processes.
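For the power-distribution point, a first-pass rack estimate multiplies GPU count by TDP, adds non-GPU overhead, and converts to line current with the three-phase relation I = P / (√3 · V_LL · PF). Many electrical codes (for example, the US NEC's 125% sizing rule for continuous loads) effectively limit continuous load to 80% of a breaker's rating. The 30% overhead, 415 V supply, 0.95 power factor, and rack configuration below are hypothetical, and local codes and an electrical engineer govern the actual sizing.

```python
import math


def rack_power_w(gpus_per_server: int, gpu_tdp_w: float, servers: int,
                 non_gpu_overhead: float = 0.3) -> float:
    """GPU power plus a hypothetical fractional allowance for CPUs, memory, fans, NICs."""
    return gpus_per_server * gpu_tdp_w * servers * (1.0 + non_gpu_overhead)


def three_phase_current_a(power_w: float, line_voltage_v: float,
                          power_factor: float = 0.95) -> float:
    """Line current for a balanced three-phase load: I = P / (sqrt(3) * V_LL * PF)."""
    return power_w / (math.sqrt(3) * line_voltage_v * power_factor)


def min_breaker_rating_a(continuous_current_a: float, continuous_load_limit: float = 0.8) -> float:
    """Smallest breaker rating that keeps continuous load within the given fraction."""
    return continuous_current_a / continuous_load_limit


# Hypothetical rack: 4 servers x 8 GPUs at 700 W, fed at 415 V three-phase.
power = rack_power_w(8, 700, 4)
current = three_phase_current_a(power, 415.0)
print(f"{power / 1000:.1f} kW, {current:.1f} A per phase, breaker >= {min_breaker_rating_a(current):.1f} A")
```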

Q5: How should organizations prepare their cooling infrastructure for future generations of AI accelerators with potentially higher TDP?

Organizations should prepare for future AI accelerator TDP increases through several strategic approaches: First, implement modular and scalable cooling—design systems with standardized interfaces and the ability to incrementally upgrade capacity without complete replacement as requirements evolve. Second, build in substantial headroom—when designing new infrastructure, plan for 2-3x current maximum TDP to accommodate future generations without fundamental rebuilding. Third, create cooling zones with varying capabilities—designate specific areas for highest-density deployment with premium cooling, allowing targeted infrastructure investment where most needed. Fourth, establish clear upgrade paths—develop explicit plans for how cooling will evolve through multiple hardware generations, including trigger points for technology transitions. Fifth, invest in comprehensive monitoring—deploy detailed thermal and power monitoring to understand current limitations and identify emerging bottlenecks before they become critical. Sixth, develop internal expertise—build knowledge and capabilities around advanced cooling technologies before they become critical requirements. The most forward-thinking organizations are implementing “cooling as a service” approaches internally, where cooling is treated as a dynamic, upgradable resource rather than fixed infrastructure. This approach typically involves standardized interfaces between computing hardware and cooling systems, modular components that can be incrementally upgraded, and sophisticated management systems that optimize across multiple cooling technologies. This flexible, service-oriented approach to cooling infrastructure provides the greatest adaptability to the rapidly evolving AI hardware landscape, allowing organizations to incorporate new cooling technologies as they emerge without requiring complete system replacements.
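The "plan for 2-3x current maximum TDP" guideline can be translated into time under an assumed growth rate. If TDP grows by a fraction g per year, a headroom multiplier H lasts log(H) / log(1 + g) years. The 15% annual growth rate below is a hypothetical planning input, not a forecast.

```python
import math


def years_of_headroom(headroom_multiplier: float, annual_tdp_growth: float) -> float:
    """Years until compound TDP growth consumes the given capacity multiplier."""
    return math.log(headroom_multiplier) / math.log(1.0 + annual_tdp_growth)


# Hypothetical 15%/year TDP growth applied to the 2x and 3x headroom targets.
for headroom in (2.0, 3.0):
    print(f"{headroom:.0f}x headroom lasts ~{years_of_headroom(headroom, 0.15):.1f} years")
```

Comparing the result with the facility's planned life is a quick check on whether the headroom target is conservative or optimistic.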