Introduction

Server heatsinks are a critical component of modern data center infrastructure—especially in AI-driven, high-density computing environments. As artificial intelligence evolves rapidly, data centers are facing unprecedented thermal challenges, and traditional cooling methods are falling short. In this article, we’ll explore the evolution of server heatsinks, the latest technologies, and how they enable dense AI workloads in modern data centers.

Table of Contents

- How AI Is Changing Data Center Cooling Demands

- Modern Server Heatsink Types and Technologies

- Thermal Challenges and Solutions in High-Density Deployments

- Economic Impact of Data Center Cooling Efficiency

- Future Directions for Server Cooling Technologies

- Frequently Asked Questions

1. How AI Is Changing Data Center Cooling Demands

The rise of AI is reshaping data center design and operations. While traditional data centers handled general-purpose computing, modern AI data centers must support extremely dense compute environments, leading to new thermal challenges.

The Issue: AI workloads are pushing cooling systems beyond previous limits.

Picture this: traditional racks operated at 5–10 kW. AI training racks now reach 30–50 kW or more, largely because of GPU-heavy workloads.

A single AI server might house eight or more high-end GPUs, each with a TDP of 300–700 W. With eight GPUs, the GPUs alone generate 2.4–5.6 kW of heat, and with CPUs, memory, and other components, a 2U or 4U server could exceed 6–8 kW total.
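The arithmetic behind these figures is easy to check. The sketch below assumes every GPU dissipates its full TDP as heat, which is a simplification, and `gpu_heat_kw` is a hypothetical helper name used only for illustration.

```python
def gpu_heat_kw(num_gpus: int, tdp_watts: float) -> float:
    """Heat from the GPUs alone, in kW, assuming each runs at its full TDP."""
    return num_gpus * tdp_watts / 1000.0


# Eight GPUs at the low and high ends of the 300-700 W TDP range.
low_kw = gpu_heat_kw(8, 300)
high_kw = gpu_heat_kw(8, 700)
print(f"GPU heat: {low_kw:.1f}-{high_kw:.1f} kW")  # GPU heat: 2.4-5.6 kW
```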

The Risk: Inadequate cooling causes performance throttling, higher failure rates, and excessive operational costs.

A common rule of thumb holds that every 10°C rise in operating temperature roughly doubles the failure rate of electronics. In AI data centers worth millions, that’s unacceptable. Cooling can also consume 30–40% or more of a facility’s total power, so inefficiency affects both costs and compute density.
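The doubling rule can be written as a simple multiplier. This is a minimal sketch of the rule of thumb only, not a reliability model for any particular component; the function name and the 10°C default step are illustrative assumptions.

```python
def relative_failure_rate(delta_t_c: float, doubling_step_c: float = 10.0) -> float:
    """Failure-rate multiplier for a temperature rise of delta_t_c degrees C.

    Encodes the rule of thumb that the rate doubles every doubling_step_c degrees.
    """
    return 2.0 ** (delta_t_c / doubling_step_c)


for rise in (5, 10, 20):
    print(f"+{rise} C -> {relative_failure_rate(rise):.2f}x failure rate")
```

Under this rule, a 20°C rise quadruples the expected failure rate, which is why even modest hotspots matter at scale.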

The Solution: Server cooling systems are undergoing a major technological revolution.

From traditional air to advanced liquid cooling, innovations are improving thermal efficiency, reducing costs, and supporting more sustainable operations.

Impact of AI on Data Center Cooling

| Aspect | Traditional DC | AI Data Center | Scale Increase |
|---|---|---|---|
| Rack Power Density | 5–10 kW | 30–50+ kW | 3–10× |
| Single Server Heat Output | 1–2 kW | 6–8+ kW | 3–8× |
| Coolant Flow Requirement | Low | High | 3–5× |
| Hotspot Complexity | Low | Very High | 5–10× |
| PUE Targets | 1.5–1.8 | 1.1–1.3 | 25–40% better |
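PUE (Power Usage Effectiveness) is the ratio of total facility power to IT equipment power, so the targets in the table translate directly into overhead. The sketch below shows that relationship for a hypothetical 10 MW IT load; the helper names are illustrative, and the overhead includes everything that is not IT load (cooling, power distribution losses, lighting), not cooling alone.

```python
def facility_power_mw(it_load_mw: float, pue: float) -> float:
    """Total facility power, from PUE = total facility power / IT power."""
    return it_load_mw * pue


def overhead_share(pue: float) -> float:
    """Fraction of total facility power that does not reach IT equipment."""
    return (pue - 1.0) / pue


IT_LOAD_MW = 10.0  # hypothetical IT load, for illustration only
for pue in (1.8, 1.5, 1.3, 1.1):
    total = facility_power_mw(IT_LOAD_MW, pue)
    print(f"PUE {pue}: {total:.1f} MW total, {overhead_share(pue):.0%} overhead")
```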

Paradigm Shift in Data Center Design

- From Homogeneous to Heterogeneous: Traditional systems were uniform. AI centers use diverse CPUs, GPUs, and accelerators with varying thermal profiles.

- From Static to Dynamic: AI loads fluctuate rapidly, requiring cooling systems to adapt in real time.

- From Centralized to Distributed: Cooling is shifting from central CRAC/CRAH units to localized solutions closer to the heat source.

Interestingly, this is also driving organizational change—IT and facilities teams must now collaborate closely to balance compute performance with cooling efficiency.

2. Modern Server Heatsink Types and Technologies

Server cooling has evolved from simple fans and heatsinks to sophisticated thermal management systems.

The Issue: Different cooling methods come with trade-offs. Choosing poorly impacts performance, cost, and scalability.

The Challenge: As server TDPs rise, traditional air solutions approach their physical limits—especially in dense AI clusters.

The Solution: Know your options—match cooling methods to specific needs.

Air Cooling Systems

Still the most common, but with advanced variants:

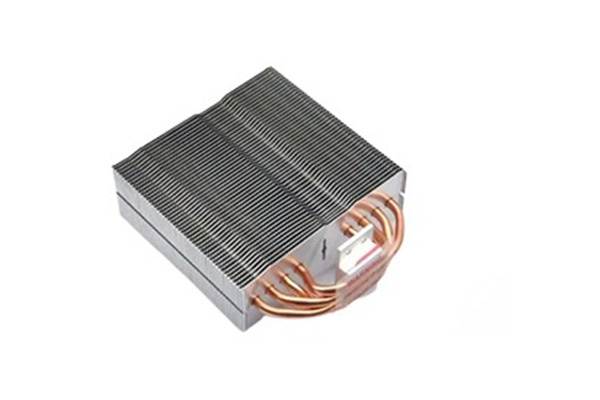

- Conventional Air Cooling:
  - Heatsinks + fans
  - Suitable for <15 kW per rack
  - Works via heat conduction + airflow

- Heat Pipe Assisted Air Cooling:
  - Copper tubes with working fluid
  - Uses phase change for efficient heat transfer
  - Effective up to 25 kW per rack

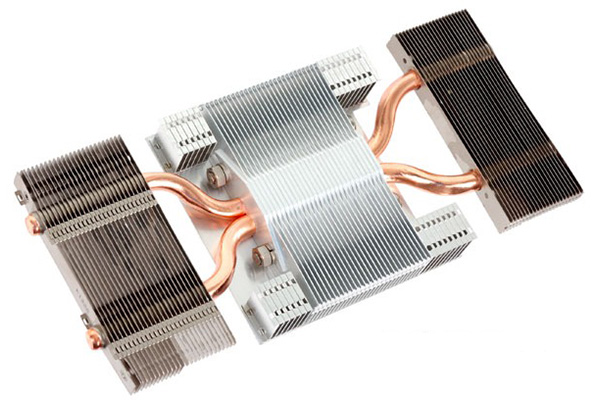

- Vapor Chamber Cooling:
  - Flat sealed chamber replaces heat pipes
  - More surface area, better for hotspots
  - Good for high-performance servers

Limitation: Air has poor heat capacity—efficiency plateaus in dense setups.

Liquid Cooling Systems

Liquid cooling exploits the fact that water holds roughly 3,500–4,000× more heat per unit volume than air:

- Direct-to-Chip Cold Plate Cooling:
  - Cold plates mounted on the CPU/GPU carry liquid coolant
  - Removes heat through a sealed loop
  - Handles 30–60 kW per rack
  - Retrofit-friendly for existing facilities

- Immersion Cooling:
  - Entire servers submerged in dielectric fluid
  - Eliminates hotspots
  - Supports 100 kW+ per rack
  - Requires custom server design

- Two-Phase Immersion:
  - Fluid evaporates at the heat source
  - Condenses and recycles
  - Highest thermal performance and uniformity
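The heat-capacity gap between liquid and air can be estimated from basic fluid properties. The sketch below uses approximate room-temperature textbook values for water and air (assumptions, not measurements from any specific system) and the steady-state relation Q = ρ · V̇ · c_p · ΔT to compare the volumetric flow each fluid needs to carry away the same heat load. The 50 kW rack and 10 K coolant temperature rise are illustrative choices.

```python
# Approximate properties near room temperature (assumed textbook values).
WATER = {"density": 1000.0, "cp": 4180.0}  # kg/m^3, J/(kg*K)
AIR = {"density": 1.2, "cp": 1005.0}       # kg/m^3, J/(kg*K)


def volumetric_heat_capacity(fluid: dict) -> float:
    """Heat stored per cubic metre per kelvin, in J/(m^3*K)."""
    return fluid["density"] * fluid["cp"]


def required_flow_m3_s(heat_w: float, fluid: dict, delta_t_k: float) -> float:
    """Volumetric flow needed to remove heat_w watts with a delta_t_k rise."""
    return heat_w / (volumetric_heat_capacity(fluid) * delta_t_k)


ratio = volumetric_heat_capacity(WATER) / volumetric_heat_capacity(AIR)
water_flow = required_flow_m3_s(50_000, WATER, 10.0)
air_flow = required_flow_m3_s(50_000, AIR, 10.0)

print(f"Water holds about {ratio:,.0f}x more heat per unit volume than air")
print(f"50 kW rack, 10 K rise: {water_flow * 60_000:.0f} L/min of water "
      f"vs {air_flow:.1f} m^3/s of air")
```

Dielectric immersion fluids generally have a lower heat capacity than water, so the ratio for those systems would be smaller than this estimate.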

Cooling Technology Comparison

| Cooling Tech | Cooling Capacity | Deployment Complexity | CapEx | OpEx | Ideal Use |
|---|---|---|---|---|---|
| Air Cooling | Low | Low | Low | High | General-purpose servers |
| Heat Pipe Air | Medium | Low | Med–Low | Med–High | Performance servers |
| Cold Plate Liquid | High | Medium | Med–High | Medium | AI training clusters |
| Immersion Cooling | Very High | High | High | Low | Dense AI data centers |
| Two-Phase Immersion | Extremely High | High | Very High | Very Low | Hyperscale AI workloads |
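As a rough first-pass filter, the per-rack figures quoted in this section can be turned into a lookup. This is a sketch, not a sizing tool: `suggest_cooling` is a hypothetical helper, and because the text leaves the 25–30 kW and 60–100 kW ranges unassigned, the way the code fills those gaps is an assumption.

```python
def suggest_cooling(rack_kw: float) -> str:
    """Map rack power density (kW) to a cooling approach.

    Thresholds follow the per-rack figures quoted in this section; the
    handling of the 25-30 kW and 60-100 kW gaps is an assumption.
    """
    if rack_kw < 0:
        raise ValueError("rack_kw must be non-negative")
    if rack_kw < 15:
        return "conventional air"
    if rack_kw <= 25:
        return "heat-pipe-assisted air"
    if rack_kw <= 60:
        return "direct-to-chip cold plate"  # 25-30 kW assigned here by assumption
    return "immersion"  # 60-100 kW assigned here by assumption


for kw in (8, 20, 45, 120):
    print(f"{kw} kW rack -> {suggest_cooling(kw)}")
```

A real selection would also weigh the CapEx, OpEx, and deployment complexity columns in the comparison table, not density alone.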

Hybrid Cooling Methods

Many data centers now combine systems:

- Selective Liquid Cooling:
  - Liquids for GPUs only, air for other parts
  - Balances performance and cost

- Zonal Cooling:
  - Different zones use different cooling methods
  - Liquid for dense AI, air for low-density racks

- Supplemental Cooling:
  - Add liquid to existing air systems
  - Smooth migration to high-density deployments

The Best Part? Hybrid systems offer upgrade paths without full rebuilds—perfect for traditional data centers adding AI workloads.

3. Thermal Challenges and Solutions in High-Density Deployments

High-density AI server deployments bring a unique set of thermal challenges that require innovative solutions to maintain performance, reliability, and efficiency.

The Issue: Traditional designs can’t handle the extreme heat generated in dense AI clusters.

The Risk: Overheating leads to hotspots, thermal runaway, unstable temps, and hardware degradation—compounded at scale.

The Solution: A multi-layered approach to cooling is required:

Rack-Level Cooling Optimization

Design and layout directly impact cooling effectiveness:

- Airflow Optimization:
  - Isolate hot/cold aisles
  - Use blanking panels to eliminate recirculation
  - Cable management to improve airflow paths

- Integrated Rack Cooling:
  - Rear-door heat exchangers add cooling capacity
  - In-rack coolant distribution units (CDUs)
  - Chimney exhaust to ceiling return vents

- Smart Rack Layouts:
  - Space out servers for better distribution
  - Position racks by power load
  - Use short racks to reduce vertical thermal gradients

Bonus: Rack-level intelligence can monitor temps, control fans, or throttle compute to maintain thermal safety.
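The monitor, control, and throttle behavior described above can be sketched as a small decision function. All thresholds here (27°C target, 35°C limit, 30% minimum fan duty) and the names `RackAction` and `decide` are hypothetical; a production controller would add hysteresis, sensor validation, and vendor-specific fan and power interfaces.

```python
from dataclasses import dataclass


@dataclass
class RackAction:
    fan_duty: float  # fraction of full fan speed, 0.0-1.0
    throttle: bool   # whether to ask the servers to reduce compute power


def decide(inlet_temp_c: float, target_c: float = 27.0,
           max_c: float = 35.0, min_duty: float = 0.3) -> RackAction:
    """Pick a fan duty and throttle flag from one inlet temperature reading."""
    if inlet_temp_c >= max_c:
        return RackAction(fan_duty=1.0, throttle=True)
    if inlet_temp_c <= target_c:
        return RackAction(fan_duty=min_duty, throttle=False)
    # Between target and max: ramp fan duty linearly up to full speed.
    span = (inlet_temp_c - target_c) / (max_c - target_c)
    return RackAction(fan_duty=min_duty + span * (1.0 - min_duty), throttle=False)


for temp in (22.0, 31.0, 36.0):
    print(temp, decide(temp))
```

The linear ramp is chosen only for readability; the point is that fan speed rises first and throttling is the last resort.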

Liquid Cooling Infrastructure Considerations

Planning is critical for successful liquid cooling at scale:

- Cooling Architecture:
  - Centralized vs. distributed CDUs
  - Redundancy for uptime
  - Modular scalability for future growth

- Tubing & Connectors:
  - Leak-proof quick disconnects
  - Flexible lines to absorb vibration
  - Sized properly to reduce pressure loss

- Coolant Selection:
  - High thermal conductivity and heat capacity
  - Non-toxic, non-corrosive, stable
  - Maintenance-friendly and safe

Cooling Solutions for Dense Deployments

| Solution | Cooling Capacity | Implementation Difficulty | Space Efficiency | Energy Efficiency | Scalability |
|---|---|---|---|---|---|
| Optimized Air + Aisle Containment | Medium | Low | Medium | Medium | Low |
| Rear-Door Heat Exchangers | Med–High | Medium | High | Med–High | Medium |
| Row-Based Cooling | High | Medium | High | High | Medium |
| Direct Liquid (Cold Plate) | Very High | High | Very High | Very High | High |
| Immersion Cooling | Extremely High | Very High | Extremely High | Extremely High | Med–High |

Intelligent Thermal Management Systems

Modern cooling depends on smart software as much as hardware:

- Real-Time Monitoring:
  - Dense temperature sensor networks
  - Thermal cameras for hotspot detection
  - Predictive analytics for early alerts

- Dynamic Workload Management:
  - Route tasks to cooler areas
  - Throttle power in overheated zones
  - Balance system load and efficiency

- AI-Driven Cooling Optimization:
  - ML algorithms forecast cooling needs
  - Adjust parameters in real time
  - Reduce power while maintaining safety

Key Insight: The future lies in closed-loop systems that unify IT and cooling for full-stack thermal control.
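One way to picture the "route tasks to cooler areas" idea is a placement function that combines thermal and power data. The sketch below is a simplified illustration under assumed inputs: the zone dictionaries, the 30°C cutoff, and the `pick_zone` name are all hypothetical, and a real scheduler would draw on live telemetry and many more constraints.

```python
from typing import Optional


def pick_zone(zones: list[dict], job_kw: float,
              max_temp_c: float = 30.0) -> Optional[str]:
    """Return the coolest zone that has enough power headroom for the job.

    Returns None when no zone is both below max_temp_c and able to absorb
    job_kw, signalling that the job should wait or be throttled.
    """
    candidates = [
        z for z in zones
        if z["temp_c"] < max_temp_c and z["headroom_kw"] >= job_kw
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda z: z["temp_c"])["name"]


ZONES = [
    {"name": "row-a", "temp_c": 28.0, "headroom_kw": 5.0},
    {"name": "row-b", "temp_c": 24.0, "headroom_kw": 2.0},
    {"name": "row-c", "temp_c": 26.0, "headroom_kw": 10.0},
]
print(pick_zone(ZONES, job_kw=4.0))   # row-c
print(pick_zone(ZONES, job_kw=20.0))  # None
```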

4. Economic Impact of Data Center Cooling Efficiency

Thermal performance isn’t just technical—it’s financial. Cooling efficiency directly affects TCO (Total Cost of Ownership) and ROI.

The Issue: Cooling may consume 30–40% of total power—and more in AI data centers.

The Risk: Inefficiency leads to wasted energy, short hardware lifespan, and high OpEx—all magnified as power costs and ESG rules rise.

The Solution: Invest in better cooling for long-term returns.

CapEx Breakdown for Cooling Investments

| Technology | CapEx per kW Cooling | Pros | Cons |
|---|---|---|---|
| Traditional Air | $2,000–3,000 | Low upfront cost | Limited efficiency/density |
| Direct Liquid | $3,000–5,000 | High density + retrofit friendly | Moderate complexity |
| Immersion Cooling | $4,000–7,000 | Highest density, lowest long-term cost | High CapEx, requires redesign |

Pro Tip: While immersion costs more upfront, it supports much higher compute per square meter—cutting space costs significantly.

OpEx and Energy Efficiency

| Metric | Air Cooling | Optimized Air | Direct Liquid | Immersion |
|---|---|---|---|---|
| PUE | 1.8 | 1.5 | 1.3 | 1.1 |
| 5-Year Energy Cost (10 MW) | $87.6M | $68.0M | $53.3M | $43.8M |
| Maintenance (5 yrs) | $5M | $7M | $10M | $8M |
| Floor Space Cost | $30M | $25M | $18M | $15M |
| Hardware Replacement Cost | $20M | $18M | $15M | $12M |
| 5-Year TCO | $167.6M | $153.0M | $141.3M | $138.8M |
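A quick consistency check on the table above: summing the four itemized cost rows does not reproduce the 5-Year TCO row. The sketch below computes the gap for each option. One plausible reading, which is an assumption and not stated in the table, is that the difference is upfront cooling CapEx for the 10 MW facility.

```python
# Figures in $M, copied from the table above.
ITEMIZED = {
    "Air Cooling":   {"energy": 87.6, "maintenance": 5.0, "floor_space": 30.0, "hardware": 20.0},
    "Optimized Air": {"energy": 68.0, "maintenance": 7.0, "floor_space": 25.0, "hardware": 18.0},
    "Direct Liquid": {"energy": 53.3, "maintenance": 10.0, "floor_space": 18.0, "hardware": 15.0},
    "Immersion":     {"energy": 43.8, "maintenance": 8.0, "floor_space": 15.0, "hardware": 12.0},
}
STATED_TCO = {
    "Air Cooling": 167.6,
    "Optimized Air": 153.0,
    "Direct Liquid": 141.3,
    "Immersion": 138.8,
}


def tco_gap(option: str) -> float:
    """Stated 5-year TCO minus the sum of the itemized rows, in $M."""
    return round(STATED_TCO[option] - sum(ITEMIZED[option].values()), 1)


for option in ITEMIZED:
    print(f"{option}: stated TCO exceeds itemized rows by ${tco_gap(option):.1f}M")
```

The gaps come out to $25M, $35M, $45M, and $60M. For traditional air, $25M matches the midpoint of the $2,000–3,000 per kW CapEx range applied to 10,000 kW, which is consistent with the CapEx reading but does not confirm it.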

Sustainability & Compliance

Cooling efficiency is key to ESG success:

- Lower Carbon Footprint:
  - Energy-efficient systems reduce emissions
  - Supports ESG compliance and investor appeal

- Regulatory Readiness:
  - Helps meet PUE/carbon caps
  - Qualifies for tax breaks, avoids penalties

- Heat Recovery Potential:
  - Captured waste heat powers buildings or earns revenue
  - Converts OpEx into savings or even new income

5. Future Directions for Server Cooling Technologies

As AI accelerates, cooling must evolve even faster.

The Issue: 1,500W+ AI accelerators may arrive in 5–7 years.

The Risk: Traditional methods hit physical and environmental limits.

Emerging Technologies

| Tech | ETA | Efficiency Gain | Benefits | Challenges |
|---|---|---|---|---|
| On-Chip Liquid Cooling | 2–3 years | 40–60% | Extremely efficient | Manufacturing complexity |
| PCM-Integrated Cooling | 1–2 years | 15–25% | Smooths temp spikes | Limited thermal cycles |
| Jet Impingement Cooling | 2–4 years | 30–50% | Excellent for hotspots | System integration |
| Adaptive Cooling | Now–2 years | 10–20% | Energy-saving automation | Control logic complexity |
| Distributed Cooling | 1–3 years | 15–30% | Scalable, local optimization | Integration challenges |

Sustainability and Circular Cooling

- Heat Reuse:
  - Share waste heat with district heating or process use
  - Creates new revenue streams

- Eco-Friendly Materials:
  - Biodegradable liquids
  - Recyclable heatsinks
  - Less rare metal dependency

- Closed-Loop Systems:
  - Zero water waste
  - Minimal chemical discharge
  - Circular resource flow

The Upside: Sustainable cooling offers both ecological and financial advantages—especially under rising energy prices and carbon tax pressure.

Frequently Asked Questions

Q1: What is a server heatsink?

A server heatsink is a system designed to dissipate the heat generated by server components such as CPUs, GPUs, memory, and storage. These systems can range from basic air-cooled heatsinks with fans to advanced liquid and immersion cooling technologies. The main function of a server heatsink is to keep all components within safe temperature limits to prevent overheating, which can lead to performance throttling, hardware failure, or complete system crashes. In modern AI data centers, heatsinks are part of sophisticated thermal management systems built to handle high heat loads while optimizing energy use and spatial efficiency.

Q2: How does AI change the thermal requirements of data centers?

AI dramatically changes cooling demands in three key ways:

- Power Density Surge: AI server racks now demand 30–50 kW or more—up to 10× higher than traditional setups.

- Localized Hotspots: AI accelerators like GPUs produce intense, concentrated heat, requiring precise and localized cooling.

- Sustained Load Patterns: AI training workloads often run continuously at near-peak power, unlike bursty traditional computing tasks.

These changes force a shift toward advanced cooling methods such as direct liquid and immersion cooling, along with redesigned facility layouts for airflow and power distribution.

Q3: What’s the main difference between air and liquid server cooling?

The main difference lies in the heat transfer medium and efficiency:

| Factor | Air Cooling | Liquid Cooling |
|---|---|---|
| Medium | Air | Water or dielectric liquid |
| Thermal Capacity | Low | 3,500–4,000× higher per unit volume (for water) |
| Density Support | Up to ~15 kW per rack | 30–100+ kW per rack |
| Energy Efficiency | Lower (PUE 1.5–1.8) | Higher (PUE 1.1–1.3) |
| Noise Level | High | Low (fewer/no fans needed) |
| Space Efficiency | Moderate | High (supports denser layouts) |
| CapEx | Low | Medium–High |
| Maintenance Complexity | Low (simple fans) | Higher (fluid systems, pumps, valves) |

Q4: What are the key cooling challenges in high-density AI deployments?

Key challenges include:

- Extreme Heat Output: Racks may generate 30–50 kW+—well beyond traditional systems.

- Hotspot Management: AI accelerators create thermal spikes that require precision cooling.

- Space Constraints: More compute per square foot means more heat in less space.

- Airflow Limitations: Traditional methods fail to remove heat quickly enough.

- Energy Overhead: Cooling dense AI systems can drive up energy costs significantly.

- Scalability: Cooling solutions must grow with hardware and workload expansion.

Effective solutions require advanced liquid cooling, optimized rack designs, intelligent thermal management, and comprehensive infrastructure planning.

Q5: How does cooling efficiency affect Total Cost of Ownership (TCO) in data centers?

Cooling efficiency directly influences both operational and capital expenses:

- Energy Costs: Cooling may consume 30–40% of total energy; efficient systems cut this by up to 50%.

- Facility Costs: Efficient cooling supports denser compute, reducing space and construction expenses.

- Hardware Longevity: Better thermal control extends component life and reduces replacement frequency.

- Performance: Avoiding thermal throttling boosts compute output and ROI.

- Maintenance: Efficient, modern systems may reduce servicing needs or avoid outages.

- Growth Flexibility: Scalable cooling means you won’t outgrow your infrastructure.

A high-efficiency system often pays for itself within 3–5 years via energy savings, improved uptime, and extended hardware life.
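The payback claim rests on a simple ratio of extra upfront cost to yearly savings. The sketch below shows that calculation with hypothetical figures chosen only for illustration; it ignores discounting, energy price changes, and financing, all of which a real business case would include.

```python
def payback_years(extra_capex: float, annual_savings: float) -> float:
    """Simple (undiscounted) payback period in years."""
    if annual_savings <= 0:
        raise ValueError("annual_savings must be positive for a payback to exist")
    return extra_capex / annual_savings


# Hypothetical figures in $M: extra upfront cost vs. combined yearly savings.
years = payback_years(extra_capex=20.0, annual_savings=5.0)
print(f"Simple payback: {years:.1f} years")  # Simple payback: 4.0 years
```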